Nurses and naked photos

Opinion + Analysis, Politics + Human Rights

BY Matthew Beard The Ethics Centre 6 NOV 2015

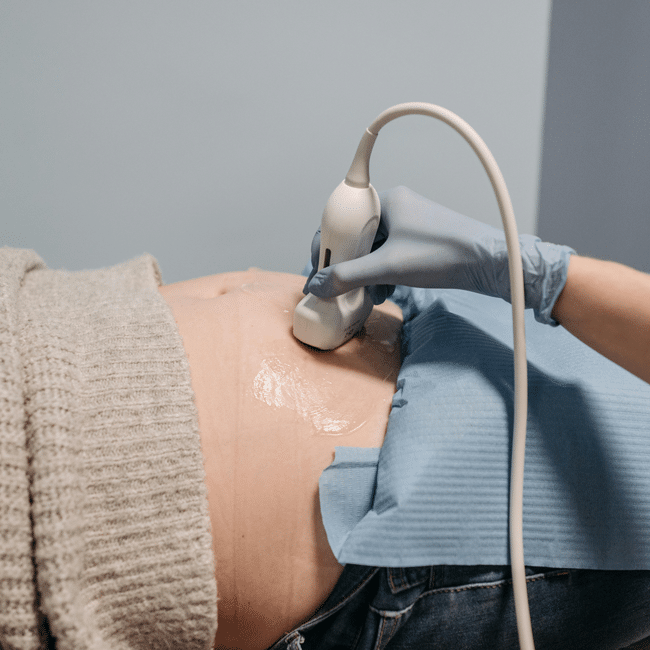

A Sydney nurse took an explicit photo of a schoolteacher who was under anaesthetic and awaiting surgery.

The teacher said, “I am a larger woman. To me, it’s obvious she took it to make fun of fat people.” The teacher is campaigning for legal reform to protect other patients from suffering in similar ways and to see the nurse punished for a criminal offence.

Obviously what this nurse did was wrong. It objectified another human being, treating her as an object of ridicule and subject to the whimsical mood of the nurse. What’s more, the nurse violated the contract of trust that underpins the relationship between patients and the medical profession.

By photographing her naked the nurse also subjected the teacher to deep and ongoing humiliation about her body and usually private sexual organs. The photograph also creates the possibility for further exploitation, distribution, and humiliation.

The teacher said, “I felt like my world was exploding. I felt I was in great peril that this photo was going to destroy my life, my career and that my son would find out.” It seems the psychological ramifications have been severe.

But independently of the consequences, the act was intrinsically wrong. It violated notions of trust, the inherent dignity with which people ought to be treated, and undermined the values that inform the profession of nursing.

It will be distressing to learn that there is currently no law in NSW forbidding behaviour like this. A loophole in the law means that because the photo was not motivated by sexual deviancy, but by the desire to make fun of the patient, no legal recourse was available. It seems reasonable to call for legal reform. This is precisely what Fiona McLay, the teacher’s lawyer, is doing.

The act was incontrovertibly unethical regardless of its legal standing and yet this nurse still felt empowered to take the photo. And worse, the nurse is still practising without restrictions or supervision, having apologised and been found to have shown “the appropriate level of contrition”.

Despite all this, the instinct to turn to law as a way to amend or prevent unethical behaviour is misguided. What is required is for the nursing profession to demonstrate this behaviour will not be tolerated and is directly against the values nursing stands for.

Making such photographs illegal will do little to return trust – patients will still be vulnerable before surgery occurs and nurses will still have the opportunity to take such photos. Making photographs illegal will do little more than allow wrongdoers to be sent to prison.

For us to trust nurses in spite of a story like this, the profession must uniformly state its disapproval of the conduct and demonstrate willingness to enforce its own ethical standards. Law is a clumsy instrument for enforcing ethical behaviour. Re-committing as a profession and as individual professionals to the core values of the field – trust, respect for persons and patient care – is much more likely to avoid instances like this in the future.

There is no reason to excuse the nurse’s behaviour in this case. However, it is worth understanding this incident occurred in a context where crass jokes may well be the norm.

Jokes help us get through the day and although this one went seriously awry we should recognise the context in which it was made. Nursing is a tough field. It’s demanding on the body and the mind, and sometimes errors of judgement – including insensitive, invasive jokes – are a possibility.

As the old saying goes, sometimes you have to laugh to keep from crying.

Humour is a matter of taste, and much relies on pushing against ordinary modes of thinking – including moral norms. Of late there has been heated debate regarding the place and value of racist and sexist humour in comedy. Regardless of the view we take on that particular subject, we should agree that any form of humour that trades off the humiliation of a particular individual, or is done in ways that can have lasting and severe consequences for a person’s wellbeing, will be unethical – even if funny to some.

BY Matthew Beard

Matt is a moral philosopher with a background in applied and military ethics. In 2016, Matt won the Australasian Association of Philosophy prize for media engagement. Formerly a fellow at The Ethics Centre, Matt is currently host on ABC’s Short & Curly podcast and the Vincent Fairfax Fellowship Program Director.

BY The Ethics Centre

The Ethics Centre is a not-for-profit organisation developing innovative programs, services and experiences, designed to bring ethics to the centre of professional and personal life.

Are there any powerful swear words left?

Opinion + Analysis, Health + Wellbeing, Relationships

BY Rebecca Roache The Ethics Centre 4 NOV 2015

Despite its usefulness when you lock your keys in the house, forget about a crucial meeting or trip on your child’s toy, people object to swearing. The justifications are usually moral, or quasi-moral. We’re often told swearing is disrespectful, impolite, aggressive, intimidating or insulting.

It is also common to hear a pragmatic objection to swearing. We risk wearing out swear words by saying them too often. If overused, swear words will lose their power to shock. Too much swearing will result in a bland, emotionally inert vocabulary.

Is this true? Is it already happening?

This pragmatic worry is well founded. Philosopher Joel Feinberg remarked that swear words “acquire their strong expressive power in virtue of an almost paradoxical tension between powerful taboo and universal readiness to disobey”. We need the taboo to make swear words powerful in the first place. And we need to break the taboo in order to make use of their power.

If we are too eager to disobey a taboo then we risk losing the taboo. This frequently happens in other areas of life, often for the better. Public displays of homosexuality were shocking 20 years ago but – at least in the UK and many other countries – not now, largely thanks to an increasing visibility and openness about sexuality.

This might be happening with swearing too. There are more opportunities to encounter swearing, due to increasingly liberal attitudes and the proliferation of uncensored discussion on the internet. A report by the BBC and the ASA (the Advertising Standards Authority) found that “fuck” – once close to the pinnacle of offensiveness – is less shocking than it used to be.

But this underestimates the complexity of how we shock people by swearing. While “fuck” is pretty ubiquitous in some situations, there remains a strong taboo against using it in other contexts. We probably have a few years to go before the Queen uses her Christmas Day speech to report that she has had a “fucking shit year” rather than an annus horribilis. It will be a while before your doctor breaks news of your terminal illness by saying, in a most sympathetic voice, “You are totally fucked”.

And even in contexts where we can swear more freely, much depends on how we swear. Your Facebook friends may not bat an eyelid at your Saturday night status update, “Fucking wasted again”.

You might, however, put a few noses out of joint if you respond to their cheerful birthday wishes with a “Fuck you!” Using swear words to shock is not purely a matter of the availability of shocking words.

In any case, even if “fuck” really were to lose its shock value we still have plenty else to choose from. Many people who don’t mind “fuck” still draw the line at “cunt”. If you really want to get someone’s attention in these enlightened times, you could utter a racist or homophobic slur. The offensiveness of this sort of language has increased at the same time as the offensiveness of “fuck” has decreased.

There are persuasive moral reasons why you shouldn’t use prejudicial language, but the issue here is not the ethics of offensive language, but whether we have any powerful swear words left. The availability of shocking words tracks what people find offensive. As long as we remain offended by something or other, we will have the capacity to offend people by referring to it. And if offensive ways to refer to it don’t exist, we can invent them.

If you’re looking for a way to shock and offend, to express anger or to help you cope with the pain of stepping barefoot on a piece of Lego, you don’t need to resort to hate speech. You don’t need to swear either. Just break a few taboos.

Go on Facebook and tell your best friend his new baby is ugly. Tell your boss she’s put on weight. Loudly summarise your preferred masturbation techniques for the benefit of everyone in your train carriage.

Hell, you don’t even need to use language. Give your colleagues the middle finger. Turn up to work naked. Take a dump in the aisle during a church service. Write an online essay replete with swear words and disconcerting examples.

With a little imagination we can find limitless and powerful ways to offend people if that’s what we want to do. We don’t need to give a fuck about whether our favourite swear words are declining in their capacity to shock.

BY Rebecca Roache

Rebecca Roache is a British philosopher and Senior Lecturer at Royal Holloway, University of London, known for her work on the philosophy of language, practical ethics and philosophy of mind.

Anthem outrage reveals Australia’s spiritual shortcomings

Opinion + Analysis, Health + Wellbeing, Relationships

BY Simon Longstaff The Ethics Centre 2 NOV 2015

This article was originally published on The Age.

The decision by Cranbourne Carlisle Primary School in 2015 to allow some of its students a temporary exemption from singing Australia’s national anthem has sparked outrage in some quarters.

Those exempted all belonged to the Shiite faith, a branch of Islam. But I expect these students usually sang the anthem with as much pride as any other Australian child.

However, on this occasion, the opportunity to sing fell during the month of Muharram – a period of mourning during which Shiites remember and honour their founder, Imam Hussein. This is a month of solemnity in which Shiites are to avoid all joyful acts, including singing. It captures some of the tone of the Christian period of Lent which was traditionally a time devoted to pious reflection and avoiding overtly pleasurable activities.

So what might be said about a school’s decision to let children put religious observance ahead of patriotic duty?

The first thing to note is there would have been barely a ripple of dissent if the issue had been one of physical capacity. Imagine a young girl who has recently returned to school after throat surgery. She feels fine. Her voice has returned to normal and all discomfort has gone.

However, her doctor has warned she is not to shout or sing for the next month to protect against scarring. She must also avoid dust and smoke, and stay indoors where possible.

Her first day back coincides with the school assembly. By tradition, the school meets under the spreading oaks that are its finest feature. The classes are formed up around a central pole where the Australian flag is raised each morning as the national anthem is sung by all.

The student wants to join her classmates at assembly and participate equally in the proceedings. Like every child her age, she does not want to stand out from the crowd. But her mother has explained the situation to the school principal, so instead of singing the national anthem with gusto, she finds herself sitting inside her classroom waiting for the others.

Now, would this student, her parents or the school authorities be blamed for not singing the national anthem or for not being at assembly? I think not.

Yet the analogy between this hypothetical and the Carlisle case is good in all respects but one. The risk faced by students at Carlisle was of a spiritual rather than physical order.

The idea of spiritual risk or disorder has become unfamiliar in an increasingly secular society. For many people, it is perplexing that someone might genuinely fear ‘sinful conduct’ or that such a concern takes precedence over civic duty.

Yet not so long ago a majority of Australians believed in hell and the possibility of ‘eternal perdition’. Indeed there are still people who would choose to be imprisoned or die rather than act against their religious beliefs or conscience.

The fact that the spiritual worldview is so unfamiliar to us does not make it any less real or powerful for those who are pious and concerned for the health of their souls.

One might doubt the validity of the metaphysics but not the sincerity of the believers.

The Shiite children of Cranbourne Carlisle Primary School were neither rejecting nor disrespecting Australia when they temporarily withdrew from their assembly. They were protecting their spiritual integrity. They were also accepting the advantages of living in a liberal democratic society that guarantees their right to the peaceful enjoyment of religious freedom.

The children who remained in assembly were singing the national anthem in support of this ideal. For all Australians are young and free.

BY Simon Longstaff

Simon Longstaff began his working life on Groote Eylandt in the Northern Territory of Australia. He is proud of his kinship ties to the Anindilyakwa people. After a period studying law in Sydney and teaching in Tasmania, he pursued postgraduate studies as a Member of Magdalene College, Cambridge. In 1991, Simon commenced his work as the first Executive Director of The Ethics Centre. In 2013, he was made an officer of the Order of Australia (AO) for “distinguished service to the community through the promotion of ethical standards in governance and business, to improving corporate responsibility, and to philosophy.” Simon is an Adjunct Professor of the Australian Graduate School of Management at UNSW, a Fellow of CPA Australia, the Royal Society of NSW and the Australian Risk Policy Institute.

Would you kill baby Hitler?

Opinion + Analysis, Politics + Human Rights, Relationships

BY Matthew Beard The Ethics Centre 1 NOV 2015

The New York Times Magazine polled its readers. “If you could go back and kill Hitler as a baby, would you do it?” 42% of people said yes.

Why they decided to ask the question is a mystery, but it sparked a meme that’s been bouncing around the internet ever since. The meme reached its zenith when Huffington Post asked Jeb Bush whether he would do the deed.

“Hell yeah, I would,” he declared. “You gotta step up, man.” Bush acknowledged the inherent fragility of time travel – as explored by scholars Marty McFly and Doc Brown – but ultimately conceded, “I’d do it. I mean, Hitler…”

Before you saddle up behind Jeb on the time travel express to Hitler’s nursery, here are a few things to consider.

Baby Hitler is innocent

Most ethical justifications for killing start with the presumption that people don’t deserve to be killed unless they’ve done something to forfeit their right to life. Depending on who you speak to, this might include being involved in an attack against somebody else, being in the military or even trafficking drugs.

Unless baby Hitler is running a Walter White-esque meth operation out of his preschool, he’s done nothing to forfeit his right to life.

Until he does – say, by orchestrating genocide – Hitler retains it. Killing him as a baby would therefore be wrong.

Acts of evil have personal costs

Knowingly doing the wrong thing – like killing an innocent baby – carries a personal cost. When we transgress against deep moral beliefs we can experience debilitating guilt, shame, anxiety and depression. Such actions can even come to define us permanently.

Some academics are now using the term ‘moral injury’ to describe the personal costs of acting against our moral beliefs. “Don’t kill innocent children” is arguably the most deeply held moral belief any of us have. Violating that norm comes at a severe price.

Doing something wrong for the greater good doesn’t always work

German philosopher Immanuel Kant rejected the idea that ethics was just about “the greatest good for the greatest number” (a view known as consequentialism). Instead he argued that ethics was about doing what you are duty-bound to do – such as telling the truth and not killing.

He once considered the question of whether you could lie to save someone’s life. A murderer asks you for the location of a certain baby because he wants to murder him. Can you lie to save the baby’s life? Kant argued that you couldn’t – because you can’t guarantee that your lie will save the baby.

If you send the murderer to the bowling alley knowing the baby is upstairs, who’s to say the babysitter hasn’t taken the baby to the bowling alley without your knowledge? Suddenly you’ve told a lie and the baby is still dead, so you’ve made the situation worse overall.

In the case of Hitler, you would need to be certain his death would prevent the rise of Nazism and the Holocaust. If – as many historians contend – the rise of Nazism was a product of a range of social factors in Germany at the time, then killing a baby isn’t going to reverse those social factors. Butchering the babe might even allow for the rise of another power – equal to or worse than Hitler.

And you’ve still killed a baby.

Killing isn’t necessary

Some people argue that killing the innocent might be justified when it is the lesser evil. But even in that case it has to be absolutely necessary. If time travel is possible, it seems unlikely to be necessary to kill baby Hitler as opposed to, say, kidnapping him, adopting him out to a Jewish family or offering him a scholarship to the Vienna School of Fine Arts.

Human lives are of immense, perhaps even infinite value. To take one – especially an innocent one – when it isn’t absolutely necessary is a serious ethical issue.

Dangerous precedent

Where do we draw the line? Once we’re done with Hitler which baby is on the block next? Pol Pot? Stalin? The guy who spoiled the end of Harry Potter and the Order of the Phoenix for me in high school? We would require a set of consistent, universal ethical principles by which to determine which babies deserve death and which don’t.

Giving baby Hitler all of our murderous attention betrays our cognitive and personal bias – surely there are other worthy candidates? How many lives must a person take before their infant self is a legitimate target for killing? What standard will be applied?

For me, I wouldn’t do it. I mean, just look at baby Adolf…

David Pocock’s rugby prowess and social activism is born of virtue

Opinion + Analysis, Business + Leadership, Health + Wellbeing

BY Matthew Beard The Ethics Centre 30 OCT 2015

In the Wallabies’ semi-final match against Argentina, David Pocock played over 70 minutes with a broken nose. Although Adam Ashley-Cooper would walk away as man-of-the-match thanks to a hat-trick of tries, most commentators agree Pocock’s heroics had as much impact on the result as anything else.

Pocock played all 80 minutes, made 13 tackles, ran the ball eight times, broke two tackles and made four turnovers. Despite only playing four games in the World Cup, he leads the tournament for turnovers with 14.

Fox Sports News described him as “the world’s best player” whilst the Sydney Morning Herald labelled him “The single most important player to take the field come Sunday morning”.

None of this should come as much surprise – as a back rower, Pocock’s success is derived as much from willpower, courage and perseverance as from skill. And Pocock has them in spades. He explains:

“My parents were always clear with my brothers and I when we were growing up that you have to have the courage of your convictions and that when you commit to something you must fully commit.”

That quote didn’t come from a post-match interview but from one of Pocock’s blog posts following his arrest in December 2014. Unlike some other footballers, Pocock’s arrest wasn’t a boozy 3am affair. A spokesperson for the environment and public supporter of Julia Gillard’s Carbon Tax, he was arrested for a nonviolent protest against Whitehaven’s coal mine at Maules Creek.

Pocock spent around 10 hours chained to a farmer who was, in turn, chained to one of Whitehaven’s superdiggers.

This wasn’t much of a surprise to those following Pocock’s career. He has been outspoken on a range of issues for several years. He and his partner, Emma Palandri, refuse to marry until LGBTQIA+ couples in Australia can do the same. Although describing themselves as married, the pair have not signed the legal documents to verify it. “I don’t see the logic in excluding people from making loving commitments to each other,” Pocock explains.

It’s not the only time Pocock has stood up for LGBTQIA+ rights. In a match against the NSW Waratahs earlier this year he reported NSW lock Jacques Potgieter for repeatedly using a homophobic slur. Amidst some criticism (and praise) Pocock refused to yield – even as some speculated it would cost him the Wallabies captaincy.

Pocock has repeatedly put his head on the block for the causes he believes in.

Courage – or fortitude as Thomas Aquinas called it – is the virtue that enables you to do what you believe to be right despite the difficulties involved. No matter the cost. Not a surprising trait in a man who fellow Wallaby Michael Hooper says “puts his head in some places that are pretty dangerous and gets the ball out”.

After Maules Creek the Australian Rugby Union issued Pocock with a formal warning. They wrote, “While we appreciate David has personal views on a range of matters, we’ve made it clear that we expect his priority to be ensuring he can fulfil his role as a high-performance athlete”.

It’s a tough ask for someone like Pocock to separate his politics from his rugby. Pocock’s on-field success cannot be readily distinguished from his off-field activism. In a sentiment widely attributed to Aristotle (but actually a summary of his views), “We are what we repeatedly do. Excellence, then, is not an act, but a habit”.

Pocock’s courage under fire, his perseverance and his commitment are habits. What makes him a high-performance athlete isn’t just his physical frame but his mental discipline and personal virtue.

We can’t switch virtues on and off when they suit us – we either have them or we don’t. When Pocock gets up to make a crucial tackle or to reach the breakdown a fraction earlier than his rivals to steal the ball he demonstrates the same commitment that saw him support LGBTQIA+ rights, defend the environment, speak about his eating disorder or discuss his faith publicly.

Pocock could no more remain silent off the field than he could hold back on it. His character disposes him to holding fast to what he believes is good. Doing otherwise would dull both his crucial sporting instincts and what makes him an upstanding human being.

Though there are no doubt those who disagree with Pocock’s views, you can’t praise his on-field achievements whilst also condemning his off-field activism. They’re children of the same beast – his unwavering commitment.

There is ongoing debate regarding whether or not professional athletes should serve as role models. The ability to play sport well doesn’t translate into the moral virtues required in a role model. As Charles Barkley famously remarked, “Just because I dunk a basketball doesn’t mean I should raise your kids.” If Pocock’s prominence both on and off the field is born of the same character traits, then his example allows us to see the role model debate in a new light.

Legendary NFL coach Vince Lombardi once remarked, “The difference between a successful person and others is not a lack of strength, not a lack of knowledge, but rather, a lack of will”. Had he not died 18 years before David Pocock was born, you’d swear Lombardi was talking about him.

Greer has the right to speak, but she also has something worth listening to

Opinion + Analysis, Relationships, Society + Culture

BY Aoife Assumpta Hart The Ethics Centre 30 OCT 2015

Early on in my transition I was physically assaulted whilst boarding a bus. My back had been turned, my hands occupied with digging in my purse for a ticket when a solid fist struck me from the side – a sucker punch.

He yelled “TRANNY!” and trotted away at a mild gait, unhindered by any witnesses.

This thug’s annoyance resulted from me having just declined his offer of a nugget of crack cocaine in exchange for an alleyway blowjob. Since I was a transwoman waiting for public transit, I was clearly available to be propositioned for sex.

I know one thing for certain as I look back on that incident. This vicious bloke had never read Simone de Beauvoir. He had never read Germaine Greer.

And yet according to students from Cardiff University, Germaine Greer is somehow responsible for me getting smacked on the skull because of her views about transgender issues. What are these violent ideas? In her own words:

I don’t think that post-operative transgender men – M to F transgender people – are women . . . I’m not saying that people should not be allowed to go through that procedure, what I’m saying is it doesn’t make them a woman.

A petition written by Cardiff University Students’ Union’s women’s officer reads:

Such attitudes contribute to the high levels of stigma, hatred and violence towards trans people – particularly trans women – both in the UK and across the world.

So, an academic lecturing in Wales who understands “woman” to mean “an adult human female” is complicit in the murder of trans women (often poor and of a racial minority) by savage men (almost always by men)?

Let’s be honest about liberals and their armchair activism. Slagging off older women on Twitter or from the ivory tower is a hell of a lot easier than confronting actual male violence.

Greer, following feminists such as Simone de Beauvoir, assesses that male and female sexuation is not a myth or a personal feeling, but material states of embodiment within ethical circumstances. She rejects a world in which a bepenised Caitlyn Jenner is dubbed Woman of the Year without having actually lived as a woman for an entire year. Greer denies that feeling you are actually female inside is enough to define you as female.

I signed a petition in support of Germaine Greer because I support her right to speak. As an academic I’m not afraid of lively and vigorous argument. As a transsexual I’m tired of my experience being erased in service to genderism. As a human person I would like a world without gender where we’re free to express ourselves regardless of sex.

Trans activists tell us “gender is not sex” like a mantra bereft of enlightenment. Well, what is gender? They never answer. Where did it come from? They never answer.

Sexual difference is the reality of how mammals reproduce. Gender is a socially constructed hierarchy of sex-based norms imposed onto bodies. Feminism contends that the specific reproductive capacities of female persons are exploited and dominated by male power, with gender as a mechanism of control.

Transgenderism, however, disavows that biological sex is an actual, real category people can fall into. Instead, trans activists adhere to the claim that being male or female is a matter of arbitrary opinion. A male must really be female if ‘she’ possesses a subjectively-identifiable cache of feminine personality traits. By her own command, she was always female, will always be female because thinking makes it so.

Greer rejects gender identity as a coherent essence. Attentive to the practical circumstances of sexuality and power, Greer defines woman as the female sex, and this by definition is exclusive of males – no matter how arbitrarily feminine their inner disposition might be.

By defining sex as a materially determined fact and not imaginary assignment, Greer states an anthropological truth. You may not fancy her tact but objecting to her tone is not sufficient to overcome the feminist analysis of gender that Greer advances.

Gender is a synthetic ideology imposed on sex. To claim males who express “feminine” preferences must actually be female inside is to try to turn ideology into reality. And it is to do so on the basis of sex-based stereotypes.

Because these views can appear harsh, troubling, and oppositional to the worldview of many trans sympathisers, Greer’s opponents turn to the most regressive, chauvinistic tactic – aggressively enforcing silence. Rather than providing cogent arguments concerning gender identity, trans activists choose the tactic of no platforming.

Why are people afraid of Greer? Because she is a woman saying no to gender.

Read a different take on trans women and Germaine Greer here, by Helen Boyd.

BY The Ethics Centre

The Ethics Centre is a not-for-profit organisation developing innovative programs, services and experiences, designed to bring ethics to the centre of professional and personal life.

Orphanage ‘voluntourism’ makes school students complicit in abuse

Opinion + Analysis

Politics + Human Rights

BY Karleen Gribble The Ethics Centre 23 OCT 2015

It’s great that Australian schools want to encourage their students to help others and gain perspective on their privilege. But visits to orphanages overseas are not the answer. To quote from the Friends International campaign, “Children are not tourist attractions”.

The first thing to understand is that orphanage life is damaging to children.

Children in orphanages are cared for as a group rather than as individuals. Life is regimented – each child has many different caregivers and little individual attention. Such care hurts children and may result in psychological damage and developmental delays.

Rates of physical and sexual abuse are also high in orphanages. Recognition of the detrimental impact of institutional care led to the closure of all orphanages in Australia decades ago.

Short-term orphanage volunteers who play with and care for children are just adding to this harm. They increase the number of caregivers a child experiences and are just more people who abandon them.

Visiting students may not see these harms. Necessity has forced children in orphanages to act cute to get scarce attention – something called “indiscriminate affection”. School students easily mistake this for genuine happiness. Some of those who run orphanages will also encourage children to be friendly to the visitors in the hope this will increase donations.

Donations are a big problem. In some cases “orphans” are actually created by unscrupulous organisations who pay families to hand over their children in order to collect visitor donations. In Cambodia, orphanage numbers have doubled during a time when the number of children without parents has declined.

Australian schools sometimes seek to improve conditions in orphanages by funding education or medical resources. This can also draw children into orphanages. It’s a dire state of affairs when a loving family sends their child away because an orphanage is the only option for their child to go to school or get medical care.

This is what happened in Aceh, Indonesia where 17 new orphanages were built for “tsunami orphans”. However, 98% of the children in these orphanages had families and had been placed there to gain an education.

Most children living in orphanages around the world have at least one living parent.

Child protection authorities in Australia would not allow school students to go into the homes of vulnerable children so that they could gain an understanding of their situation. Schools should not take advantage of lower standards in other places to give their students a good experience.

What I know from talking to those involved in orphanage volunteering is that they often believe what they are doing is somehow exempt from these problems. Is it possible for school orphanage volunteering trips to be OK? What might harm mitigation look like?

Due diligence may reduce the possibility of working with orphanages that are exploiting children for financial gain.

Schools should resource orphanages in a way that avoids drawing children away from their families. They can do this by making the education programs or medical care they fund equally available to poor children in the community.

Schools can ensure their students do not interact with children. This prevents the harm to children arising from having too many caregivers. Students can instead take on tasks that free up caregivers to spend more time with children, such as cooking, cleaning or maintenance work.

When visiting the orphanages, school staff might educate their students about orphanages. They might talk about children having at least one parent who could care for them if given support.

Perhaps they could discuss the high rates of physical and sexual abuse within orphanages. Or explain child development principles and the importance of one-on-one care for young children. They can help their students understand why keeping children in families and out of orphanages is important.

Theoretically, it might be possible for schools to do all of these things but I am not aware of any school that has. In particular, not allowing students to interact with children removes what schools seem to consider an essential component of these trips.

Schools should develop sister-school relationships with overseas schools or even schools in disadvantaged communities in Australia. It’s great to see that some schools are already leading the way on this front.

Such arrangements foster understanding in a situation where there is more equality in the relationships and fewer pitfalls. If Australian schools are genuine about cross-cultural exchange, they shouldn’t be fostering last century’s model of child welfare.

Read Rev Dr Richard Umbers’ counter-argument here.

BY Karleen Gribble

Dr Karleen Gribble is an Adjunct Fellow in the School of Nursing and Midwifery at the University of Western Sydney.

The undeserved doubt of the anti-vaxxer

Opinion + Analysis

Health + Wellbeing, Science + Technology

BY Pat Stokes The Ethics Centre 17 OCT 2015

For the last three years or so I’ve been arguing with anti-vaccination activists. In the process I’ve learnt a great deal – about science denial, the motivations of alternative belief systems and the sheer resilience of falsehood.

Since October 2012 I’ve also been actively involved in Stop the AVN (SAVN). SAVN was founded to counter the nonsense spread by the Australian Vaccination-skeptics Network. According to anti-vaxxers SAVN is a Big Pharma-funded “hate group” populated by professional trolls who stamp on their right to free speech.

I’m afraid the facts are far more prosaic. There’s no Big Pharma involvement – in fact there’s no funding at all. We’re just an informal group of passionate people from all walks of life (including several research scientists and medical professionals) who got fed up with people spreading dangerous untruths and decided to speak out.

When SAVN started in 2009, antivax activists were regularly appearing in the media for the sake of “balance”. This fostered the impression of scientific controversy where none existed. Nowadays, the media understand the harm of false balance and the antivaxxers are usually told to stay home.

There’s a greater understanding that scientists are best placed to say whether or not something is scientifically controversial. (Sadly we can’t yet say the same for the discussion around climate change.) And there’s much greater awareness of how wrong – and how harmful – antivax beliefs really are.

No Jab, No Pay

This shift in attitudes has been followed by significant legislative change. Last year NSW introduced ‘No Jab, No Play’ rules. These gave childcare centres the power to refuse to enrol non-vaccinated children. Queensland and Victoria are planning to follow suit.

In April, the Abbott government introduced ‘No Jab, No Pay’ legislation. Conscientious objectors to vaccination could no longer access the Supplement to the Family Tax Benefit Part A payment.

The payment has been conditional on children being vaccinated since 2012, as was the payment it replaced. But until now vaccination refusers could still access the supplement by having a “conscientious objection” form signed by a GP or claiming a religious belief exemption. The new legislation removes all but medical exemptions.

The change closes loopholes that should never have been there in the first place. Claiming a vaccination supplement without vaccinating is rather like a childless person insisting on being paid the Baby Bonus despite being morally opposed to parenthood.

The new rules also make the Child Care Benefit (CCB) and Child Care Rebate (CCR) conditional on vaccinating children. That’s not a trivial impost – estimates at the time of the announcement suggested some families could lose around $15,000 over four years.

What should we make of this? A necessary response to an entrenched problem or a punitive overreaction?

Much of the academic criticism of the policy has been framed in terms of whether it will in fact improve vaccination rates. Conscientious objector numbers do now seem to be falling, although it remains to be seen whether this is due to the new policies.

Embedded in this line of criticism are three premises:

- Improvements in the overall vaccination rate will come through targeting the merely “vaccine-hesitant” population.

- Targeting the smaller group of hard core vaccine refusers, accounting for around 2% of families, would be counterproductive.

- The hard core is beyond the reach of rational persuasion even via benefit cuts.

These are of course empirical questions and open to testing. I suspect the third assumption is true. It’s hard to see how someone who believes the entire medical profession and research sector is either corrupt, inept, or both, or that government and media deliberately hide “the Truth”, would ever be persuaded by evidence from just those sources.

A few antivaxxers even believe the germ theory of disease itself is false. In such cases no amount of time spent with a GP explaining the facts is going to help.

In recent years, antivax activists have tended to frame their objections to legislation like No Jab, No Pay in terms of individual rights and freedom of choice.

Yes, they base their “choices” on beliefs ranging from the ridiculous to the repugnant (including the claim that Shaken Baby Syndrome is really the result of vaccination not child abuse), but their fundamental objection is that the new policies are coercive. They make the medical procedure of vaccination compulsory, which they regard as a violation of basic human rights.

Part of this isn’t in dispute – these measures are indeed coercive. Whether they amount to compulsory vaccination is a more complex question. In my view they do not, because they withhold payments rather than issuing fines or other sanctions, although that can still be a serious form of coercive pressure. Such moves also have a disproportionate impact on families who are less well-off, revealing a broader problem with using welfare to influence behaviour.

Nonetheless, it’s not particularly controversial that the state can use some coercive power in pursuit of public health goals. It does so in a range of cases – from taxing cigarettes to fining people for not wearing seatbelts. Of course there is plenty of room for disagreement about how much coercion is acceptable. Recent discussion in Canberra about so-called “nanny state” laws reflects such debate.

But vaccination doesn’t fall into the nanny state category because vaccination decisions aren’t just made by and for individuals. Several different groups rely on herd immunity to protect them. Herd immunity can only be maintained if vaccination rates within the community are kept at high levels. By refusing to contribute to a collective good they enjoy, vaccine refusers provide a classic example of the Free Rider Problem.

No Jab, No Pay legislation is not about people making vaccination decisions for themselves, but on behalf of their children. The suggestion that parents have some sort of absolute right to make health decisions for their children just doesn’t hold water. Children aren’t property, nor are our rights to parent our children how we see fit absolute. No-one thinks the choice to abuse or starve one’s child should be protected, for example.

And that gives the lie to the “pro-choice” argument against these laws – not all choices deserve respect.

Thinking in a vacuum

The pro-choice argument depends on the unspoken assumption there is room for legitimate disagreement about the harms and benefits of vaccination. That gets us to the heart of what motivates a great deal of anti-vaccination activism – the issue of who gets to decide what is empirically true.

Antivax belief may play on the basic human fears of hesitant parents but the specific contents of those beliefs don’t come out of nowhere. Much of it emerges from what sociologists have called the “cultic milieu” – a cultural space that trades in “forbidden” or “suppressed” knowledge. This milieu is held together by a common rejection of orthodoxy for the sake of rejecting orthodoxy. Believe whatever you want – so long as it’s not what the “mainstream” believes.

This sort of epistemic contrarianism might make you feel superior to the “sheeple”, the unawake masses too gullible, thick or corrupted to see what’s really going on. It might also introduce you to a network of like-minded people who can act as a buffer from criticism. But it’s also a betrayal of the social basis of knowledge – our radical epistemic interdependency.

The thinkers of the Enlightenment bid us sapere aude, to “dare to know” for ourselves. Knowledge was no longer going to be determined by religious or political authority, but by capital-r Reason. But that liberation kicked off a process of knowledge creation that became so enormous specialisation was inevitable. There is simply too much information now for any one of us to know it all.

Talk to antivaxxers and it becomes clear they’re stuck on page one of the Enlightenment project. As Emma Jane and Chris Fleming have recently argued, adherence to an Enlightenment conception of the individual autonomous knower drives much conspiracy theorising. It’s what happens when the Enlightenment conception of the individual as sovereign reasoner and sole source of epistemic authority confronts a world too complex for any individual to understand everything.

As a result of this complexity we are reliant on the knowledge of others to understand the world. Even suspicion of individual claims, persons, or institutions only makes sense against massive background trust in what others tell us.

Accepting the benefits of science requires us to do something difficult – something nothing in our evolutionary heritage prepares us to do. It requires us to accept that the testimony of our direct senses no longer has primary authority. And it requires us to accept the word of people we’ve never met who make claims we can never fully assess.

Anti-vaxxers don’t like that loss of authority. They want to think for themselves, but they don’t accept we can’t think in a vacuum. We do our thinking against the background of shared standards and processes of reasoning, argument and testimony. Rejecting those standards by making claims that go against the findings of science without using science isn’t “critical thinking”. No more than picking up the ball and throwing it is “better soccer”.

This point about authority tells us something ethically important too. Targeting the vaccine-hesitant rather than the hard core refusers makes a certain kind of empirical sense.

But it’s important to remember the hard core are the source of the misinformation that misleads the hesitant. In the end, the harm caused by antivax beliefs is due to people who abuse the responsibility that comes with free speech. Namely, the responsibility to only say things you’re entitled to believe are true.

Most antivaxxers are sincere in their beliefs. They honestly do think they’re doing the right thing by their children. That these beliefs are sincere, however, doesn’t entitle them to respect and forbearance. William Kingdon Clifford begins his classic 1877 essay The Ethics of Belief with a particularly striking thought experiment.

A shipowner was about to send to sea an emigrant-ship. He knew that she was old, and not well built at the first; that she had seen many seas and climes, and often had needed repairs. Doubts had been suggested to him that possibly she was not seaworthy. These doubts preyed upon his mind and made him unhappy; he thought that perhaps he ought to have her thoroughly overhauled and refitted, even though this should put him at great expense. Before the ship sailed, however, he succeeded in overcoming these melancholy reflections.

He said to himself that she had gone safely through so many voyages and weathered so many storms that it was idle to suppose she would not come safely home from this trip also. He would put his trust in Providence, which could hardly fail to protect all these unhappy families that were leaving their fatherland to seek for better times elsewhere. He would dismiss from his mind all ungenerous suspicions about the honesty of builders and contractors.

In such ways he acquired a sincere and comfortable conviction that his vessel was thoroughly safe and seaworthy; he watched her departure with a light heart, and benevolent wishes for the success of the exiles in their strange new home that was to be; and he got his insurance-money when she went down in mid-ocean and told no tales.

Note that the ship owner isn’t lying. He honestly comes to believe his vessel is seaworthy. Yet Clifford argues, “the sincerity of his conviction can in no way help him, because he had no right to believe on such evidence as was before him.”

In the 21st century nobody has the right to believe scientists are wrong about science without having earned that right through actually doing science. Real science, mind you, not untrained armchair speculation and frenetic googling. That applies as much to vaccination as it does to climate change, GMOs and everything else.

We can disagree about the policy responses to the science in these cases. We can also disagree about what financial consequences should flow from removing non-medical exemptions for vaccination refusers. But removing such exemptions sends a powerful signal.

We are not obliged to respect harmful decisions grounded in unearned beliefs, particularly not when this harms children and the wider community.

BY Pat Stokes

Dr Patrick Stokes is a senior lecturer in Philosophy at Deakin University. Follow him on Twitter – @patstokes.

There’s no good reason to keep women off the front lines

Opinion + Analysis

Business + Leadership, Relationships

BY Nikki Coleman The Ethics Centre 14 OCT 2015

The US military may finally be coming around on the question of women on the front lines.

In a confidential briefing on September 30, military leaders presented their recommendations on having women on the front lines to the House Armed Services Subcommittee on Military Personnel, and Defense Secretary Ashton Carter.

In the 1940s, the US military faced similar debates regarding black service personnel. Arguments regarding unit cohesion and operational capability were the most prominent against integration of white and black personnel. With the power of hindsight, we can see those arguments for what they were – scare tactics intended to keep the military segregated.

The same arguments have returned today. At the command of Secretary Panetta, the US Army undertook a two-year study to develop gender-neutral standards for specialist roles currently closed to women. The success of this standardised approach was demonstrated recently when two women graduated from the Army Ranger School.

There have been vocal critics of allowing women to attempt the Army Ranger School course. Some claim the standards were lowered for these women. The Army denied this at the Ranger School graduation ceremony. The claim was rebutted again by the Chief of Army Public Affairs, who described the allegations as “pure fiction”.

These allegations are unlikely to settle down any time soon. A Congressman has requested service records of the women who graduated to investigate “serious allegations” of bias and the lowering of standards by Ranger School instructors.

This incident reveals the depth of scepticism regarding women’s ability to serve alongside men within some quarters. The standardised approach has dismissed the issue of operational capacity – the other arguments against female service are equally weak.

The potential for women to be captured and raped has been raised by opponents of women serving in combat units. This discussion ignores the sad reality – women in defence are much more likely to be sexually assaulted by their own troops than by the enemy. The 2013 Department of Defense report into sexual assault found that while women make up 14.5% of the US military, they make up 86% of sexual assault victims.

Of the 301 reports of sexual assault in combat zones made to the Department of Defense in 2013, only 12 concerned assaults by foreign military personnel. The vast majority of sexual abuse victims in combat areas were abused by their own comrades, not the enemy.

Sexual abuse in the military has been a problem for decades. Why would it increase if we allowed women in combat? Rape of captured soldiers has also not been limited to women. Many men have also been sexually assaulted on capture. Sexual assault in this sphere is not about sexual desire or gratification – it’s about power and denigration of your enemy.

The second argument suggests women in combat units will affect unit cohesion. First, “the boys” won’t be able to be themselves. And second, if a woman is injured in battle men will be unable to focus on the mission and instead will be driven to protect their female colleagues.

The first argument raises a question about military culture. Why is behaviour considered inappropriate around women tolerated at all? The second argument is insulting to currently serving soldiers, whose professionalism and commitment to the mission are questioned.

Soldiers overcome a range of powerful instincts in a firefight – including protecting their own lives. To suggest soldiers would ignore the mission in favour of some other goal undervalues the extent of their military professionalism.

There is also an elephant in the room. Women have been serving in combat roles for years – as pilots, on ships, as interpreters and in female engagement teams. For these women a decision regarding the position of women in combat is irrelevant – they are already on the front lines.

The Australian situation sits in stark contrast to that of the US. Gay and lesbian members have served openly for a decade, women have been fully integrated into combat units since 2013, and the Australian Defence Force now actively recruits transgender personnel.

Australia has been able to integrate women, gay, lesbian and transgender soldiers into combat units without affecting operational capability.

Hopefully the US Defense Secretary will follow the advice of his Chiefs of Staff and the leadership of the ADF. He should allow military personnel to serve in all roles in the military according to universal standards rather than chromosomes or genitalia.

BY Nikki Coleman

Nikki Coleman is a PhD Candidate and researcher with the Australian Centre for the Study of Armed Conflict and Society at UNSW Canberra. In her free time she is the “Canberra Mum” to many officer cadets and midshipmen at ADFA.

HSC exams matter – but not for the reasons you think

Opinion + Analysis

Health + Wellbeing

BY The Ethics Centre 12 OCT 2015

Every year at around the time of the Higher School Certificate (HSC) exams, the same messages appear. The HSC isn’t everything – don’t stress! One year the then NSW premier Mike Baird weighed in with, “Life isn’t defined by your exams. It begins after they have finished.”

I remember getting those messages when I did the HSC but they seemed hard to swallow at the time. I’d spent 13 years being told of the importance of school marks and HSC results. High achievers earned awards. The importance of ‘rankings’ put me in competition with my peers and I measured success in Band Sixes.

If we’re going to convince students not to stress too much about results we need to do more than tell them to relax. Years of conditioning make students believe the HSC and Australian Tertiary Admission Rank (ATAR) scores have the power to determine their future. For some, the numbers can determine their self-worth.

What we need to do is explain the moral place of education in our lives and how the HSC sits in relation to it.

Why do we worry about academic achievement at all?

One reason is because we recognise knowledge and learning as being beneficial to society. Prime minister Malcolm Turnbull talks to anyone who will listen about the importance of an agile innovation economy. Such an economy relies on creative thinking and education.

Sadly, we live in a world where not every person can receive an education. Still, if we’re wise, we can make sure that every person can benefit from education. As French philosopher Michel Foucault wrote, “knowledge is power”.

Knowledge controlled by a privileged few is a recipe for dictatorship. Used wisely it can provide the power to make our imperfect world a little bit better.

We don’t just value knowledge because it’s useful. Not all learning leads to new inventions, helps the poor or changes the world. That doesn’t mean it’s pointless. Knowledge is ‘intrinsically good’. Learning for learning’s sake is a completely reasonable and very human activity.

Excelling in academic life also takes more than just knowledge or intellect. It requires a curious mind, perseverance and open-mindedness, among other things.

In this sense, the HSC results do matter. They show the extent to which students have developed certain virtues of mind and character.

What can this tell us about the HSC? A few things. First, the praise we heap on high achievers is not only about the number itself but about the virtues demonstrated in achieving the mark. These virtues aren’t unique to students who score high marks.

Some high-achieving students might be getting by on natural ability rather than any special virtue. This means the final result matters less than the way it was achieved.

Second, the HSC is an opportunity to reflect on the huge amount of knowledge gained over years of education. It’s a chance for students to be proud of what they’ve learned. But that’s all it is. The HSC tells students what they have learned up until this point. It does not predict the future.

Many people who have struggled with exams have flourished and many who have excelled in school have struggled in the real world. The markers of success in school, work and life cannot be fully represented in a single number – much less the worth or value of a person.

Finally, excellence in academic life takes more than individual virtue. It takes a decent slice of luck and help from others. Individual academic achievement is the product of collective effort. Teachers, parents, friends and factors beyond our control help determine both our success and our failure. This provides a dash of both perspective and humility.

HSC marks and ATAR scores try to represent a range of complex processes in a useful and efficient way. But it is those processes that really matter – not the final number itself.
