Should we abolish the institution of marriage?

Opinion + Analysis, Relationships

BY Anna Goodman 12 OCT 2022

As it stands, in the western world, marriage is the legal union between two people who are typically romantic or sexual partners. Some philosophers are now revisiting the institution of marriage and asking what can be done to reform it, and if it should exist at all.

The institution of marriage has been around for over 4,000 years. Historians first see instances of marriages popping up around 2350 BCE in Mesopotamia, or modern-day Iraq. Marriage turned a woman into a man’s object whose primary purpose was producing legitimate offspring.

Throughout the following centuries and millennia, the institution of marriage evolved. As the Roman Catholic church grew in power throughout the 6th, 7th, and 8th centuries, marriage became widely accepted as a sacrament, or a ceremony that imparted divine grace on two people. During the Middle Ages, as land ownership became an important part of wealth and status, marriage was about securing male heirs to pass down wealth and increasing family status by having a daughter marry a land-owning man.

“The property-like status of women was evident in Western societies like Rome and Greece, where wives were taken solely for the purpose of bearing legitimate children and, in most cases, were treated like dependents and confined to activities such as caring for children, cooking, and keeping house.”

The thinking that a marriage should be about love really only began in the 1500s, during a period now known as the Renaissance. Not much improved with regard to equality for women, but the movement did put forth the idea that two parties should enter a marriage consensually. Instead of women being viewed as property to be bought and sold with a dowry, women had more autonomy which elevated their social status. Into the 1700s, while the working class were essentially free to marry who they wanted (as long as they married people in the same social class), girls born into aristocratic families were betrothed as infants and married as teenagers in financial alliances between families.

But marriage doesn’t look like this anymore, right? It’s easy to forget that interracial marriage was illegal in the United States until 1967 and until 1985 in South Africa. Marital rape only became a crime in all American states in 1993. Australia only legalised gay marriage at the end of 2017, making it one of 31 countries to do so. In the 4,000 years of marriage, most legalised marriage equality has happened in the last 50 years.

The nasty history of marriage has prompted some philosophers to ask: is it time to get rid of the institution of marriage? Or, is it possible to reform?

An argument for the abolition of marriage

“Freedom for women cannot be won without the abolition of marriage.”

In the last hundred years, there has been plenty of discourse about where marriage fits into modern life. One notable voice, Sheila Cronan, a feminist activist who participated actively in the second wave feminist movement in America, argued that marriage is comparable to slavery, as women performed free labour in the home and were reliant on their husbands for financial and social protection.

“Attack on such issues as employment discrimination are superfluous; as long as women are working for free in the home we cannot expect our demands for equal pay outside the home to be taken seriously.”

Cronan believed that it would be impossible to achieve true gender equality as long as marriage remained a dominant institution. The comparison of marriage to slavery was hugely controversial, though, because white women had significantly better living conditions and security than slaves. In a modern, western context, Cronan’s article may seem like a bit of an overstatement on the woes of marriage.

Is there an alternative to abolition?

Another contribution to the philosophy of marriage is the work of Elizabeth Brake. Instead of abolishing marriage, she proposes “minimal marriage,” a theory set out in her 2010 paper What Political Liberalism Implies for Marriage Law. It holds that any people should be allowed to get married and enjoy all the legal rights that come with it, regardless of the kind of relationship they are in or the number of people in it.

Brake argues that when the state allows some kinds of marriages but not other kinds, the state is asserting one kind of relationship as more morally acceptable than another. Marriage in the western world provides a number of legal benefits: visitation rights in hospitals if someone gets sick, fewer complications around joint bank accounts, and the right to inherit the estate if a partner dies, to name a few. It is also often viewed as a better or superior kind of relationship, so those who are not allowed to get married are seen as being in an inferior kind of relationship.

Take for example the case of two elderly women who are close friends and have lived together for the last 30 years. If one of the elderly women falls ill and needs to go to hospital, her friend might not be able to visit her because she is not a spouse or next-of-kin. If she passes away and her friend is not in her will, then the friend will have no say over what happens to her estate. The reason these friends were not married was that they felt no romantic attraction to each other. But Brake asks us: why should their relationship be seen as less valuable or less important than a romantic one? Why should their caring relationship not be afforded the legal rights of marriage?

Many legal rights are tied to marriage. In the US, there are over 1000 federal “statutory provisions,” or clauses written in the law, in which marital status is a factor in determining who gets a benefit, privilege, or right. Brake argues that reforming marriage to be “minimal” is the best way to ensure that as many people as possible have these legal rights.

So, what should the future of marriage be?

Many people today will say that the day they got married was one of the best days of their lives. However, just because we have a more positive view of it now does not erase the thousands of years of discriminatory history. In addition, practices such as child marriage and arranged marriages that no longer occur in the western world are still the norm in other parts of the world.

While Cronan presents a strong argument for abolishing marriage and Brake presents a strong argument for its reform, we need to examine the underlying social ills that make marriage so complicated. Additionally, there’s no guarantee that abolishing or reforming marriage will eliminate the sexism, racism, and homophobia that create the conditions for marriage to be so discriminatory in the first place. Marriage may not be creating inequality as much as it is a symptom of inequality. While the question of what to do with marriage is worth interrogating, it’s important to consider the larger role it might play in creating social change and working towards equality.

BY Anna Goodman

Anna is a graduate of Princeton University, majoring in philosophy. She currently works in consulting, and continues to enjoy reading and writing about philosophical ideas in her free time.

I’d like to talk to you: ‘The Rehearsal’ and the impossibility of planning for the right thing

Opinion + Analysis, Relationships, Society + Culture

BY Joseph Earp 6 OCT 2022

Nathan Fielder’s The Rehearsal, perhaps one of the slipperiest works of modern television, aims to solve a very complex, deeply recurrent problem: how do we navigate our interpersonal relations, which are ever-changing, and filled with opportunities to let people down and harm those we love?

In the show, which constantly blends the real with the fake, the documentary with the theatrical, the off-kilter comedian Nathan Fielder’s solution is supposedly simple: he finds people who are preparing to have difficult conversations with friends and loved ones, and gives them the opportunity to rehearse these encounters ahead of time.

The idea behind this ridiculous, though oddly logical, practice is this: if these people have already rehearsed an uncomfortable exchange with a loved one, then they can account for every variable. They can polish their approach. When conversations branch off into different directions, they will have accounted for that branching already, leaving them to always choose the best, most impactful response.

To aid his mentees in this practice, Fielder uses an ever-escalating series of interventions. He creates dialogue flow trees, in which conversations can be unveiled in their full myriad of possibilities. He stages strange obstructions, ranging from fake babies to simulated drug overdoses. He takes the joyous chaos of being what Jean-Paul Sartre called “a thing in a world” – an agent who is perceived by other agents, and whose actions affect them – and he tries to simplify it.

Saying The Rehearsal is definitively “about” anything is a mistake – it’s too ever-changing, too messy, for that. But certainly, in its focus on trying to do the right thing by simplifying a complex world so that it might be predicted, the show can serve as a model of the pitfalls of trying to rationalise and generalise. It is a warning to those philosophers from the analytic tradition who reduce a world that is precisely so joyous and beautiful because it is so chaotic. So complex. And so filled with the potential for harm.

Fielder’s methods for helping people confront their own mistruths, find love, or fit better into their communities, are guided by the principle of a kind of lopsided rationality. The methods are laughable, of course – Fielder is a comedian. But they follow a strict, internally coherent form of thought.

In essence, what Fielder tries to do is generalise. He takes the nuances of life’s difficult conversations, and he strips them down to their component parts – maps them out on a board, uses actors to play them out ahead of time.

For instance, in the show’s first episode, Fielder recruits Kor, a competitive and trivia-obsessed young man who is preparing to tell his close friends that he has lied for years about getting a master’s degree. Fielder hires an actress to play Kor’s most abrasive friend, gets that actress to uncover as much information as possible about the real person she is stepping into the shoes of, and then puts Kor and this performer in a set that precisely replicates the dimensions of the bar where the actual conversation will go down.

The method – reduce. Simplify. Abstract. And use that generalised version of a real-life situation to guide how the actual situation will play out. This kind of ethical reasoning is highly tempting to us. We often find ourselves drawn to it, as we move through our lives.

Sure, we might not go to the lengths that Fielder does in The Rehearsal. But we do practise tough conversations in the shower with ourselves, ahead of time. We draft and re-draft text messages, and base them on how we imagine the person we send them to will respond. In essence, we use our “rationality” and “reason” to help us move through the world, drawing on past experiences to help us navigate future ones.

Trivia-obsessed Kor, in fact, is a specific example of this. He is most worried about revealing his deception to his abrasive friend because of how she’s behaved in the past. He reasons that because he has seen her blow up at others, getting angry at the drop of a hat, she’ll do the same in the future, and more specifically, do it to him. He starts with a real-world experience – incidents of her temper – and then generalises them to a rule – she will always get angry – using his rationality to try to deduce the future, and thus the best action.

But what this kind of rationality does not take into account is the way that human beings shift and change; the way that they surprise us. How often have we prepared for an outcome that hasn’t come to light? Stressed about confrontations that turn out not to be confrontations at all?

Rather than generalising away from the inherent changeability of those we love, or indeed any of those who we surround ourselves with, we should instead embrace what the philosopher Jurgen Habermas described as “communicative rationality.”

For Habermas, our rational faculties shouldn’t generalise us away from the world – they shouldn’t isolate us. Instead, they should be part of a process of “achieving consensus”, as Habermas put it. We make decisions with other people. While staying in contact with them.

This means, rather than being a witness to the world – viewing it and then reviewing it, and using what we see and learn to guide our ethics – we are an active participant in it. On this model, our thoughts, desires, and ethical behaviours are essentially collaborative. They are grounded in the real world, and the people around us.

Thus, on Habermas’ view, we never stop discussing, talking, engaging. We don’t do as Kor does – using his rationality to effectively step himself away from his abrasive friend, halting in the process of communicating with her. And we don’t do as Fielder does – creating an artificial replica of the world, rather than just living in the actual world.

When we take the Fielder method, instead of adopting Habermas’ position of making everything communicative, we lose that which makes the world what it is: its messiness, its changeability, its dynamic and fluid nature.

There is nothing logically wrong, broadly speaking, about the kind of rationality that involves a step away from the world – that leads us to run through possible outcomes in our head with ourselves. Difficult conversations do move through different points; do branch off. So it makes some kind of sense to imagine that we should be able to predict them. The error here is not one in internal consistency. The error is taking a step backwards from those around us when trying to work out what to do, rather than taking a step forward.

The joke of The Rehearsal is precisely that this internally consistent form of rationality is remarkably, laughably devoid of life. It’s cold. Alien. It aims to solve real world problems, but it does that by turning to a printed board of branching lines of dialogue, instead of other human beings.

And it’s not even useful. As it turns out, Kor, who is highly nervous about the encounter with his abrasive friend, has little to worry about. When he confronts her, rather than the actress he has been rehearsing with, she is largely unfussed. She doesn’t mind that Kor has misrepresented himself. She expresses understanding for his duplicity. It is all pretty chill. Laughably so, in fact.

What Kor shows us is the importance of remaining in the world. That means we might fail those around us – that we might do the wrong thing. But that’s better than hiding away in a world of Fielder’s whiteboards. Indeed, our failures tell us that we’re human, bungling from one awful mistake to another, trying, and then failing, and then, beautifully, trying again. Guided always by people. Living always in communities. Staying blissfully, painfully connected.

BY Joseph Earp

Joseph Earp is a poet, journalist and philosophy student. He is currently undertaking his PhD at the University of Sydney, studying the work of David Hume.

How to improve your organisation’s ethical decision-making

Opinion + Analysis, Business + Leadership

BY The Ethics Centre 4 OCT 2022

Are you confident in your organisation’s ability to negotiate difficult ethical terrain? The Ethics Centre’s Decision Lab is a robust process that can help expand your ethical decision-making capability.

Imagine you run a not-for-profit that helps people experiencing problem gambling. You are approached by a high-net-worth individual connected to the gambling industry who is interested in making a substantial donation to your organisation. Funding is always hard to come by and you know you could reach many more people in need with this money. Do you accept the donation?

Or imagine you are the CEO of a publicly listed consulting firm that is deciding whether to take on a new client in the fossil fuel industry. You suspect it would be unpopular with younger members of your staff and some of your other clients, but it’s a very lucrative contract and it would significantly boost your bottom line ahead of reporting season. Do you take on the client?

What if you sat on the board of a major corporation that is planning to make a public statement urging the government to adopt a new progressive social policy? The proposed policy does not impact your business directly, but a majority of your staff support it. However, you personally have misgivings about the policy and suspect some other employees do as well. Do you put your name on the public statement?

What would you do in each of these situations? If you do have an answer, could you explain how you arrived at your decision? Could you defend it in public? Could you defend it on the front page of the newspaper?

Dealing with ethically-charged situations like these is never easy. Not only do our decisions have a material impact on multiple stakeholders, but we also need to be able to communicate and justify them. This is complicated by the fact that many of the influences on our ethical decision-making are implicit, meaning we risk making decisions based on unexamined values or we might struggle to explain how we arrived at a particular conclusion.

This is why The Ethics Centre has developed Decision Lab, a comprehensive ethical decision-making toolkit that surfaces the implicit elements in ethical decision-making and provides a robust process to navigate the ethical dimensions of critical decisions for organisations big and small.

Decision Lab

The Decision Lab process begins by clarifying the organisation’s core purpose, values and principles. The purpose includes the organisation’s overall mission, which is what it is aiming to achieve, and its vision, which is what the world looks like when it has achieved it. The values are what the organisation believes to be good and the principles are the guardrails for decision-making.

Even organisations that have published mission statements and codes of conduct will find that employees will have different understandings of purpose, values and principles, and these differences can influence ethical decision-making in a profound way. By bringing these perspectives to the surface, the Decision Lab process enables the diversity to be recognised and engaged with constructively rather than leaving it implicit and having different individuals pulling in different directions.

The Decision Lab also explores the process of decision-making, testing critical assumptions and taking multiple perspectives into account to ensure no key elements are overlooked. Take the hypothetical above about the not-for-profit. It would be easy to focus on the issue of whether it is hypocritical to accept money from those associated with gambling in order to fight problem gambling. But it is also crucial to consider the impact on other stakeholders, such as the beneficiaries of the not-for-profit’s services, their families and communities, or consider whether the perception of hypocrisy might affect future fundraising.

Shadow values

The process also acknowledges common biases and influences that can derail decision-making. A common one is the organisation’s shadow values: the hidden, uncodified norms and expectations, often promoted outside of conscious awareness, that can influence how the entire organisation operates. For example, many organisations explicitly subscribe to values such as integrity, but the shadow values might promote loyalty, which could prevent an employee from calling out a senior manager who is misrepresenting the work being done for a client.

The Decision Lab then provides a checklist for decisions that can be used as a ‘no regrets test,’ ensuring that all relevant elements have been considered. For example, should the consulting firm reject the contract with the fossil fuel company, it could suffer a backlash from shareholders, who argue that the board has a responsibility to create value for shareholders within the law rather than pursue political agendas. The Decision Lab checklist would ensure that such eventualities are considered before the decision is made.

The decision-making process is then stress tested against a variety of hypothetical scenarios, such as those above, that are tailored to the organisation’s mission and circumstances. This allows participants to put ethical decision-making into practice, engage in constructive deliberation and learn how to evaluate options and develop implementation plans as a team.

On completion of the Decision Lab, The Ethics Centre provides a customised decision-making framework that is tailored to the organisation and its needs for future reference.

Open book

The Decision Lab is a powerful and practical tool for any organisation looking to improve its ethical decision-making. It also has other benefits, such as increasing awareness of the lived organisational culture, including the beliefs, attitudes and practices shared amongst its people. It identifies how the current culture and systems are enabling or constraining the realisation of the organisation’s goals.

By unifying employees around a common purpose and encouraging values-aligned behaviour, it ensures that the entire organisation is working as a unit towards a shared vision. The deliberative process also helps to build a climate of trust within the organisation, which aids in avoiding and resolving conflicts, as well as promoting good decision-making.

Individuals and organisations are constantly making decisions that have wide-reaching impacts. The question is: are you doing it well? The Decision Lab can ensure that your organisation’s decision-making is done in an open, robust and constructive manner, producing more ethical decisions and contributing to a positive work culture.

The Ethics Centre is a thought leader in assessing organisational cultural health and building leadership capability to make good ethical decisions. To arrange a confidential conversation contact the team at consulting@ethics.org.au. Or visit our consulting page to learn more.

BY The Ethics Centre

The Ethics Centre is a not-for-profit organisation developing innovative programs, services and experiences, designed to bring ethics to the centre of professional and personal life.

Big tech knows too much about us. Here’s why Australia is in the perfect position to change that

Opinion + Analysis, Business + Leadership, Science + Technology

BY The Ethics Alliance, Emma Elsworthy 30 SEP 2022

The Consumer Data Right will bring an era of “commercial morality”, experts say.

Who are you? The question brings to mind a list of identity pillars – gender, job title, city, political leaning or perhaps a zany descriptor like “caffeine enthusiast!”. But who does big tech think you are?

Most of the time, we live in digital ignorance of the depth of data being scraped from everything from our Google searches to our Apple Pay purchases. Occasionally, however, we become only too aware of our own surveillance – looking at cute dog videos, for instance, and suddenly seeing ads for designer dog leashes in our Facebook feed.

It gets darker. In the wake of the US Supreme Court overturning Roe v Wade, American women were discouraged from tracking their periods using an app on their smartphones. Big tech, pundits warn, could know when you’re pregnant – or more chillingly, whether you remained so.

In July, a report from Australian-US cybersecurity firm Internet 2.0 found popular youth-focused social media app TikTok could see user contact lists, access calendars, scan hard drives (including external ones) and geolocate our phones – and therefore us – on an hourly basis.

It’s “overly intrusive” data harvesting, the report found, considering “the application can and will run successfully without any of this data being gathered”.

Android users are far more exposed than Apple users because iOS significantly limits what information an app can gather. Apple has what is known as a “justification system”, meaning if an app developer wants access to something, it has to justify the requirement before Apple will permit it.

Should we be worried about TikTok’s access to our inner lives? With simmering geopolitical tensions between Australia and China – perhaps. The app is owned by ByteDance, a Beijing-based internet company, and the report found that “Chinese authorities can actually access device data”.

Professor of Business Information Systems at the University of Sydney Uri Gal writes that “TikTok’s data can also be used to compile detailed user profiles of Australians at scale”.

“Given its large and young Australian user base, it is quite likely that our country’s future prime minister and cabinet members are being surveilled and profiled by China,” he warned.

Australia is in a strong position to take action on the better protection of consumer data. Our world-leading Consumer Data Right (CDR) is being rolled out across Australia’s banking, energy and telecommunication sectors, placing the right to know about us back into our own hands.

Could our consumer rights expand beyond privacy rights to include specific economic rights too? Almost certainly, under CDR.

For instance, energy consumers would no longer have to wade through confusing fine print to work out whether they’re getting the best (and cheapest) electricity deal – with a click of a button they’d have their energy usage data sent to a new potential supplier, and the supplier would come back with a comparison.

That means no endless forms of information required upfront by a new provider, no lengthy phone calls spent cancelling one’s current provider, and crucially, no last-minute left-field discounts from a provider to keep you as a customer.

“Within five years, it should have transformed commerce, promoted competition in many sectors, and simplified daily life,” according to The University of NSW’s Ross P Buckley and Natalia Jevglevskaja.

“Thirty years ago, most Australian businesses thought charging current customers more than new customers was unfair and the law reflected this – such differential pricing was illegal,” the pair continued.

“Today those standards of behaviour seem to have fallen away and this is reflected in more relaxed consumer laws. In many contexts, CDR should reinstitute a commercial morality, a basic fairness, that modern business practices have set aside.”

A rethink of what it means to operate with transparency is what motivates fintech Flare, which aims at transforming the way Australians earn and engage in the workplace with superannuation, banking, and HR services.

Flare’s Head of Strategy Harry Godber was one of the original architects of CDR’s launch, which took place during his time as a senior government advisor to Liberal prime ministers Malcolm Turnbull and Scott Morrison.

“[CDR] is designed to get rid of those barriers, get rid of the information asymmetry and allow you to have as much information about your banking products as someone else in the market as your bank has about you,” Godber said.

It’s a great equaliser, he continues, in that data will no longer separate the “haves and the have-nots” in the consumer world – essentially, financial literacy won’t ensure a consumer gets a better deal on products.

“That is a huge step forward when it comes to distributing financial products in an ethical way,” he continued.

“Because essentially it means if all data is equal, if everybody has access to every financial institution’s open product data and knows exactly how they will be treated then acquiring a customer suddenly becomes a matter of having good products, and very little else.”

BY The Ethics Alliance

The Ethics Alliance is a community of organisations sharing insights and learning together, to find a better way of doing business. The Alliance is an initiative of The Ethics Centre.

BY Emma Elsworthy

Before joining Crikey in 2021 as a journalist and newsletter editor, Emma was a breaking news reporter in the ABC’s Sydney newsroom, a journalist for BBC Australia, and a journalist within Fairfax Media’s regional network. She was part of a team awarded a Walkley for coverage of the 2019-2020 bushfire crisis, and won the Australian Press Council prize in 2013.

5 things we learnt from The Festival of Dangerous Ideas 2022

Opinion + Analysis, Relationships, Science + Technology, Society + Culture

BY The Ethics Centre 20 SEP 2022

Crime, culture, contempt and change – this year our Festival of Dangerous Ideas speakers covered some of the dangerous issues, dilemmas and ideas of our time.

Here are 5 things we learnt from FODI22:

1. Humans are key to combating misinformation

Facebook whistleblower Frances Haugen says the world’s biggest social media platform’s slide into a cesspit of fake news, clickbait and shouty trolling was no accident – “Facebook gives the most reach to the most extreme ideas – and we got here through a series of individual decisions made for business reasons.”

While there are design tools that will drive down the spread of misinformation and we can mobilise as customers to put pressure on the companies to implement them, Haugen says the best thing we can do is have humans involved in the decision-making process about where to focus our attention, as AI and computers will automatically opt for the most extreme content that gets the most clicks and eyeballs.

2. We must allow ourselves to be vulnerable

In an impassioned love letter “to the man who bashed me”, poet and gender non-conforming artist Alok teaches us the power of vulnerability, empathy and telling our own stories. “What’s missing in this world is a grief ritual – we carry so much pain inside of us, and we have nowhere to put the pain so we put it in each other.”

The more specific our words are, the more universally we resonate, Alok says: “What we’re looking for as a people is permission – permission not just to tell our stories, but also to exist.”

3. We have to know ourselves better than machines do

Tech columnist and podcaster, Kevin Roose says “we are all different now as a result of our encounters with the internet.” From ‘recommended for you’ pages to personalisation algorithms, every time we pick up our phones, listen to music, watch Netflix, these persuasive features are sitting on the other side of our screens, attempting to change who we are and what we do. Roose says we must push back on handing all control to AI, even if it’s time consuming or makes us feel uncomfortable.

“We need a deeper understanding of the forces that try to manipulate us online – how they work, and how to engage wisely with them is the key not only to maintaining our independence and our sense of selves, but also to our survival as a species.”

4. We can use shame to change behaviour

Shame was described by writer Jess Hill as “the worst feeling a human can possibly have”. The World Without Rape panel discussed the universal theme of shame when it comes to sexual violence, and its use as a method of control.

Instead of it being a weight for victims to bear, historian Joanna Bourke talks about shame as a tool to change perpetrator behaviour. “Rapists have extremely high levels of alcohol abuse and drug addictions because they actually do feel shame… if we have feminists affirming that you ought to feel shame then we can use that to change behaviour.”

5. Reason, science and humanism are the key to human progress

Steven Pinker believes in progress, arguing that the Enlightenment values of reason, science and humanism have transformed the world for the better, liberating billions of people from poverty, toil and conflict and producing a world of unprecedented prosperity, health and safety.

But that doesn’t mean that progress is inevitable. We still face major problems like climate change and nuclear war, as well as the lure of competing belief systems that reject reason, science and humanism. If we remain committed to Enlightenment values, we can solve these problems too. “Progress can continue if we remain committed to reason, science and humanism. But if we don’t, it may not.”

Catch up on select FODI22 sessions, streaming on demand for a limited time only.

Photography by Ken Leanfore

BY The Ethics Centre

The Ethics Centre is a not-for-profit organisation developing innovative programs, services and experiences, designed to bring ethics to the centre of professional and personal life.

Why listening to people we disagree with can expand our worldview

Opinion + Analysis

Relationships

BY Anna Goodman 13 SEP 2022

There will always be people in the world who have different opinions, values, and beliefs from our own. But this shouldn’t stop us from listening to those we know we disagree with, even if we think it’s unlikely that we will change our minds.

During part of the Covid-19 pandemic, I lived with my grandfather in rural Vermont, a small state in the north-east of the US. Being Australian, I had many opportunities to talk to people I would not otherwise have had a chance to meet. Then, the 2020 presidential election between Joe Biden and Donald Trump rolled around, and it was all anyone could talk about.

My grandfather’s carer voted for Trump in 2020. I got to know her quite well – she’s a life-long rural north-easterner with a strong belief in individual self-sufficiency. We talked a lot about the differences between where we came from. Politics always comes up in conversation around an election, so naturally it came up that we would support different presidential candidates.

Most of the media that I consume and the majority of my social circle reinforce my liberal political views. Talking with my grandfather’s carer gave me a different perspective on why someone would vote for Trump. While her reasoning didn’t convince me to change my vote, I came to understand how my life led me to my beliefs on who should be president, and her life led to hers.

These conversations inspired me to think a little more about what we gain when we take the time to listen thoughtfully to people with different views, perspectives and opinions from ours. Here are three reasons (and a few tools) that can help us to gain the full benefit of listening to someone who has different beliefs from ours.

Be curious about reasons: both your own and others’

Our values represent what we believe is good and bad in the world. But it’s uncommon for people to ‘choose’ their values. Instead, we are far more likely to adopt the values that our parents have and the dominant values of the communities we grow up in.

Nevertheless, we hold our values near and dear to our hearts. They form the foundations of our lives and who we are as people. Someone who has different values from us can feel as though they are a world away from us. In reality, it’s likely they just had a different upbringing, had access to different information and abided by different norms.

One tool we can use for finding the reasons behind certain views is to think like a philosopher and ask “why.” The ancient Greek philosopher Socrates is well known for doing this, with what we now call the Socratic method. Essentially, every time a claim is made, we can ask “why” until we get to some root cause or foundational reason.

The Socratic method can be used to interrogate the reasons behind both our own beliefs and the beliefs of others. Another tool was developed by Sakichi Toyoda, the founder of Toyota Industries, who would ask “why” five times in order to get to the root cause of a problem. The same can be done when trying to get to the root of someone else’s beliefs. Asking “why” of our own beliefs and those of people around us (and people who aren’t around us) is an important part of recognising the differences in our experiences, and ultimately helps to paint a clearer picture of how our values and beliefs develop.

Be open to a broader understanding of the world

There is no doubt that we live in an increasingly polarised and divided world. Thanks to the internet, diverse and extreme views can now be easily shared, amplifying voices around the world. Oftentimes, this creates echo chambers that shield us from (and can even villainise) dissenting voices.

On top of this, we are creatures of habit. Our social media algorithms show us things we like, we read the same news sources each morning, and we catch up with our friends, who likely have similar values to us. The ‘other perspective’ is often pushed outside of our world view and can feel distant. It’s hard to understand why someone could have such different beliefs to us.

When I took the time to listen with the intention of understanding, I found that I had significantly more impactful and meaningful conversations. Most of my (limited) knowledge of American politics and sociology comes from a classroom, so it’s theory-based knowledge rather than knowledge grounded in experience. It’s one thing to read statistics and understand a theory in a classroom; it’s an entirely different thing to hear a personal story.

Listening to my grandfather’s carer talk about her experiences added a level of humanity into what I had learnt in a lecture hall. As a result, I have more empathy and understanding for people who have different life stories, and therefore different perspectives from mine. Being empathetic doesn’t mean necessarily changing our views, but rather humanising and understanding the multiple ways people form their understanding of the world.

Knowing when not to listen

I don’t want to take away from how difficult it is to really listen to someone who has fundamentally different beliefs from ours. It can be emotionally draining and it requires the right headspace. It can also be harmful for individuals of marginalised identities to listen to views that discriminate against them, and be told to give those views equal consideration to non-discriminatory views.

The Socratic method can also be useful for determining when not to listen. If a belief is founded on a discriminatory, hateful, or untrue statement, it can help to provide grounds for not listening to a person’s point of view. Philosophers sometimes think about this through the framework of intellectual virtues, or qualities in a person that promote the pursuit of truth and intellectual flourishing. These virtues (such as empathy, integrity, intellectual responsibility and love of truth) can help us to discern good from bad foundational reasons that we might find by asking why.

At the end of the day, if we’re in the right headspace and feeling ready to learn, it’s a worthwhile practice for us to learn to listen and understand the reasons why people hold different views. In turn, we can reflect on our own views, and increase our empathy for those with different world views.

BY Anna Goodman

Anna is a graduate of Princeton University, majoring in philosophy. She currently works in consulting, and continues to enjoy reading and writing about philosophical ideas in her free time.

Ethics Explainer: Critical Race Theory

Explainer

Politics + Human Rights, Relationships

BY The Ethics Centre 12 SEP 2022

Critical Race Theory (CRT) seeks to explain the multitude of ways that race and racism have become embedded in modern societies. The core idea is that we need to look beyond individual acts of racism and make structural changes to prevent and remedy racial discrimination.

History

Despite debates about Critical Race Theory hitting the headlines relatively recently, the theory has been around for over 30 years. It was originally developed in the 1980s by Derrick Bell, a prominent civil rights activist and legal scholar. Bell argued that racial discrimination didn’t just occur because of individual prejudices but also because of systemic forces, including discriminatory laws, regulations and institutional biases in education, welfare and healthcare.

During the 1950s and 1960s in America, there were many legal changes that moved the country towards racial equality. Some of the most significant legal changes include the Supreme Court’s decision in Brown v. Board of Education, which explicitly banned racial apartheid in American schools, the Civil Rights Act of 1964 and the Voting Rights Act of 1965.

These rulings and laws formally criminalised segregation, legalised interracial marriage and reduced restrictions in access to the ballot box that had been commonplace in many parts of America since the 1870s. There was also a concerted effort across education and the media to combat racially discriminatory beliefs and attitudes.

However, legal scholars noticed that in spite of these prominent efforts, racism persisted throughout the country. How could racial equality be legislated by the highest court in America, and yet racial discrimination still occur every day?

Overview

Critical race theory, often shortened to CRT, is an academic framework that was developed out of legal scholarship that wanted to explain how institutions like the law perpetuate racial discrimination. The theory evolved to have an additional focus on how to change structures and institutions to produce a more equitable world. Today, CRT is mostly confined to academia, and while some elements of CRT may inform parts of primary and secondary education, very few schools teach CRT in its full form.

Some of the foundational principles of CRT are:

1. CRT asserts that race is socially constructed. This means that the social and behavioural differences we see between different racialised groups are products of the society that they live in, not something biological or “natural.”

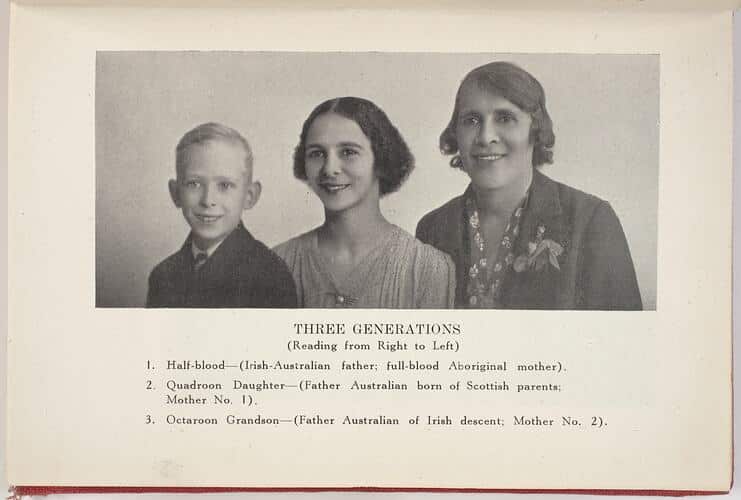

There is a long history of people using science to attempt to prove that there were significant social and psychological differences among people of different racial groups. They claimed these differences justified the poor treatment of people of different ‘inferior races’, or the ‘breeding out’ of certain races. This is how white Australians justified the atrocities committed in the Stolen Generations, such as the attempted ‘breeding out’ of Aboriginal people.

2. Racism is systemic and institutional. Imagine if everyone in the world magically erased all their racial biases. Racism would still exist, because there are systems and institutions that uphold racial discrimination, even if the people within them aren’t necessarily racist.

There are many examples of systemic and institutional racism around the world. They become evident when a system doesn’t have anything explicitly racist or discriminatory about it, but there are still differences in who benefits from that system. One example is the education system: it’s not explicitly racist, but students of different racial backgrounds have different educational outcomes and levels of attainment. In the US, this occurs because public schools are funded by both local and state governments, which means that children going to school in lower socioeconomic areas will be attending schools that receive less funding. Statistically, people of colour are more likely to live in lower socioeconomic areas of America. So, even though the education system isn’t explicitly racist (i.e., treating students of one racial background differently from students of a different racial background), their racial background still impacts their educational outcomes.

3. There is often more than one part of identity that can impact a person’s interaction with systems and institutions in society. Race is just one of many parts of identity that influence how a person will interact with the world. Different identities, including race, gender, sexuality, socioeconomic status, religion and ability, intersect with each other and compound. This is an idea known as “intersectionality.”

Most of the time, it’s not just one part of a person’s identity that is impacting their experiences in the world. Someone who is a Black woman will experience racism differently from a Black man, because gender will impact experience, just like race. A wealthy Chinese-Australian person will have a different experience living in Australia than a working class Chinese-Australian person. Ultimately, CRT tells us that we need to look at race in conjunction with other facets of identity that impact a person’s experience.

Critical Race Theory and racism in Australia

As Australians, it’s easy to point the finger at the US and think “well, at least we aren’t as bad as them.” However, this mentality of only focusing on the worst instances of racism means we often ignore the happenings closer to home. A 2021 survey conducted by the ABC found that 76% of Australians from a non-European background reported experiencing racial discrimination. One-third of all Australians have experienced racism in the workplace and two-thirds of students from non-Anglo backgrounds have experienced racism in school.

In addition to frequent instances of racism, Australia’s history is fraught with racism that is predominantly left out of high school history textbooks. From our early colonial history to racial discrimination during the gold rush in the 1850s to anti-immigration rhetoric today, we don’t need to look far for examples of racial discrimination. A little known part of Australian history is that non-British immigrants from 1901 until the 1960s were told that if they moved to Australia, they had to shed their languages and culture.

Even though CRT originates in the US, it is a useful framework for encouraging a closer analysis of Australia’s racist history and how this has caused the imbalances and inequalities we see today. And once we understand the systemic and institutional forces that promote or sustain racial injustice, we can take measures to correct them to produce more equitable outcomes for all.

If you want to learn more about how race has impacted the world today, here are some good places to start:

- Nell Painter’s Soul Murder and Slavery – her work has focused on the generational psychological impact of the trauma of slavery. Here is an interview where Painter talks a little bit about her work.

- Nikole Hannah-Jones’ 1619 Project, with the New York Times – you can listen to the podcast on Spotify, which has six great episodes on some of the less reported ways that slavery has impacted the functioning of US society.

- Dear White People – a Netflix show that deals with some of the complications of race on a US college campus.

- Ladies in Black – a movie about Sydney c. 1950s, shows many instances of the casual racism towards refugees and immigrants from Europe.

For a deeper dive on Critical Race Theory, Claire G. Coleman presents Words Are Weapons and Sisonke Msimang and Stan Grant present Precious White Lives as part of Festival of Dangerous Ideas 2022. Tickets on sale now.

BY The Ethics Centre

The Ethics Centre is a not-for-profit organisation developing innovative programs, services and experiences, designed to bring ethics to the centre of professional and personal life.

Big Thinker: Steven Pinker

Steven Pinker (1954-present) is an experimental psychologist who is “interested in all aspects of language, mind, and human nature.” In 2021, Academic Influence calculated that he was the second-most influential psychologist in the world in the decade 2010-2020.

Steven Pinker is a Canadian-American cognitive psychologist, psycholinguist, popular science author and public intellectual. He grew up in Montreal, earning his Bachelor’s degree in experimental psychology from McGill University and his PhD from Harvard University. He is currently the Johnstone Family Professor in the Department of Psychology at Harvard.

At the start of his graduate studies, Pinker found himself interested in language, and in particular, language development in children. In 1994, he went on to publish the first of his nine books written for a general audience, entitled The Language Instinct. In the book, Pinker introduces the reader to some of the fundamental parts of language, and argues that language itself is an instinct that makes humans unique.

Language, society and the mind

Try and have a thought without any language. It might be an idea or a memory that appears in your mind with no words or internal monologue. It’s quite difficult to switch off the voice in our heads for more than a few seconds. Pinker researches this connection between language and how our minds work.

Pinker’s books for a general audience cover a wide range of topics and questions that get at the heart of how we learn languages and what this does to our minds. His book The Stuff of Thought (2007) looks at how language shapes the way we think. He begins by suggesting that when we use language, we are doing two things:

- Conveying a message to someone

- Negotiating the social relationship between ourselves and whoever we are speaking to

For example, when a professor stands at the front of a lecture hall and tells her students “may I have your attention, class is about to begin” the professor is doing two things. First, she is alerting her students that class is starting (the message), and second, she is operating within the professor-student hierarchy (the social relationship) in which students should give their attention.

Taking this framework for language, Pinker works to untangle some of the complicated questions around language, such as “Why do so many swear words involve topics like sex, bodily functions or the divine?” and “Why do some children’s names thrive while others fall out of favour?”

Trends of today: is violence declining?

Pinker’s academic interests and research extend beyond language. In 2011, he published The Better Angels of Our Nature, which makes the claim that violence in human societies has generally decreased steadily over time.

“Historical data from past centuries are far less complete, but the existing estimates of death tolls, when calculated as a proportion of the world’s population at the time, show at least nine atrocities before the 20th century (that we know of) which may have been worse than World War II.”

Violence in this case does not just mean war. Pinker also looks at collapsing empires, the slave trade, the murder of native peoples, treatment of children and religious persecution as acts of violence in the world. While it feels like we see and hear about a lot of violence today, he notes that it’s often because these are ‘newsworthy’ events.

“In the case of violence, you never see a reporter with a microphone and a sound truck in front of a high school announcing that the school has not been shot up today, or in an African capital noting that a civil war has not erupted.”

After establishing the trend of declining violence, he looks at historical factors that work to explain why we live in a less violent world. Some of these trends include increasing respect for women, the rise in technological progress, and more application of knowledge and rationality to human affairs.

Current work

Steven Pinker has received a number of prizes for his books, including the William James Book Prize three times, the Los Angeles Times Science Book Prize, the Eleanor Maccoby Book Prize, the Cundill Recognition of Excellence in History Award, and the Plain English International Award. He has also served as an editor and advisor for a variety of scientific, scholarly, media and humanist organisations.

Steven Pinker still spends his time researching a diverse array of topics in psychology, language, historical and recent trends in violence, and neurobiology. One specific area he is currently researching is the role of common knowledge (i.e., things that we know other people know without having to say what we know) in language and other social phenomena.

Steven Pinker presents Enlightenment or Dark Age? as part of Festival of Dangerous Ideas 2022. Tickets on sale now.

BY The Ethics Centre

The Ethics Centre is a not-for-profit organisation developing innovative programs, services and experiences, designed to bring ethics to the centre of professional and personal life.

Breaking news: Why it’s OK to tune out of the news

Opinion + Analysis

Relationships

BY Dr Tim Dean 1 SEP 2022

News is designed to grab your attention but not all of it is relevant to your life. The good news is you needn’t feel guilty for tuning out of news that makes you sad or angry and is out of your power to influence.

Two stories appear on your favourite news website. The first is about an adorable half-tonne walrus named Freya being euthanised in Norway because gormless onlookers refused to heed government advice to keep their distance.

The second covers China’s central bank cutting interest rates to stimulate growth while most other wealthy nations around the world are raising them to fight inflation.

Which of these stories grabs your attention the most? Which are you most likely to click on?

If you answered the first, then you’re not alone. In fact, the ‘popular right now’ lists on most news websites show that stories about rogue wildlife invading metropolitan areas easily outrank stories speculating on China’s economic health.

But which most affects your life?

Even if you happened to be in Norway during the Freya saga, the news would be unlikely to impact your day-to-day goings on (unless it was a warning to steer clear of the beast). On the other hand, if China slips into recession, it could alter the global financial and geopolitical landscape, impacting everything from grocery prices to the prospect of war in Taiwan.

I’m not intending to shame you for clicking on the more sensationalist story. I did. We are only human, and Freya’s story pushes emotional buttons of care for native wildlife as well as outrage directed at the twits whose desire for a selfie caused the death of an innocent animal.

But what are we looking for when we switch on the radio or swipe that phone screen? What really is ‘news’? Through our cumulative clicks on news stories, what are we asking media outlets for? And is what we’re asking for doing us any good?

When we click on a story about horrific crime, a child abduction, a fatal shark attack or one of the countless stories about celebrity dalliances, what does it tell us about the world? Probably not much. In fact, these stories probably do more to distort our understanding of the world than to clarify it.

Horrific crimes do happen, but a lot less frequently than we might think. Child abductions by strangers are incredibly rare (that’s what makes them newsworthy when they happen). Shark attacks do happen, but you’re more likely to be injured driving to the beach than you are in the toothy maw of a cartilaginous fish. And when it comes to celebrities, no-one is surprised that high status individuals living in a wealth and fame bubble behave just like us, only more so.

The deeper impact of news like this is that it normalises an abnormal image of the world, one coloured by our natural fascination with violence, tragedy, mortality, injustice, sexual indiscretion and scandal. Many of these things make the news because they’re exceptional in the modern world; it’s precisely because our society is more peaceful and stable than at just about any point in history that conflict and instability stand out.

But through constant exposure to the exceptional through news headlines, it eventually becomes normal. Studies have shown that Australians consistently overestimate the levels of violent crime in their neighbourhoods, due at least in part to the way crime, conflict and injustice are reported but lawfulness, peace and justice are not.

The torrent of sensational news also comes at an emotional cost, especially when we’re confronted with a daily litany of injustices from around the world. We are naturally inclined to experience outrage when we hear about how women are mistreated in Afghanistan, about civilians being killed in Ukraine or another mass shooting in a school in the United States, and this outrage motivates us to take action to rectify it. But the simple fact is that we have limited power to act on most of the injustices that we encounter in the news. Mass media has expanded our sphere of perception to be global but our sphere of influence remains largely local.

This, in turn, can inspire feelings of disempowerment and hopelessness. And it can encourage us to seek to regain our sense of agency wherever we can. However, it’s far easier to regain a sense of agency, such as by complaining or calling people out on social media, than it is to have power over grand or distant events. Social media can make us feel like we’re doing something, like we’re fighting back against injustice, but much of that is illusory. Often all we’re doing is sharing the injustices around, getting other people outraged and further eroding their sense of agency.

Life is cacophonous. News is supposed to filter out the noise and reveal what is important, relevant and impactful so we can focus our attention on what really matters. But news has descended into its own form of cacophony. We often fall back on our gut to sense what is important, but our gut is far from impartial.

The point is not to stop engaging with the news. It’s not to blame ourselves or others for being human. It’s to remember that the news isn’t always healthy. It isn’t always relevant. It doesn’t always reveal the whole of the world as it really is.

The good news is we do retain some control, and we have some responsibility over what we choose to view, which outlets to follow, which stories to click on, which ones to share. It takes some discipline, but that’s what living ethically means: cultivating the discipline to do what’s right, not just what’s easy.

Sometimes it means choosing not to engage with certain news. We shouldn’t feel guilty about that. We can pick our battles and choose where to invest our emotional energy. If we choose wisely, we can engage with the portions of the world over which we have greater influence and actually change it for the better.

We might still click on that story about a rogue walrus being killed because of inconsiderate onlookers or be amused by the latest celebrity scandal, but we can also remain wise enough to know that these do not reflect the world as it is, only the world as it appears through the imperfect filter of the news.

BY Dr Tim Dean

Dr Tim Dean is Philosopher in Residence at The Ethics Centre and author of How We Became Human: And Why We Need to Change.

Read before you think: 10 dangerous books for FODI22

Opinion + Analysis

Society + Culture

BY The Ethics Centre 29 AUG 2022

Truth, trust, tech, tattoos and taboos – The Festival of Dangerous Ideas returns live to Sydney 17-18 September with big ideas, dicey topics and critical conversations.

From history and science, to art, politics, and economics, FODI holds issues up to the light – challenging, celebrating and debating some of the most complex questions of our times.

In partnership with Gleebooks, these 10 reads from this year’s line-up of thinkers, artists, experts and disruptors will sharpen your mind, put your mettle to the test and help you stay ahead of the discussion:

Beyond the Gender Binary by Alok Vaid-Menon

Speaking from their own experience as a gender non-conforming artist, Alok Vaid-Menon challenges the world to see gender in full colour.

Alok // Live at FODI22 // Beyond the Gender Binary // Sat 17 Sept // 7:15pm

Lies, Damned Lies by Claire G. Coleman

A deeply personal exploration of Australia’s past, present and future, and the stark reality of the ongoing trauma of Australia’s violent colonisation.

Claire G. Coleman // Live at FODI22 // Words are Weapons // Sun 18 Sept // 11am

See What You Made Me Do by Jess Hill

A confronting and deeply researched account uncovering the ways in which abusers exert control in the darkest, and most intimate, ways imaginable.

Jess Hill // Live at FODI22 // World Without Rape // Sun 18 Sept // 2pm

Enlightenment Now by Steven Pinker

Exploring the formidable challenges we face today, Pinker argues that rather than sinking into despair we must treat them as problems we can solve.

Steven Pinker // Live at FODI22 // Enlightenment or a dark age? // Sun 18 Sept // 6pm

Futureproof: 9 rules for humans in the age of automation by Kevin Roose

A hopeful, pragmatic vision for how we can thrive in the age of AI and automation.

Kevin Roose // Live at FODI22 // Caught in a Web // Sat 17 Sept // 3pm

Rebel with a Cause by Jacqui Lambie

The Senator’s memoir is as fascinating, honest, surprising and headline-grabbing as the woman herself.

Jacqui Lambie // Live at FODI22 // On Blowing Things Up // Sat 17 Sept // 11am

The Uncaged Sky by Kylie Moore-Gilbert

The extraordinary true story of Moore-Gilbert’s fight to survive 804 days imprisoned in Iran, exploring resilience, solidarity and what it means to be free.

Kylie Moore-Gilbert // Live at FODI22 // Expendable Australians // Sat 17 Sept // 4pm

Quarterly Essay 87: The Ethics and Politics of Public Debate by Waleed Aly & Scott Stephens

In this edition of Quarterly Essay, Aly and Stephens explore why public debate is increasingly polarised – and what we can do about it.

Waleed Aly & Scott Stephens present a special edition of The Minefield live at FODI22 // Contempt is Corroding Democracy // Sun 18 Sept // 3pm

Strongmen by Ruth Ben-Ghiat

A fierce and perceptive history, and a vital step in understanding how to combat the forces which seek to derail democracy and seize our rights.

Ruth Ben-Ghiat // Live at FODI22 // Return of the Strongman // Sat 17 Sept // 5pm

When America Stopped Being Great by Nick Bryant

The history of Trump’s rise is also a history of America’s fall – not only are we witnessing America’s post-millennial decline, but also the country’s disintegration.

Nick Bryant // Live at FODI22 // American Decadence // Sun 18 Sept // 12pm

These titles, plus more, will be available at the FODI Dangerous Books popup, running 10am-8pm across 17-18 September at Carriageworks, Sydney. Check out the full FODI program at festivalofdangerousideas.com

Ethics in your inbox.

Get the latest inspiration, intelligence, events & more.

By signing up you agree to our privacy policy

You might be interested in…

Opinion + Analysis

Business + Leadership, Society + Culture

The Ethics Centre: A look back on the highlights of 2018

Opinion + Analysis

Society + Culture, Climate + Environment

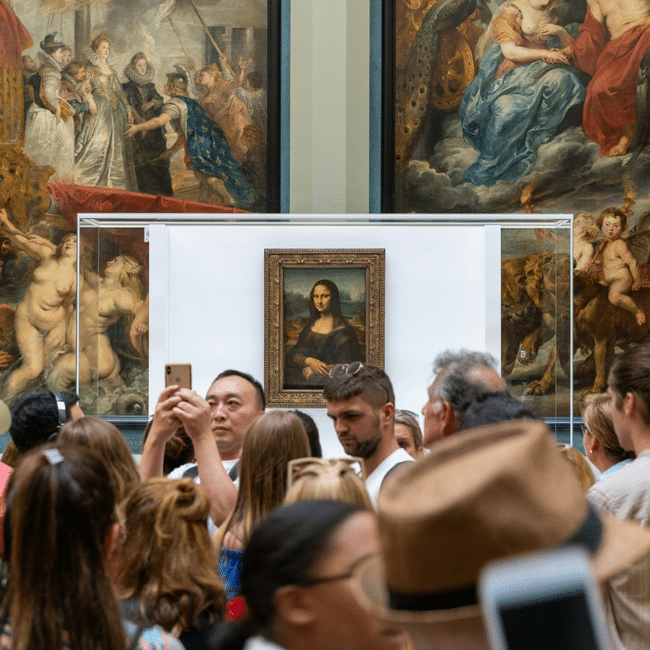

Who’s to blame for overtourism?

Opinion + Analysis

Society + Culture

Ask an ethicist: Is it OK to steal during a cost of living crisis?

Explainer

Society + Culture, Politics + Human Rights