How to build good technology

BY Matthew Beard 2 MAY 2019

Dr Matthew Beard explains the key principles to guide the development of ethical technology at the Atlassian 2019 conference in Las Vegas.

Find out why technology designers have a moral responsibility to design ethically, the unintended ethical consequences of designs such as Pokemon Go, and the seven guiding principles designers need to consider when building new technology.

Whether editing a genome, building a driverless car or writing a social media algorithm, Dr Beard says these principles offer the guidance and tools to do so ethically.

Download ‘Ethical By Design: Principles For Good Technology’

BY Matthew Beard

Matt is a moral philosopher with a background in applied and military ethics. In 2016, Matt won the Australasian Association of Philosophy prize for media engagement. Formerly a fellow at The Ethics Centre, Matt is currently host on ABC’s Short & Curly podcast and the Vincent Fairfax Fellowship Program Director.

Why ethics matters for autonomous cars

BY The Ethics Centre 14 APR 2019

Whether a car is driven by a human or a machine, the choices to be made may have fatal consequences for people using the vehicle or others who are within its reach.

A self-driving car must play dual roles – that of the driver and of the vehicle. As such, there is a ‘de-coupling’ of the factors of responsibility that would normally link a human actor to the actions of a machine under his or her control. That is, the decision to act and the action itself are both carried out by the vehicle.

Autonomous systems are designed to make choices without regard to the personal preferences of the human beings who would normally exercise control over decision-making.

Given this, people are naturally invested in understanding how their best interests will be assessed by such a machine (or at least the algorithms that shape – if not determine – its behaviour).

In-built ethics from the ground up

There is a growing demand that the designers, manufacturers and marketers of autonomous vehicles embed ethics into the core design – and then ensure that they are not weakened or neutralised by subsequent owners.

We can accept that humans make stupid decisions all the time, but we hold autonomous systems to a higher standard.

This is easier said than done – especially when one understands that autonomous vehicles are unlikely ever to be entirely self-sufficient. For example, autonomous vehicles will often be integrated into a network (e.g. geospatial positioning systems) that complements their integrated, onboard systems.

A complicated problem

This will exacerbate the difficulty of assigning responsibility in an already complex network of interdependencies.

If there is a failure, will the fault lie with the designer of the hardware, or the software, or the system architecture…or some combination of these and others? What standard of care will count as being sufficient when the actions of each part affect the others and the whole?

This suggests that each design element needs to be informed by the same ethical principles – so as to ensure as much ethical integrity as possible. There is also a need to ensure that human beings are not reduced to the status of being mere ‘network’ elements.

What we mean by this is to ensure the complexity of human interests is not simply weighed in the balance by an expert system that can never really feel the moral weight of the decisions it must make.

For more insights on ethical technology, make sure you download our ‘Ethical by Design’ guide, where we take a detailed look at the principles companies need to consider when designing ethical technology.

BY The Ethics Centre

The Ethics Centre is a not-for-profit organisation developing innovative programs, services and experiences, designed to bring ethics to the centre of professional and personal life.

The Ethics of In Vitro Fertilization (IVF)

BY The Ethics Centre 8 APR 2019

To understand the ethics of IVF (In vitro fertilisation) we must first consider the ethical status of an embryo.

This is because there is an important distinction to be made between when a ‘human life’ begins and when a ‘person’ begins.

The former (‘human life’) is a biological question – and our best understanding is that human life begins when the human egg is fertilised by sperm or otherwise stimulated to cause cell division to begin.

The latter is an ethical question – as the concept of ‘person’ relates to a being capable of bearing the full range of moral rights and responsibilities.

There are a range of other ethical issues IVF gives rise to:

- the quality of consent obtained from the parties

- the motivation of the parents

- the uses and implications of pre-implantation genetic diagnosis

- the permissibility of sex-selection (or the choice of embryos for other traits)

- the storage and fate of surplus embryos.

For most of human history, it was held that a human only became a person after birth. Then, as the science of embryology advanced, it was argued that personhood arose at the moment of conception – a view that made sense given the knowledge of the time.

However, more recent advances in embryology have shown that there is a period (of up to about 14 days after conception) during which it is impossible to ascribe identity to an embryo as the cells lack differentiation.

Given this, even the most conservative ethical positions (such as those grounded in religious conviction) should not disallow the creation of an embryo (and even its possible destruction if surplus to the parents’ needs) within the first 14-day window.

Let’s further explore the grounds of some more common objections. Some people object to the artificial creation of a life that would not be possible if left entirely to nature. Or they might object on the grounds that ‘natural selection’ should be left to do its work. Others object to conception being placed in the hands of mortals (rather than left to God or some other supernatural being).

When addressing these objections, it’s important to draw attention to existing moral values and principles. For example, human beings regularly intervene with natural causes – especially in the realm of medicine – by performing surgery, administering pharmaceuticals and applying other medical technologies.

A critic of IVF would therefore need to demonstrate why all other cases of intervention should be allowed – but not this.

Is technology destroying your workplace culture?

BY Matthew Beard 6 APR 2019

If you were to put together a list of all the buzzwords and hot topics in business today, you’d be hard pressed to leave off culture, innovation or disruption.

They might even be the top three. In an environment of constant technological change, we’re continuously promised a new edge. We can have sleeker service, faster communication or better teamwork.

This all makes sense. Technology is the future of work. Whether it’s remote work, agile workflows or AI-enhanced research, we’re going to be able to do more with less, and do it better.

For organisations who are doing good work, that’s great. And if those organisations are working for the good of society (as they should), that’s great for us all.

Without looking a gift horse in the mouth though, we should take care that technology enhances our work rather than distracting us from it.

Most of us can probably think of a time when our office suddenly had to work with a totally new, totally pointless bit of software. Out of nowhere, you’ve got a new chatbot, all your info has been moved to ‘the cloud’ or customer emails are now automated.

This is usually the result of what the comedian Eddie Izzard calls “techno-joy”. It’s the unthinking optimism that technology is a cure for all woes.

Unfortunately, it’s not. Techno-joyful managers are more headache than helper. But more than that, they can also put your culture – or worse, your ethics – in a tricky spot.

Here’s the thing about technology. It’s more than hardware or code. Technology carries a set of values with it. This happens in a few ways.

Techno-logic

All technology works through a worldview we call ‘techno-logic’. Basically, technology aims to help us control things by making the world more efficient and effective. As we explained in our recent publication, Ethical by Design:

Techno-logic sees the world as though it is something we can shape, control, measure, store and ultimately use. According to this view, techno-logic is the ‘logic of control’. No matter the question, techno-logic has one overriding concern: how can we measure, alter, control or use this to serve our goals?

Whenever you’re engaging with technology, you’re being invited and encouraged to see the world in a really narrow way. That can be useful – problem solving happens by ignoring what doesn’t matter and focussing on what’s important. But it can also mean we ignore stuff that matters more than just getting the job done as fast or effectively as we can.

A great example of this comes from Up in the Air, a film in which Ryan Bingham (George Clooney) works for a company who specialise in sacking people. When there are mass layoffs to be made, Bingham is there. Until technology comes to call. Research suggests video conferencing would be cheaper and more effective. Why fly people around America when you can sack someone from the comfort of your own office?

As Bingham points out, you do it because sometimes making something efficient destroys it. Imagine going on an efficient date or keeping every conversation as efficient as possible. We’d lose something essential, something rich and human.

With so much technology available to help with recruitment, performance management and customer relations, we need to be mindful that technology is fit for purpose. It’s very easy for us to be sucked into the logic of technology until suddenly, it’s not serving us, we’re serving it. Just look at journalism.

Drinking the affordance Kool-Aid

Journalism has always evolved alongside media. From newspaper to radio, podcasting and online, it’s a (sometimes) great example of an industry adapting to technological change. But at times, it over-adapts, and the technological cart starts to pull the journalistic horse.

Today, online articles are ‘optimised’ to drive engagement and audience. This means stories are designed to hit a sweet spot in word count to ensure people don’t tune out, they’re given titles that are likely to generate clicks and traffic, and the kinds of things people are likely to read tend to get more attention.

A lot of that is common sense, but when it turns out that what drives engagement is emotion and conflict, this can put journalists in a bind. Are they impartial reporters of truth, lacking an audience, or do they massage journalistic principles a little so they can get the most readers they can?

I’ll leave it to you to decide which way journalism as an industry has gone. What’s worth noting is that many working in media weren’t aware of some of these changes whilst they were happening. That’s partly because they’re so close to the day-to-day work, but it can also be explained by something called ‘affordance theory’.

Affordance theory suggests that technological design contains little prompts, suggesting to users how they should interact with it. They invite users to behave in certain ways and not others. For example, Facebook makes it easier for you to respond to an article with feelings than thinking. How? All you need to do to ‘like’ a post is click a button but typing out a thought requires work.

Worse, Facebook doesn’t require you to read an article at all before you respond. It encourages quick, emotional, instinctive reactions and discourages slow thinking (through features like automatic updates to feeds and infinite scroll).

These affordances are the water we swim in when we’re using technology. As users, we need to be aware of them, but we also need to be mindful of how they can affect purpose.

Technology isn’t just a tool, it’s loaded with values, invitations and ethical judgements. If organisations don’t know what kind of ethical judgements are in the tools they’re using, they shouldn’t be surprised when they end up building something they don’t like.

Can robots solve our aged care crisis?

BY Fiona Smith 21 MAR 2019

Would you trust a robot to look after the people who brought you into this world?

While most of us would want our parents and grandparents to have the attention of a kindly human when they need assistance, we may have to make do with technology.

The reason is: there are just not enough flesh-and-blood carers around. We have more seniors entering aged care than ever before, living longer and with complex needs, and we cannot adequately staff our aged care facilities.

The percentage of the Australian population aged over 85 is expected to double by 2066 and the aged care workforce would need to increase between two and three times before 2050 to provide care.

The looming dilemma

With aged care workers among the worst-paid in our society, there is no hope of filling that kind of demand. The Royal Commission into aged care quality and safety is now underway and we are facing a year of revelations about the impacts of understaffing, underfunding and inadequate training.

Some of the complaints already aired in the commission include unacceptably high rates of malnutrition among residents, lack of individualised care and cost-cutting that results in rationing necessities such as incontinence pads.

While the development of “assistance robots” promises to help improve services and the quality of life for those in aged care facilities, there are concerns that technology should not be used as a substitute for human contact.

Connection and interactivity

Human interaction is a critical source of intangible value for the development of human beings, according to Dr Costantino Grasso, Assistant Professor in Law at Coventry University and Global Module Leader for Corporate Governance and Ethics at the University of London.

“Such form of interaction is enjoyed by patients on every occasion in which a nurse interacts with them. The very presence of a human entails the patient value recognising him or her as a unique individual rather than an impersonal entity.

“This cannot be replaced by a robot because of its ‘mechanical’, ‘pre-programmed’ and thus ‘neutral’ way to interact with patients,” Grasso writes in The Corporate Social Responsibility And Business Ethics Blog.

The loss of privacy and autonomy?

An overview of research into this area by Canada’s McMaster University shows older adults worry the use of socially assistive robots may lead to a dehumanised society and a decrease in human contact.

“Also, despite their preference for a robot capable of interacting as a real person, they perceived the relationship with a humanoid robot as counterfeit, a deception,” according to the university.

Older adults also perceived the surveillance function of socially assistive robots as a threat to their autonomy and privacy.

A potential solution to the crisis

The ElliQ, a “home robot” now on the market, is a device that looks like a lamp (with a head that nods and moves) that is voice activated and can be the interface between the owner and their computer or mobile phone.

It can be used to remind people to take their medication or go for a walk, it can read out emails and texts, make phone calls and video calls and its video surveillance camera can trigger calls for assistance if the resident falls or has a medical problem.

The manufacturer, Intuition Robotics, says issues of privacy are sorted out “well in advance”, so that the resident decides whether family or anyone else should be notified about medical matters, such as erratic behaviour.

Despite having a “personality” of a helpful friend (who willingly shoulders the blame for any misunderstandings, such as unclear instructions from the user), it is not humanoid in appearance.

While ElliQ does not pretend to be anything but “technology”, other assistance robots are humanoid in appearance or may take the form of a cuddly animal. There are particular concerns about the use of assistance robots for people who are cognitively impaired – those affected by dementia, for instance.

While it is a guiding principle in the artificial intelligence community that the robots should not be deceptive, some have argued that it should not matter if someone with dementia believes their cuddly assistance robot is alive, if it brings them comfort.

Ten tech developments in Aged Care

1. Robotic transport trolleys:

The Lamson RoboCart delivers meals, medication, laundry, waste and supplies.

2. Humanoid companions:

AvatarMind’s iPal is a constant companion that supplements personal care services and provides security with alerts for many medical emergencies such as falling down. Zora, a robot the size of a big doll, is overseen by a nurse with a laptop. Researchers in Australia found that it improved the mood of some patients, and got them more involved in activities, but required significant technical support.

3. Emotional support:

Paro is an interactive robotic baby seal that responds to touch, noise, light and temperature by moving its head and legs or making sounds. The robot has helped to improve the mood of its users, as well as offering some relief from the strains of anxiety and depression. It is used in Australia by RSL LifeCare.

4. Memory recovery:

Dthera Sciences has built a therapy that uses music and images to help patients recover memories. It analyses facial expressions to monitor the emotional impact on patients.

5. Korongee village:

This is a $25 million Tasmanian facility for people with dementia, comprising 15 homes set within a small town context, with streets, a supermarket, cinema, café, beauty salon and gardens. It was inspired by the dementia village of De Hogeweyk in the Netherlands, where residents have been found to live longer, eat better and take fewer medications.

6. Pub for people with dementia:

Derwen Ward, part of Cefn Coed Hospital in Wales, opened the Derwen Arms last year to provide residents with a safe, but familiar, environment. The pub serves (non-alcoholic) beer, and has a pool table, and a dart board.

7. Pain detection:

PainChek is facial recognition software that can detect pain in the elderly and people living with dementia. The tool has provided a significant improvement in data handling and simplification of reporting.

8. Providing sight:

IrisVision involves a Samsung smartphone and a virtual reality (VR) headset to help people with vision impairment see more clearly.

9. Holographic doctors:

Community health provider Silver Chain has been working on technology that uses “holographic doctors” to visit patients in their homes, creating a virtual clinic where healthcare professionals can have access to data and doctors.

10. Robotic suit:

A battery-powered soft exoskeleton helps people walk to restore mobility and independence.

BY Fiona Smith

Fiona Smith is a freelance journalist who writes about people, workplaces and social equity. Follow her on Twitter @fionaatwork

Blockchain: Some ethical considerations

BY Simon Longstaff 16 MAR 2019

The development and application of blockchain technologies give rise to two major ethical issues to do with:

- Meeting expectations – in terms of security, privacy, efficiency and the integrity of the system, and

- The need to avoid the inadvertent facilitation of unconscionable conduct: crime and oppressive conduct that would otherwise be offset by a mediating institution

Neither issue is unique to blockchain. Neither is likely to be fatal to its application. However, both involve considerable risks if not anticipated and proactively addressed.

At the core of blockchain technology lies the operation of a distributed ledger in which multiple nodes independently record and verify changes on the block. Those changes can signify anything – a change in ownership, an advance in understanding or consensus, an exchange of information. That is, the coding of the blockchain is independent and ‘symbolic’ of a change in a separate and distinct real-world artefact (a physical object, a social fact – such as an agreement, a state of affairs, etc.).

The potential power of blockchain technology lies in a form of distribution associated with a technically valid equivalent of ‘intersubjective agreement’. Just as in language the meaning of a word remains stable because of the agreement of multiple users of that word, so blockchain ‘democratises’ agreement that a certain state of affairs exists. Prior to the evolution of blockchain, the process of verification was undertaken by one (or a few) sources of authority – exchanges and the like. They were the equivalent of the old mainframe computers that dominated the computing landscape until challenged by PCs enabled by the internet and World Wide Web.

Blockchain promises greater efficiency (perhaps), security, privacy and integrity by removing the risk (and friction) that arises out of dependence on just one or a few nodes of authority. Indeed, at least some of the appeal of blockchain is its essentially ‘anti-authoritarian’ character.

However, the first ethical risk to be managed by blockchain advocates is that they not over-hype the technology’s potential and then over-promise in terms of what it can deliver. The risk of doing either can be seen at work in an analogous field – that of medical research. Scientists and technologists often feel compelled to announce ‘breakthroughs’ that, on closer inspection, barely merit that description. Money, ego, peer group pressure – these and other factors contribute to the tendency for the ‘new’ to claim more than can be delivered.

It’s not just that this can lead to disappointment – very real harm can befall the gullible. One can foresee an indeterminate period of time during which the potential of blockchain is out of step with what is technically possible. It all depends on the scope of blockchain’s ambitions – and the ability of the distributed architecture to maintain the communications and processing power needed to manage and process an explosion in blockchain related information.

Yet, this is the lesser of blockchain’s two major ethical challenges. The greater problem arises in conditions of asymmetry of power (bargaining power, information, kinetic force, etc.) – where blockchain might enable ‘transactions’ that are the product of force, fear and fraud. All three ‘evils’ destroy the efficiency of free markets – and from an ethical point of view, that is the least of the problems.

One advantage of mediating institutions is that they can provide a measure of supervision intended to identify and constrain the misuse of markets. They can limit exploitation or the use of systems for criminal or anti-social activity. The ‘dark web’ shows what can happen when there is no mediation. Libertarians applaud the degree of freedom it accords. However, others are justifiably concerned by the facilitation of conduct that violates the fundamental norms on which any functional society must be based. It is instructive that crypto-currencies (based on blockchain) are the media of exchange in the rankest regions of the dark web.

So, how do the designers and developers of blockchain avoid becoming complicit in evil? Can they do better than existing mediating institutions? May they ‘wash their hands’ even when their tools are used in the worst of human deeds?

This article was first published here. Dr Simon Longstaff presented at The ADC Global Blockchain Summit in Adelaide on Monday 18 March on the issue of trust and the preservation of ethics in the transition to a digital world.

BY Simon Longstaff

Simon Longstaff began his working life on Groote Eylandt in the Northern Territory of Australia. He is proud of his kinship ties to the Anindilyakwa people. After a period studying law in Sydney and teaching in Tasmania, he pursued postgraduate studies as a Member of Magdalene College, Cambridge. In 1991, Simon commenced his work as the first Executive Director of The Ethics Centre. In 2013, he was made an officer of the Order of Australia (AO) for “distinguished service to the community through the promotion of ethical standards in governance and business, to improving corporate responsibility, and to philosophy.” Simon is an Adjunct Professor of the Australian Graduate School of Management at UNSW, a Fellow of CPA Australia, the Royal Society of NSW and the Australian Risk Policy Institute.

Not too late: regaining control of your data

BY The Ethics Centre 15 MAR 2019

IT entrepreneur Joanne Cooper wants consumers to be able to decide who holds – and uses – their data. This is why Alexa and Siri are not welcome in her home.

Joanne won’t go to bed with her mobile phone on the bedside table. It is not that she is worried about sleep disturbances – she is more concerned about the potential of hackers to use it as a listening device.

“Because I would be horrified if people heard how loud I snore,” she says.

She is only half-joking. As an entrepreneur in the field of data privacy, she has heard enough horror stories about the hijacking of devices to make her wary of things that most of us now take for granted.

“If my device, just because it happened to be plugged in my room, became a listening device, or a filming device, would that put me in a compromising position? Could I have a ransomware attack?”

(It can happen and has happened. Spyware and Stalkerware are openly advertised for sale.)

Taking back control

Cooper is the founder of ID Exchange – an Australian start-up aiming to allow users to control whether, when and with whom they share their data. The idea is to simplify the process so that people will be able to visit one platform to control access.

This is important because, at present, it is impossible to keep track of who has your data and how much access you have agreed to and whether you have allowed it to be used by third parties. If you decide to revoke that access, the process is difficult and time-consuming.

Big data is big business

The data that belongs to you is liquid gold for businesses wanting to improve their offerings and pinpoint potential customers. It is also vital information for government agencies and a cash pot for hackers.

Apart from the basic name, address, age details, that data can reveal the people to whom you are connected, your finances, health, personality, preferences and where you are located at any point in time.

That information is harvested from everyday interactions with social media, service providers and retailers. For instance, every time you answer a free quiz on Facebook, you are providing someone with data.

With digital identity and personal data-related services expected to be worth $1.58 trillion in the EU alone by 2020, Cooper asks whether we have consciously given permission for that data to be shared and used.

A lack of understanding

Do we realise what we have done when we tick a permission box among screens of densely-worded legalese? When we sign up to a loyalty program?

A study by the Consumer Policy Research Centre finds that 94 per cent of those surveyed did not read privacy policies. Of those that did, two-thirds said they still signed up despite feeling uncomfortable and, of those, 73 per cent said they would not otherwise have been able to access the service.

And what are we getting in return for that data? Do we really want advertisers to know our weak points, such as when we are in a low mood and susceptible to “retail therapy”? Do we want them to conclude we are expecting a new baby before we have had a chance to announce it to our own families?

Even without criminal intent, limited control over the use of our data can have life-altering consequences when it is used against us in deciding whether we may qualify for insurance, a loan, or a job.

“It is not my intention to create fear or doubt or uncertainty about the future,” explains Cooper. “My passion is to drive education about how we have to become ‘self-accountable’ about the access to our data that will drive a trillion-dollar market.”

“Privacy is a Human Right.”

Cooper was schooled in technology and entrepreneurialism by her father, Tom Cooper, who was one of the Australian IT industry’s pioneers. In the 1980s, he introduced the first IBM Compatible DOS-based computers into this country.

She started working in her father’s company at the age of 15 and has spent the past three decades in a variety of IT sectors, including the PC market, consulting for The Yankee Group, as a cloud specialist for Optus Australia, and financial services with Allianz Australia.

Starting ID Exchange in 2015, Cooper partnered with UK-based platform Digi.me, which aims to round up all the information that companies have collected on individuals, then hand it over to those individuals for safekeeping on a cloud storage service of their choosing. Cooper is planning to add in her own business, which would provide the technology to allow people to opt in and opt out of sharing their data easily.

Cooper says she became passionate about the issue of data privacy in 2015, after watching a 60 Minutes television segment about hackers using mobile phones to bug, track and hack people through a “security hole” in the SS7 signaling system.

This “hole” was most recently used to drain bank accounts at Metro Bank in the UK, it was revealed in February.

Lawmakers aim to strengthen data protection

The new European General Data Protection Regulation is a step forward in regaining control of the use of data. Any Australian business that collects data on a person in the EU or has a presence in Europe must comply with the legislation that ensures customers can refuse to give away non-essential information.

If that company then refuses service, it can be fined up to 4 per cent of its global revenue. Companies are required to get clear consent to collect personal data and to allow individuals to access the data stored about them, fix it if it is wrong, and have it deleted if they want.

The advance of the “internet of things” means that everyday objects are being computerised and are capable of collecting and transmitting data about us and how we use them. A robotic vacuum cleaner can, for instance, record the dimensions of your home. Smart lighting can take note of when you are home. Your car knows exactly where you have gone.

For this reason, Cooper says she will not have voice-activated assistants – such as Google’s Home, Amazon Echo’s Alexa or Facebook’s Portal – in her home. “It has crossed over the creepy line,” she says.

“All that data can be used in machine learning. They know what time you are in the house, what room you are in, how many people are in the conversation, keywords.”

Your data can be compromised

Speculation that Alexa is spying on us by storing our private conversations has been dismissed by fact-checking website Politifact, although researchers have found the device can be hacked.

The devices are “always-on” to listen for an activating keyword, but the ambient noise is recorded one second at a time, with each second dumped and replaced until it hears a keyword like “Alexa”.

However, direct commands to Google’s and Amazon’s assistants are recorded and stored on company servers. That data, which can be reviewed and deleted by users, is used to differing extents by the manufacturers.

Google uses the data to build out your profile, which helps advertisers target you. Amazon keeps the data to itself but may use that to sell you products and services through its own businesses. For instance, the company has been granted a patent to recommend cough sweets and soup to those who cough or sniff while speaking to their Echo.

In discussions of the rising concerns over the use and misuse of our data, Cooper says she is frustrated by those who tell her that “privacy is dead” or “the horse has bolted”. She says it is not too late to regain control of our data.

“It is hard to fix, it is complex, it is a u-turn in some areas, but that doesn’t mean that you don’t do it.”

It was not that long ago that publicly disagreeing with your employer’s business strategy or staging a protest without the protection of a union would have been a sackable offence.

But not today – if you are among the business “elite”.

Last year, 4,000 Google employees signed a letter of protest about an artificial intelligence project with the Department of Defense. Google agreed not to renew the contract. No-one was fired.

Also at Google, employees won concessions after 20,000 of them walked out protesting the company’s handling of sexual harassment cases. Everyone kept their jobs.

Consulting firms Deloitte and McKinsey & Company, along with Microsoft, have come under pressure from employees to end their work with US Immigration and Customs Enforcement (ICE) because of concerns about the separation of children from their illegal immigrant parents.

Amazon workers demanded the company stop selling its Rekognition facial recognition software to law enforcement.

Examples like these show that collective action at work can still take place, despite the decline of unionism, if the employees are considered valuable enough and the employer cares about its social standing.

The power shift

Charles Wookey, CEO of not-for-profit organisation A Blueprint for Better Business, says workers in these kinds of protests have “significant agency”.

“Coders and other technology specialists can demand high pay and have some power, as they hold skills in which the demand far outstrips the supply,” he told CEO Magazine.

Individual protesters and whistle-blowers, however, do not enjoy the same freedom to protest. Without a mass of colleagues behind them, they can face legal sanction or be fired for violating the company’s code of conduct – as was Google engineer James Damore when he wrote a memo criticising the company’s affirmative action policies in 2017.

Head of Society and Innovation at the World Economic Forum, Nicholas Davis, says technology has enabled employees to organise via message boards and email.

“These factors have empowered employee activism, organisation and, indeed, massive walkouts – not just around tech, by the way, but around gender and about rights and values in other areas,” he said at a forum for The Ethics Alliance in March.

Change coming from within

Davis, a former lawyer from Sydney, now based in Geneva, says even companies with stellar reputations in human rights, such as Salesforce, can face protests from within – in this case, also due to its work with ICE.

“There were protesters at [Salesforce annual conference] Dreamforce saying: ‘Guys, you’re providing your technology to customs and border control to separate kids from their parents?’” he said.

Staff engagement and transparency

Salesforce responded by creating Silicon Valley’s first-ever Office of Ethical and Humane Use of Technology as a vehicle to engage employees and stakeholders.

“I think the most important thing is to treat it as an opportunity for employee engagement,” says Davis, adding that listening to employee concerns is a large part of dealing with these clashes.

“Ninety per cent of the problem was not [what they were doing] so much as the lack of response to employee concerns,” he says. Employers should talk about why the company is doing the work in question and respond promptly.

“After 72 hours, people think you are not taking this seriously and they say ‘I can get another job, you know’, start tweeting, contact someone in the ABC, the story is out and then suddenly there is a different crisis conversation.”

Davis says it is difficult to have a conversation about corporate social activism in Australia, where business leaders say they are getting resistance from shareholders.

“There’s a lot more space to talk about, debate, and being politically engaged as a management and leadership team on these issues. And there is a wider variety of ability to invest and partner on these topics than I perceive in Australia,” says Davis, who is also an adjunct professor with Swinburne University’s Institute for Social Innovation.

“It’s not an issue of courage. I think it’s an issue with openness and demand and shifting culture in those markets. This is a hard conversation to have in Australia. It seems more structurally difficult,” he says.

“From where I stand, Australia has far greater fractures in terms of the distance between the public, private and civil society sectors than any other country I work in regularly. The levels of distrust here in this country are far higher than average globally, which makes for huge challenges if we are to have productive conversations across sectors.”

BY The Ethics Centre

The Ethics Centre is a not-for-profit organisation developing innovative programs, services and experiences, designed to bring ethics to the centre of professional and personal life.

If humans bully robots there will be dire consequences

Opinion + Analysis

Relationships, Science + Technology

BY Fiona Smith 13 MAR 2019

HitchBOT was a cute hitchhiking robot made up of odds and ends as an experiment to see how humans respond to new technology. Two weeks into its journey across the United States, it was beheaded in an act of vandalism.

For most of its year-long “world tour” in 2015, the Wellington-boot-wearing robot was met with kindness, appearing in “selfies” with the people who had picked it up by the side of the road and taken it to football games and art galleries.

However, the destruction of HitchBOT points to a darker side of human psychology – where some people will act out their more violent and anti-social instincts on a piece of human-like technology.

A target for violence

Manufacturers of robots are well aware that their products can become a target, with plenty of reports of wilful damage. Here’s a brief timeline of the types of bullying humans have inflicted on our robotic counterparts in recent years.

- The makers of a wheeled robot that delivers takeaway food in business parks reported that people kick or flip over the machines for no apparent reason.

- Homeless people in the US threw a tarpaulin over a patrolling security robot in a carpark and smeared barbeque sauce over its lenses.

- Google’s self-driving cars have been attacked. Children in Japan have reportedly attacked robots in shopping malls, leading their designers to write programs to help them avoid small people.

- Less than 24 hours after its launch, Microsoft’s chatbot “Tay” had been corrupted into a racist by social media users who encouraged its antisocial pronouncements.

Researchers speculated to the Boston Globe that the motives for these attacks could be boredom or annoyance at how the technology was being used. When you look at those examples together, is it fair to say we are becoming brutes?

Programming for human behaviour

While manufacturers want us to be kind to their robots, researchers are examining the ways human behaviour is changing in response to the use of technology.

Take the style of discourse on social media, for example. You don’t have to spend long on a Facebook or Twitter discussion before you are confronted with an example of written aggression.

“I think people’s communications skills have deteriorated enormously because of the digital age,” says Tania de Jong, founder and executive producer of the Creative Innovation summit, which will be held in Melbourne in April.

“It is like people slapping each other – slap, slap, slap. It is like common courtesies that we took for granted as human beings are being bypassed in some way.”

Clinical psychologist Louise Remond says words typed online are easily misinterpreted. “The verbal component is only 7 per cent of the whole message and the other components are the tone and the body language and those things you get from interacting with a person.”

The dark power of anonymity

“The disinhibition of anonymity, where people will say things they would never utter if they knew they were being identified and observed, is another factor in poor online behaviour. But, even when people are identifiable, they sometimes lose sight of how many people can see their messages,” says Remond, who works at the Kidman Centre in Sydney.

Text messaging is abbreviated communication, says Dr Robyn Johns, Senior Lecturer in Human Resource Management at the University of Technology, Sydney. “So you lose that tone and the intention around it and it can come across as being quite coarse,” she says.

Is civility at risk?

If we are rude to machines, will we be rude to each other?

If you drop your usual polite attitude when dealing with a taxi-ordering chatbot, are you more likely to treat a human the same way? Possibly, says de Jong. The experience of call centre workers could be a bad omen: “A lot of people are rude to those workers, but polite to the people who work with them.”

“Perhaps there is a case to be made that we all need to be a lot more respectful,” says de Jong, who founded the non-profit Creativity Australia, which aims to unlock the creativity of employees.

“A general rule, if we are going to act with integrity as whole human beings, we are not going to have different ways of talking to different things.”

The COO of “empathetic AI” company Sensum, Ben Bland, recently wrote that his company’s rule-of-thumb is to apply the principle of “don’t be a dick” to its interactions with AI.

“ … we should consider if being mean to machines will encourage us to become meaner people in general. But whether or not treating [digital personal assistant] Alexa like a disobedient slave will cause us to become bad neighbours, there’s a stickier aspect to this problem. What happens when AI is blended with ourselves?” he asks in a column published on Medium.com.

“With the adoption of tools such as intelligent prosthetics, the line between human and machine is increasingly blurry. We may have to consider the social consequences of every interaction, between both natural and artificial entities, because it might soon be difficult or unethical to tell the difference.”

Research Specialist at the MIT Media Lab, Dr Kate Darling, told CBC News in 2016 that research shows a relationship between people’s tendencies for empathy and the way they treat a robot.

“You know how it’s a red flag if your date is nice to you, but rude to the waiter? Maybe if your date is mean to Siri, you should not go on another date with that person.”

Research fellow at MIT Sloan School’s Center for Digital Business, Michael Schrage, has forecast that “ … being bad to bots will become professionally and socially taboo in tomorrow’s workplace”.

“When ‘deep learning’ devices emotionally resonate with their users, mistreating them feels less like breaking one’s mobile phone than kicking a kitten. The former earns a reprimand; the latter gets you fired,” he writes in the Harvard Business Review.

Need to practise human-to-human skills

Johns says we are starting to reach a “tipping point” where that online style of behaviour is bleeding into face-to-face interactions.

“There seems to be a lot more discussion around people not being able to communicate face-to-face,” she says.

When she was consulting to a large fast food provider recently, managers told her they had trouble getting young workers to interact with older customers who wanted help with the automated ordering system.

“They [the workers] hate that. They don’t want to talk to anyone. They run and hide behind the counter,” says Johns, a doctor of Philosophy with a background in human resources.

The young workers vie for positions “behind the scenes” whereas, previously, the serving positions were the most sought-after.

Johns says she expects to see etiquette classes making a comeback as employers and universities take responsibility for training people to communicate clearly, confidently and politely.

“I see it with graduating students, those who are able to communicate and present well are the first to get the jobs,” she says.

We watch and learn

Remond specialises in dealing with young people – immersed in cyber worlds since a very young age – and says there is a human instinct to connect with others, but the skills have to be practised.

“There is an element of hardwiring in all of us to be empathetic and respond to social cues,” she says.

Young people can practise social skills in a variety of real-life environments, rather than merely absorbing the poor role models they find on reality television shows.

“There are a lot of other influences. We learn so much from the social modelling of other people. You can walk into a work environment and watch how other people interact with each other at lunchtime.”

Remond says employers should ensure people who work remotely have opportunities to reconnect face-to-face. “If you are part of a team, you are going to work at your best when you feel a genuine connection with these people and you feel like you trust them and you feel like you can engage with them.”

This article was originally written for The Ethics Alliance.

BY Fiona Smith

Fiona Smith is a freelance journalist who writes about people, workplaces and social equity. Follow her on Twitter @fionaatwork

How will we teach the robots to behave themselves?

Opinion + Analysis

Science + Technology

BY Simon Longstaff 5 MAR 2019

The era of artificial intelligence (AI) is upon us. On one hand it is heralded as the technology that will reshape society, making many of our occupations redundant.

On the other, it’s talked about as the solution that will unlock an unfathomable level of processing efficiency, giving rise to widespread societal benefits and enhanced intellectual opportunity for our workforce.

Either way, one thing is clear – AI has an ability to deliver insights and knowledge at a velocity that would be impossible for humans to match and it’s altering the fabric of our societies.

The impact that comes with this wave of change is remarkable. For example, IBM Watson has been used for early detection of melanoma, something very close to home considering Australians and New Zealanders have the highest rates of skin cancer in the world. Watson’s diagnostic capacity exceeds that of most (if not all) human doctors.

Technologists in the AI space around the world are breaking new ground weekly – that is an exciting testament to humankind’s ability. In addition to advancements in healthcare, 2018 included milestones in AI being used for autonomous vehicles, with the Australian government announcing the creation of a new national office for future transport technologies in October.

However, the power to innovate creates proportionately equal risk and opportunity – technology with the power to do good can, in almost every case, be applied for bad. And in 2019 we must move this from an interesting dinner-party conversation to a central debate in businesses, government and society.

AI is a major area of ethical risk. It is being driven by technological design processes that are mostly devoid of robust ethical consideration – a concern that should be at the top of the agenda for all of us. When technical mastery of any kind is divorced from ethical restraint the result is tyranny.

The knowledge that’s generated by AI will only ever be the cold logic of the machine. It lacks the nuanced judgment that humans have. Unless AI’s great processing power is met and matched with an equal degree of ethical restraint, the good it creates is not only lost but potentially damaging. The lesson we need to learn is this: just because we can do something doesn’t mean that we should.

Ethical knowledge

As citizens, our priority must be to ensure that AI works in the interests of the many rather than the few.

Currently, we’re naively assuming that the AI coders and developers have the ethical knowledge, understanding and skills to navigate the challenges that their technological innovations create.

In these circumstances, sound ethical judgment is just as important a skill as the ability to code effectively. It is a skill that must be learned, practised and deployed. Yet, very little ethical progress or development has been made in the curriculum to inform the design and development of AI.

This is a “human challenge” not a “technology challenge”. The role of people is only becoming more important in the era of AI. We must invest in teaching ethics as applied to technological innovation.

Building a platform of trust

In Australia, trust is at an all-time low because the ethical infrastructure of our society is largely damaged – from politics to sport to religious institutions to business. Trust is created when values and principles are explicitly integrated into the foundations of what is being designed and built. Whatever AI solution is developed and deployed, ethics must be at the core – consciously built into the solutions themselves, not added as an afterthought.

Creating an ethical, technologically advanced culture requires proactive and intentional collaboration from those who participate in society: academia, businesses and governments. Although we’re seeing some positive early signs, such as the forums that IBM is creating to bring stakeholders from these communities together to debate and collaborate on this issue, we need much more of the same – all driven by an increased sense of urgency.

To ensure responsible stewardship is at the centre of the AI era, we need to deploy a framework that encourages creativity and supports innovation, while holding people accountable.

This story first appeared on Australian Financial Review – republished with permission.

BY Simon Longstaff

Simon Longstaff began his working life on Groote Eylandt in the Northern Territory of Australia. He is proud of his kinship ties to the Anindilyakwa people. After a period studying law in Sydney and teaching in Tasmania, he pursued postgraduate studies as a Member of Magdalene College, Cambridge. In 1991, Simon commenced his work as the first Executive Director of The Ethics Centre. In 2013, he was made an Officer of the Order of Australia (AO) for “distinguished service to the community through the promotion of ethical standards in governance and business, to improving corporate responsibility, and to philosophy.” Simon is an Adjunct Professor of the Australian Graduate School of Management at UNSW, a Fellow of CPA Australia, the Royal Society of NSW and the Australian Risk Policy Institute.

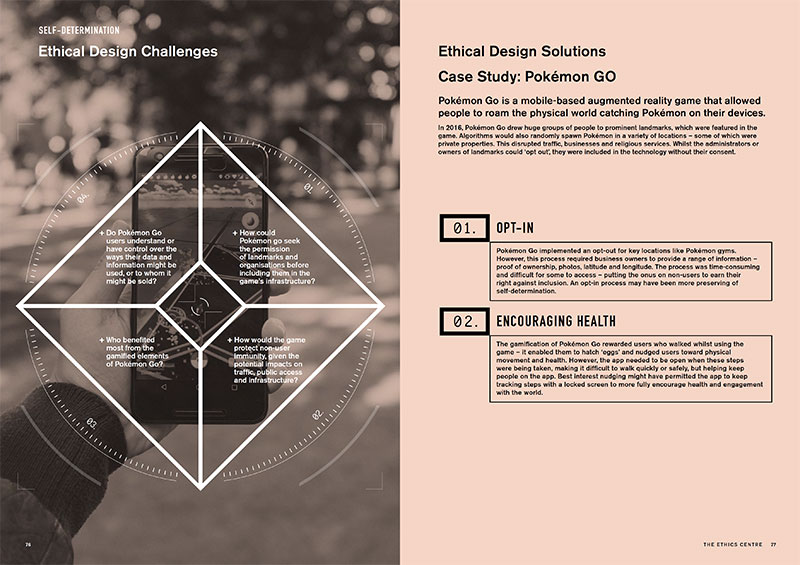

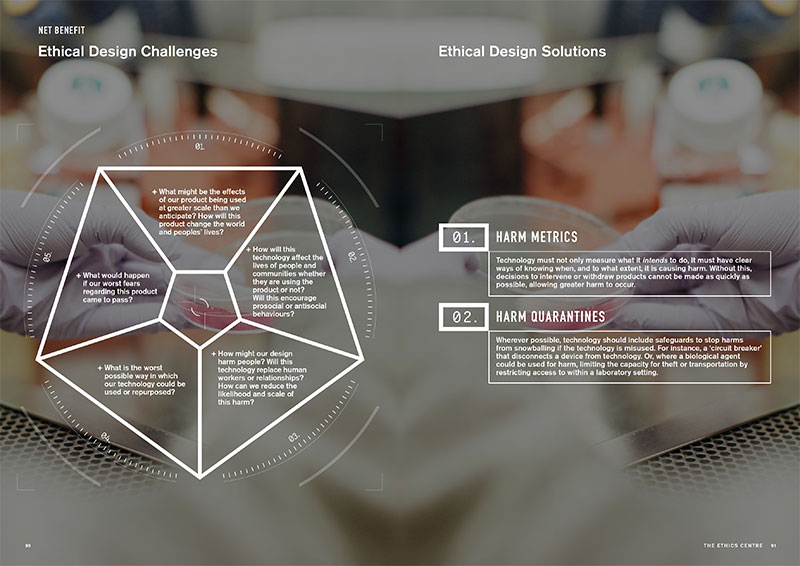

Ethical by Design: Principles for Good Technology

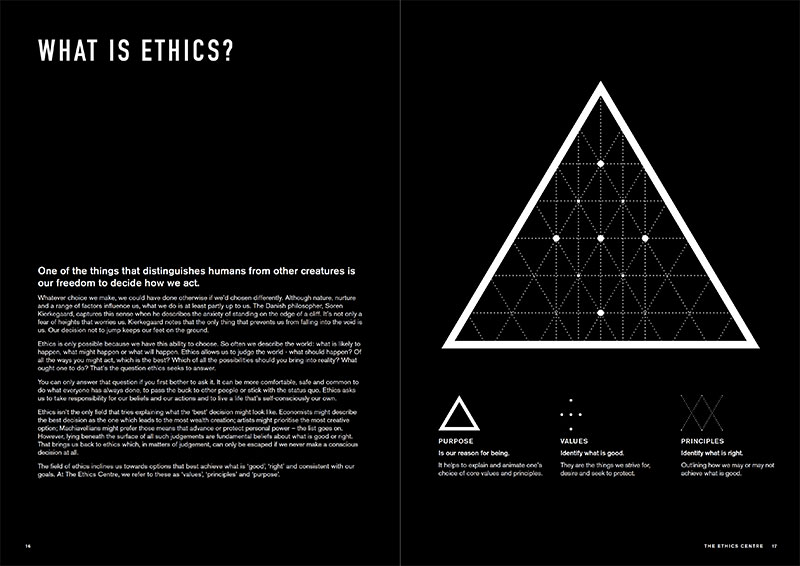

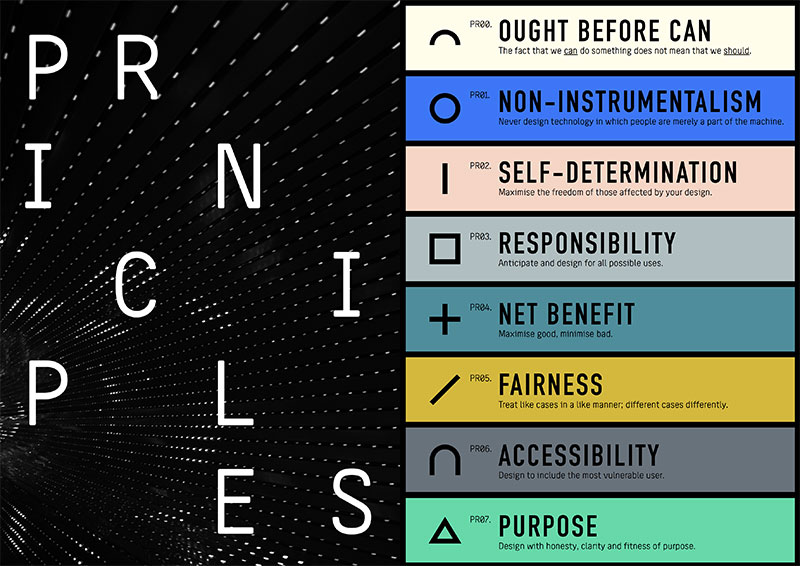

Learn the principles you need to consider when designing ethical technology, how to balance the intentions of design and use, and the rules of thumb to prevent ethical missteps. Understand how to break down some of the biggest challenges and explore a new way of thinking for creating purpose-based design.

You’re responsible for what you design – make sure you build something good. Whether you are editing a genome, building a driverless car or writing a social media algorithm, this report offers the knowledge and tools to do so ethically. From Facebook to a brand new start-up, the responsibility begins with you. In this guide we offer key principles to help guide ethical technology creation and management.

"Technology seems to be at the heart of more and more ethical crises. So many of the ethical scandals we’re seeing in the technology sector are happening because people aren’t well-equipped to take a holistic view of the ethical landscape."

DR MATTHEW BEARD

WHAT’S INSIDE?

What is ethics + ethical theories

Techno-ethical myths

The value of ethical frameworks

Rules of thumb to embed ethics in design

Case studies + ethical breakdowns

Core ethical design principles

Design challenges + solutions

The future of ethical technology

AUTHORS

Dr Matt Beard

is a moral philosopher with an academic background in applied and military ethics. He has taught philosophy and ethics at university for several years, during which time he has been published widely in academic journals and book chapters and has spoken at national and international conferences. Matt has advised the Australian Army on military ethics, including technology design. In 2016, Matt won the Australasian Association of Philosophy prize for media engagement, recognising his “prolific contribution to public philosophy”. He regularly appears on television, radio, online and in print.

Dr Simon Longstaff

has been Executive Director of The Ethics Centre for over 25 years, working across business, government and society. He has a PhD in philosophy from Cambridge University, is a Fellow of CPA Australia and of the Royal Society of NSW, and in June 2016 was appointed an Honorary Professor at ANU – based at the National Centre for Indigenous Studies. Simon co-founded the Festival of Dangerous Ideas and played a pivotal role in establishing both the industry-led Banking and Finance Oath and ethics classes in primary schools. He was made an Officer of the Order of Australia (AO) in 2013.
