Can there be culture without contact?

Opinion + Analysis

Business + Leadership

BY The Ethics Alliance 24 JAN 2022

COVID-19 has stripped offices everywhere of employees and disrupted and transformed workplace culture. Fiona Smith investigates if office conduct has suffered a COVID fall-out.

Human Resource executives all across Australia share one burning question: How can their companies lure employees back into the office?

In little more than 14 months, COVID-19 has overturned decades of corporate culture – one in which employees sat at their desks during work hours, communed in canteens and coffee shops and partied in pubs and wine bars before taking part in the traditional commute. Some thought the end of lockdown would bring them flocking back to the cities.

Instead, working from home has spawned a new world of options, brought families closer together, made life partners work partners and sparked the redesigning of our homes to permanently include everything needed to telecommute.

It’s a subject that’s dominating headlines and research reports, and the results of The Ethics Alliance Business Pulse confirm it – employees and leaders alike now place a high value on flexibility. Sixty-three per cent of survey respondents say they prefer a hybrid model that blends the benefits of working from home and face-to-face time.

41% of Australians with a job work from home at least once a week

16% of people working remotely say they are struggling with loneliness

14% of global employees say they work for an organisation with a strong ethical culture

77% of people say that being able to work from home post-COVID-19 would make them happier

(2021 data from ABS, Buffer State of Remote Work survey, Ethics and Compliance Initiative, and Owl Labs)

The survey finding subverts the idea that executive teams are in favour of employees returning to the office over any other workplace model. Seventy-seven per cent of respondents hold senior roles from managerial to board director positions and only 14 per cent can be considered to be ‘workers’.

At stake is more than just the use of real estate – it’s how organisations can continue to provide a satisfying workplace for their employees and how they can lay the foundation for future success. Many believe workplace culture – the neurodiversity effect of being among many of different abilities and opinions – is an essential driving force that creates new initiatives, gives projects their impetus and is the petri dish of business ideas. Others say new management techniques are needed to respond to a pandemic generational change.

Business leaders are coming to terms with the fact that a sizeable proportion of their workforces now comprise ‘COVID hires’ – people recruited in the past 18 months who haven’t set foot in the office.

Consultancy firm and The Ethics Alliance member Accenture is a case in point. The firm replenishes its ranks by hiring 100,000 people worldwide every year, a number that amounts to almost 20 per cent of its total workforce. That’s a lot of people to integrate into a workplace culture over 12 months – especially when done remotely and in a time of crisis.

Each new hire is screened for their ‘cultural fit’ and receives an induction into Accenture’s workplace systems, as well as its code of conduct.

This onboarding process gets staff ready to work and aims to ensure that they undertake their work at Accenture in the right way. When workplace culture is designed around contact, how can it be maintained when 20 per cent of the workplace have never been face-to-face with their new colleagues? And does it matter?

Bob Easton, Chairman of Accenture Australia & New Zealand, says people are slowly coming back to the office in Australia, but there are still many new Accenture employees around the world who have never met a colleague or client face-to-face.

“The only cultural reference framework for them is a conversation over these virtual meetings,” he says. “They do the training, but they don’t see it in action.”

Leaders question whether it is possible to embed an organisational culture when people can’t meet face-to-face. Before and after physical meetings, employees engage in small talk that can help promote a sense of communal belonging. When Zoom meetings end, the screen goes dark.

Dr Marc Stigter, Associate Director at Melbourne Business School, says managers are warning that the pandemic has created a ‘pressure cooker’, particularly for top managers and middle managers who are dealing with isolation, ‘Zoom fatigue’ and job insecurity.

“They have many kinds of challenges, but they still need to mobilise their teams and take those people with them,” says Dr Stigter, an international strategist who recently completed research for the Australian Human Resources Institute on the impact of the pandemic. “The workforce, in general, is under pressure to demonstrate value all the time,” he says.

Elisabeth Shaw, CEO of Relationships Australia NSW, believes companies now have two workplace cultures. There’s one group of employees who know each other well from working in the office and can draw on their past work stories and continue to create certain rituals, like sharing Friday night drinks in person or on Zoom. And another group who only know each other online. As they have never met physically, they will have to draw on their virtual relationship and Zoom meetings to build a bank of group memories.

One way of bridging the two work cultures is to have a buddy from each group looking after and creating cultural learnings and rituals to hold the group together. She believes the days of working full-time in the office may well be over as more employees opt to work part-time in the office and the rest at home. Increasingly, employers will have to manage a hybrid work model and create a more flexible work culture.

“The pandemic lockdown, which forced employees to work from home, has broken all the old rules,” she says. “The hybrid model of working part-time in the office and part-time at home is going to be more important. It has also benefited more people than expected as many employees do not feel torn or stressed, as they can have a better work-life balance. They can now pick up their kids from school as they are not spending so much time travelling to and from work.

“This will mean a more diverse workplace where employers will be able to employ interstate workers or people working remotely from the country region which they previously would not have considered.”

Shaw, who is a clinical and counselling psychologist, also suggested the hybrid model may lead to more business savings as employers can downsize their office space and rent large conference rooms when staff are required to attend whole day seminars or meetings.

However, employers will have to build certain business rules so that staff do not take undue advantage of flexible working hours. “We will have to navigate the needs of our customers, employers and employees as we move to a more flexible workplace,” she said.

However, she admitted that a flexible workplace is not the ‘Holy Grail’ for everybody. Some people still prefer face-to-face meetings, especially when they have to discuss a difficult workplace situation. “For online workers, it is not easy to navigate and read the signs that some people are not connected,” she said.

The office as ‘honeypot’

Domino Risch, workplace designer and Principal at design studio Hassell, says it’s possible to create a cohesive workplace even while adopting a hybrid work-from-home/work-from-office model. She says an appealing workplace can renew workplace culture on those days that employees are back in the office.

Risch says workplaces need to become more like ‘collective clubhouses’ if they are to create the sense of belonging that humans have developed over millions of years as social, group-based creatures who almost always work better together than alone.

Aside from creating workplaces that have been designed with human wellbeing in mind – that cater to our biophilia (our tendency to seek connections with nature and other forms of life) and our need for sensory diversity – they also need to deal in intangibles that create a more human-centric environment.

“What we’ve all missed from working from home is not our office or desk chair,” says Risch. Surveys around the world have found “people have missed people. They’ve missed contact, incidental conversation, debriefs on the way out of a meeting, overheard conversations in corridors and the opportunity to talk to people without it needing to be scheduled or online.”

She says these findings give us a clue as to how workplaces need to shift in terms of their fundamental purpose. Attracting people back into the office means creating spaces for collaboration, co-creation, synchronous thinking and shared storytelling. It’s only the very best design firms that can take a client’s strategic aspiration and intent, and use them to create a humanistic design solution, she adds.

The alternative to the collective idea, says Risch, is “factories of individual productivity”. These are offices that are simply a property and accommodation tool, and which lack all the requisite human aspects of good workplace design.

“Many of the organisations we work for ask us to think about ways to test, experiment, plan for and strategise exactly what the ‘collective clubhouse’ idea means for them,” she says.

“It’s super important to note though, that there is no magic wand. There is no one-size-fits-all solution – every organisation is different, with different values, culture, leadership and capability (and appetite!) for change.”

One thing’s for certain, Risch says, “fifteen months of a pandemic is never going to reverse the desire we have for belonging and contact – if anything it’s stronger now than ever before”.

Reflection from John Neil, Director of Innovation, The Ethics Centre

The idea that employees should return to the office represents a watershed – our response to immediate post-COVID challenges will set a course for what the future of work itself will look like.

Leaders can start by embracing the opportunity to reimagine what a creative, adaptable and human-centred working world can look like. They should be mindful of the powerful sunk cost biases and status quo at play. Our formative ways of working during COVID helped to dispel many of these, such as the belief that productivity is tethered to surveillance and control and that trust between employers and their employees can only be maintained when sharing the same four walls.

Culture is a manifestation of the physical environment and human relationships. Regardless of the relative configuration of office versus remote hours, the ability to be adaptive and responsive, to innovate and effectively deliver value, is closely correlated to culture – and particularly to levels of psychological safety.

Leaders therefore can have the biggest immediate impact in responding to their post-COVID challenges by doing three things:

• Be consultative – seek input from their teams on issues that directly affect them

• Be supportive – show empathy and concern for their people as individuals, not simply as employees

• Be challenging – invite their teams to think differently by re-examining assumptions about their work and how they can best fulfil their potential.

This article was published as part of Matrix Magazine, an initiative of The Ethics Alliance.

BY The Ethics Alliance

The Ethics Alliance is a community of organisations sharing insights and learning together, to find a better way of doing business. The Alliance is an initiative of The Ethics Centre.

Big Thinker: Kate Manne

Kate Manne (1983 – present) is an Australian philosopher who works at the intersection of feminist philosophy, metaethics, and moral psychology.

While Manne is an academic philosopher by training and practice, she is best known for her contributions to public philosophy. Her work draws upon the methodology of analytic philosophy to dissect the interrelated phenomena of misogyny and masculine entitlement.

What is misogyny?

Manne’s debut book, Down Girl: The Logic of Misogyny (2018), develops and defends a robust definition of misogyny that will allow us to better analyse the prevalence of violence and discrimination against women in contemporary society. Contrary to popular belief, Manne argues that misogyny is not a “deep-seated psychological hatred” of women, most often exhibited by men. Instead, she conceives of misogyny in structural terms, arguing that it is the “law enforcement” branch of patriarchy (male-dominated society and government), which exists to police the behaviour of women and girls through gendered norms and expectations.

Manne distinguishes misogyny from sexism by suggesting that the latter is more concerned with justifying and naturalising patriarchy through the spread of ideas about the relationship between biology, gender and social roles.

While the two concepts are closely related, Manne believes that people are capable of being misogynistic without consciously holding sexist beliefs. This is because misogyny, much like racism, is systemic and capable of flourishing regardless of someone’s psychological beliefs.

One of the most distinctive features of Manne’s philosophical work is that she interweaves case studies from public and political life into her writing to powerfully motivate her theoretical claims.

For instance, in Down Girl, Manne offers up the example of Julia Gillard’s famous misogyny speech from October 2012 as evidence of the distinction between sexism and misogyny in Australian politics. She contends that Gillard’s characterisation of then Opposition Leader Tony Abbott’s behaviour toward her as both sexist and misogynistic is entirely apt. His comments about the suitability of women to politics and characterisation of female voters as immersed in housework display sexist values, while his endorsement of statements like “Ditch the witch” and “man’s bitch” are designed to shame and belittle Gillard in accordance with misogyny.

Himpathy and herasure

One of the key concepts coined by Kate Manne is “himpathy”. She defines himpathy as “the disproportionate or inappropriate sympathy extended to a male perpetrator over his similarly, or less privileged, female targets in cases of sexual assault, harassment, and other misogynistic behaviour.”

According to Manne, himpathy operates in concert with misogyny. While misogyny seeks to discredit the testimony of women in cases of gendered violence, himpathy shields the perpetrators of that misogynistic behaviour from harm to their reputation by positioning them as “good guys” who are the victims of “witch hunts”. Consequently, the traumatic experiences of those women and their motivations for seeking justice are unfairly scrutinised and often disbelieved. Manne terms the impact of this social phenomenon upon women, “herasure.”

Manne’s book Entitled: How Male Privilege Hurts Women (2020) illustrates the potency of himpathy by analysing the treatment of Brett Kavanaugh during the Senate Judiciary Committee’s investigation into allegations of sexual assault levelled against Kavanaugh by Professor Christine Blasey Ford. Manne points to the public’s praise of Kavanaugh as a brilliant jurist who was being unfairly defamed by a woman who sought to derail his appointment to the Supreme Court of the United States as an example of himpathy in action.

She also suggests that the public scrutiny of Ford’s testimony and the conservative media’s attack on her character functioned to diminish her credibility in the eyes of the law and erase her experiences. The Senate’s ultimate endorsement of Justice Kavanaugh’s appointment to the Supreme Court proved Manne’s thesis – that male entitlement to positions of power is a product of patriarchy and serves to further entrench misogyny.

Evidently, Kate Manne is a philosopher who doesn’t shy away from thorny social debates. Manne’s decision to enliven her philosophical work with empirical evidence allows her to reach a broader audience and to increase the accessibility of philosophy for the public. She represents a new generation of female philosophers – brave, bold, and unapologetically political.

BY The Ethics Centre

The Ethics Centre is a not-for-profit organisation developing innovative programs, services and experiences, designed to bring ethics to the centre of professional and personal life.

Freedom and disagreement: How we move forward

Opinion + Analysis

Relationships

BY Georgia Fagan 17 JAN 2022

As it stands, the term “freedom” is being utilised as though it means the same thing across a variety of communities.

In the absence of a commitment to expand discourse between disagreeing parties, we may regrettably find ourselves occupying an increasingly polarised society, stricken with groups which take communication with one another as being a definitively hopeless exercise.

Freedom and its nuances have come poignantly into focus over the past two and a half years. Ongoing deliberation about pandemic rules and regulations has seen the notion being employed in myriad ways.

For some, freedom, and one’s right to it, has meant demanding that particular methods of curtailing viral spread remain optional and never be mandated. For others, the freedom to retain access to secure healthcare systems and avoid acquiring illness has meant calling for preventative methods to be enforced, heavily monitored, and in some cases made mandatory. Across most perspectives, individual freedoms are taken as having been directly impacted to degrees not previously experienced.

The concept of freedom, for better or worse, reliably takes centre stage in much political debate. The ways we conceive of it and deliberate on it impact our evaluation of governmental action. It appears, however, that the term often comes into conflict with itself, forming what may be characterised as a linguistic impasse, where one usage of freedom is evidently incompatible with another.

Canonical political philosopher Hannah Arendt addresses the largely elusive (albeit valorised) term in her paper, ‘What is Freedom?’. In it, she emphasises its inherent confusion: “…it becomes as impossible to conceive of freedom or its opposite as it is to realize the notion of a square circle.” Despite the linguistic and conceptual red flags which freedom bears, Arendt, and many others, persist in grappling with the topic in their work.

I am also sympathetic to the commitment to ongoing deliberation on the matter of freedom. Devoting time to understanding visible impasses which arise in its usage appears vital. Doing so aids in encouraging productive discussions on matters of how states should act, and to what extent a populace should comply with political directives.

As these are discussions central to the maintenance and progression of liberal democratic societies, we should feel motivated to formulate responses in situations where one conception of freedom comes into conflict with another.

The seemingly inevitable inaccuracies embedded within the concept of freedom and the difficulties inherent in the project of discriminating between the emancipatory and the oppressive remain evident across recent political rhetoric and subsequent public response. From 2020, many politicians began using the term freedom to emphasise the need to make present sacrifice for future gain, and to secure safety for vulnerable populations by curtailing the spread of COVID-19. For some, this placed politicians in the camp of the emancipators, working to defend our freedom against a looming viral force. In contrast, those who opposed measures such as lockdowns took the relevant enforcers to be oppressors, acting in total opposition to a treasured freedom of movement and individuated determination.

Both those in favour of and those opposed to lockdowns were seen utilising the term freedom in the public arena, yet freedom to the former necessarily required a certain degree of political intervention, and freedom to the latter firmly required a sovereignty from political reach. This self-oriented sovereignty is one in which freedom is experienced not in our relation to others, but individualistically, through the deployment of free will and a safety from political interference. While these two differing utilisations of freedom are broad and not immutable, they do provide a useful starting point from which to assess contemporary impasses.

Commitment to sovereignty as necessary for a commitment to freedom is not a new position, nor is it reliably misplaced. The individual who decries re-introduced mask mandates, or vaccines being made compulsory in workplaces evidently takes these actions as being incompatible with the maintenance of freedom, and a free society more broadly. Their sovereignty from political interference is necessary for their freedom to persist.

Both historically and contemporarily, many have seen it essential to measure their own freedoms by the degree to which states did not unduly intervene upon realms of education, religion, or health. We know countless instances where political reach has choked public freedoms to undesirable extents. Those in opposition to vaccine mandates, for example, may take freedom to begin wherever politics ends, thinking it best to safeguard their liberties with one hand while defending against political reach with the other.

In contrast, politicians and individuals who deem actions such as vaccine mandates, lockdowns, and the like as necessary for the maintenance of long-term social freedoms are seen as upholding a competing notion of freedom. For this group, politics and freedom are beyond compatible; they are deeply contingent upon one another. On this conception, emancipation from political reach would result in a breakdown of society, where inevitably some personal liberties would be infringed upon by others.

This position of the compatibility between freedom and politics is articulated and advocated for by Arendt. Arendt argues that sovereignty itself must be surrendered if a society is ever to be comprehensively free. This is because we do not occupy earth as individuals, but as communities, moreover, political communities, which have been formed and continue to be maintained due to the freedom of our wills. “If men wish to be free,” she writes, “it is precisely sovereignty they must renounce.”

The point here is not to say that individual rights are of no importance to political systems, or to freedom more broadly. Rather, it suggests that a comprehensive freedom cannot flourish in systems in which individuals remain committed to sovereignty above all else. Freedom is not located within the individual, but rather in the systems, or community, within which an individual operates.

We do not envy the freedom a prisoner possesses to retreat into the recesses of their own mind, we envy the person who is free to leave their home, and is safe in doing so, because a system has been politically and socially established to make it as such.

When debates are being waged over freedom, we must begin with the acknowledgement that we (as individuals) are only ever as free as the broader communities in which we operate. Our own freedoms are contingent upon the political systems that we exist in, actively engage with, and mutually construct.

Assessing the disagreement, or linguistic impasse, which exists between those who take political action as central to securing freedom and those who take freedom to begin where politics ends has certainly not fully allowed us to realise the notion of a square circle to which Arendt alludes. Though from here, we may be better equipped to engage in discourse when we inevitably find one conception of freedom being pitted against another.

We are luckily not resigned to let present linguistic impasses on the matter of freedom mark the end of meaningful discourse. Rather, they can mark the beginning, as we are able to make efforts to rectify impasses that turn on this single word. Importantly, we have more language and words at our disposal, and many methods by which to use them. It is vital that we do.

BY Georgia Fagan

Georgia has an academic and professional background in applied ethics, feminism and humanitarian aid. They are currently completing a Masters of Philosophy at the University of Sydney on the topic of gender equality and pragmatic feminist ethics. Georgia also holds a degree in Psychology and undertakes research on cross-cultural feminist initiatives in Bangladeshi refugee camps.

Social media is a moral trap

Rarely a day goes by without Twitter, Facebook or some other social media platform exploding in outrage at some perceived injustice or offence.

It could be aimed at a politician, celebrity or just some hapless individual who happened to make an off-colour joke or wear the wrong dress to their school formal.

These outbursts of outrage are not without cost for everyone involved. Many people, especially women and people from minority backgrounds, have received death threats for simply expressing themselves online. Many more people have chosen to censor themselves for fear of a backlash from an online mob. And when the mob does go after a perceived wrongdoer, all too often the punishment far exceeds the crime. Targeted individuals have been ostracised from their communities, sacked from their jobs, and in some cases taken their own lives.

How did we get here?

Social media was supposed to unite us. It was supposed to forge stronger social connections. It was meant to bridge conventional barriers like wealth, class, ethnicity or geography. It was supposed to be a platform where we could express ourselves freely. Where did it all go so horribly wrong?

It’s tempting to say that something must be broken, either the social media platforms or ourselves. But in fact, both are working as intended.

When it comes to the social media platforms, they and their owners thrive on the traffic generated by viral outrage. The feedback mechanisms – ‘like’ buttons, comments and sharing – only serve to amplify it. Studies have shown that posts expressing anger or outrage are shared at a significantly higher rate than more measured posts.

In short, outrage generates engagement, and for social media companies, engagement equals profit.

When it comes to us, it turns out that our minds are working as intended too. At least, working as evolution intended.

Our minds are wired for outrage.

It was one of the moral emotions that evolution furnished us with to keep people behaving nicely tens of thousands of years ago, along with other emotions like empathy, guilt and disgust.

We may not think of outrage as being a ‘moral’ emotion, but that’s just what it is. Outrage is effectively a special kind of anger that we feel when someone does something wrong to us or someone else, and it motivates us to punish the wrongdoer. It’s what we feel when someone pushes in front of us in a queue or defrauds an elderly couple of their life savings. It’s also what we feel just about any time we log on to Twitter and look at the hashtags that are doing the rounds.

Well before the advent of social media, outrage served our ancestors well. It helped to keep the peace in small-scale hunter-gatherer societies. When someone stole, cheated or bullied other members of their band, outrage inspired the victims and onlookers to respond. Its contagious nature spread word of the wrongdoing throughout the band, creating a coalition of the outraged that could confront the miscreant and punish them if necessary.

Outrage wasn’t just something that individuals experienced. It was built to be shared. It inspired ‘strategic action’, where a number of people – possibly the whole band – would threaten or punish the wrongdoer. A common punishment was social isolation or ostracism, which was often tantamount to a death sentence in a world where everyone depended on everyone else for their survival. The modern equivalent would be ‘cancelling’.

But take this tendency to get fired up at wrongdoing and drop it on social media, and you have a recipe for misery.

All our minds needed was a platform that could feed them a steady stream of injustice and offence and they quickly became addicted to it.

Another problem with social media is that many of the injustices we witness are far removed from us, and we have little or no power to prevent them or to reform the wrongdoers directly. But that doesn’t stop us trying. Because we are rarely able to engage with the wrongdoer face-to-face, we resort to more indirect means of punishment, such as getting them fired or cancelling them.

In small-scale societies, the intense interdependence of each individual on everyone else in the band meant there were good reasons to limit punishment to only what was necessary to keep people in line. Actually following through with ostracism could remove a valuable member of the community. Often just the threat of ostracism was enough to prevent harmful behaviour.

Not so on social media. The online target is usually so far removed from the outraged mob that there is little or no cost for the mob if the target is extricated from their lives. The cost is low for the punishers but not necessarily for the punished.

Social media outrage isn’t only bad for the targets of the mob’s ire – it’s bad for the mob too. Unlike ancestral times, we now have access to an entire world of injustice about which to get outraged. We even have a word for the new tendency to seek out and overconsume bad news: doomscrolling. This can leave us with an impression that the world is filled with evil, corrupt and bad actors when, in fact, most people are genuinely decent.

And the mismatch between the unlimited scope of our perception, and the limited ability for us to genuinely effect change, can inspire despondency. This, in turn, can motivate us to seek out some way for us to recapture a sense of agency, even if that is limited to calling someone out on Twitter or sharing a dumb quote from a despised politician on Facebook. But what have we actually achieved, except to spread the outrage further?

The good news is that while we’re wired for outrage, and social media is built to exploit it, we are not slaves to our nature. We also evolved the ability to unshackle ourselves from the psychological baggage of our ancestors. That makes it possible for us to avoid the outrage trap.

If we care about making the world a better place – and saving ourselves and others from being constantly tired, angry and miserable – we can change the way we use social media. And this means we must change some of our habits.

It’s hard to resist outrage when we see it, like it’s hard to resist that cookie that you left on the kitchen counter. So put the cookie away. This doesn’t mean getting off social media entirely. But it does mean being careful about whom you follow. If the people you follow are only posting outrage porn, then you can choose to unfollow them. Follow people who share genuinely new or useful information instead. Replace the cookie with a piece of fruit.

And if you do come across something outrageous, you can decide what to do about it. Think about whether sharing it is going to actually fix the problem or whether you’re just seeking validation for your own feelings of fury.

Sometimes there are things we can share that will do good – there’s a role for increasing awareness of certain systemic injustices, as we’ve seen with the #metoo and Black Lives Matter movements. But if it’s just a tasteless joke, a political columnist trolling the opposition or someone who refuses to wear a mask at Bunnings, you can decide whether spreading it further is going to actually make things better. If not, don’t share it.

It’s not easy to inoculate ourselves against viral outrage. Our evolved moral minds have a powerful grip on our hearts. But if we want to genuinely combat injustice and harm, we need to take ownership of our behaviour and push back against outrage.

BY Dr Tim Dean

Dr Tim Dean is Philosopher in Residence at The Ethics Centre and author of How We Became Human: And Why We Need to Change.

Ethics Explainer: Pragmatism

Pragmatism is a philosophical school of thought that, broadly, is interested in the effects and usefulness of theories and claims.

Pragmatism is a distinct school of philosophical thought that began at Harvard University in the late 19th century. Charles Sanders Peirce and William James were members of the university’s ‘Metaphysical Club’ and both came to believe that many disputes taking place between its members were empty concerns. In response, the two began to form a ‘Pragmatic Method’ that aimed to dissolve seemingly endless metaphysical disputes by revealing that there was nothing to argue about in the first place.

How it came to be

Pragmatism is best understood as a school of thought born from a rejection of metaphysical thinking and the traditional philosophical pursuits of truth and objectivity. The Socratic and Platonic theories that form the basis of a large portion of Western philosophical thought aim to find and explain the “essences” of reality and uncover truths that are believed to be obscured from our immediate senses.

This Platonic aim for objectivity, in which knowledge is taken to be an uncovering of truth, is one which would have been shared by many members of Peirce and James’ ‘Metaphysical Club’. In one of his lectures, James offers an example of a metaphysical dispute:

A squirrel is situated on one side of a tree trunk, while a person stands on the other. The person quickly circles the tree hoping to catch sight of the squirrel, but the squirrel also circles the tree at an equal pace, such that the two never enter one another’s sight. The grand metaphysical question that follows? Does the man go round the squirrel or not?

Seeing his friends ferociously arguing for their distinct positions led James to suggest that the correctness of any position simply turns on what someone practically means when they say, ‘go round’. In this way, the answer to the question has no essential, objectively correct response. Instead, the correctness of the response is contingent on how we understand the relevant features of the question.

Truth and reality

Metaphysics often talks about truth as a correspondence to or reflection of a particular feature of “reality”. In this way, the metaphysical philosopher takes truth to be a process of uncovering (through philosophical debate or scientific enquiry) the relevant feature of reality.

On the other hand, pragmatism is more interested in how useful any given truth is. Instead of thinking of truth as an ultimately achievable end where the facts perfectly mirror some external objective reality, pragmatism instead regards truth as functional or instrumental (James) or the goal of inquiry where communal understanding converges (Peirce).

Take gravity, for example. Pragmatism doesn’t view it as true because it’s the ‘perfect’ understanding and explanation for the phenomenon, but it does view it as true insofar as it lets us make extremely reliable predictions and it is where vast communal understanding has landed. It’s still useful and pragmatic to view gravity as a true scientific concept even if in some external, objective, all-knowing sense it isn’t the perfect explanation or representation of what’s going on.

In this sense, truth is capable of changing and is contextually contingent, unlike in traditional views. Pragmatism argues that what is considered ‘true’ may shift or multiply when new groups come along with new vocabularies and new ways of seeing the world.

To reconcile these constantly changing states of language and belief, Peirce constructed a ‘Pragmatic Maxim’ to act as a method by which thinkers can clarify the meaning of the concepts embedded in particular hypotheses. One formulation of the maxim is:

Consider what effects, which might conceivably have practical bearings, we conceive the object of our conception to have. Then, our conception of those effects is the whole of our conception of the object.

In other words, Peirce is saying that the disagreement in any conceptual dispute should be describable in a way which impacts the practical consequences of what is being debated. Pragmatic conceptions of truth take seriously this commitment to practicality. Richard Rorty, who is considered a neopragmatist, writes extensively on a particular pragmatic conception of truth.

Rorty argues that the concept of ‘truth’ is not dissimilar to the concept of ‘God’, in the way that there is very little one can say definitively about God. Rorty suggests that rather than aiming to uncover truths of the world, communities should instead attempt to garner as much intersubjective agreement as possible on matters they agree are important.

Rorty wants us to stop asking questions like, ‘Do human beings have inalienable human rights?’, and begin asking questions like, ‘Should we work towards obtaining equal standards of living for all humans?’. The first question is at risk of leading us down the garden path of metaphysical disputes in ways the second is not. As the pragmatist is concerned with practical outcomes, questions which deal in ‘shoulds’ are more aligned with positing future directed action than those which get stuck in metaphysical mud.

Perhaps the pragmatists simply want us to ask ourselves: Is the question we’re asking, or hypothesis that we’re posing, going to make a useful difference to addressing the problem at hand? Useful, as Rorty puts it, is simply that which gets us more of what we want, and less of what we don’t want. If what we want is collective understanding and successful communication, we can get it by testing whether the questions we are asking get us closer to that goal, not further away.

BY The Ethics Centre

The Ethics Centre is a not-for-profit organisation developing innovative programs, services and experiences, designed to bring ethics to the centre of professional and personal life.

Nothing But A Brain: The Philosophy Of The Matrix: Resurrections

Opinion + Analysis | Relationships, Society + Culture

BY Joseph Earp 26 DEC 2021

It is one of the most iconic scenes in modern cinema: Neo (Keanu Reeves) sits before the sage-like Morpheus (Laurence Fishburne) in a room slathered with shadows, and is offered a choice.

Warning: this article contains spoilers for The Matrix Resurrections

Either he can take the blue pill, and continue his life of drudgery – a digital front, as it turns out, to stop human beings from realising they are nothing but batteries to power a race of vicious machines – or take the red pill, and awaken from what has only been a dream.

The image of this fateful choice has been co-opted by conspiracy theorists, endlessly picked over by film scholars, and referenced in a thousand parodies. But perhaps the most interesting critique of the scene comes from Slovenian philosopher Slavoj Zizek. Why, Zizek asks, is there this binary between the imagined or “fake” life, and the real one? What is the distinction between fantasy and reality; how can one state ever exist without the other? There is no clean separation between the lives we live in our heads, and the so-called external world, no line that we can draw between the artificial and the authentic.

Maybe Lana Wachowski, the director of the newest iteration in the franchise, The Matrix: Resurrections, heard Zizek’s words. Early on in the film, Resurrections re-stages a version of Neo’s fateful choice from the first instalment. But this time the falseness of the choice has been revealed: he is offered only the red pill. The binary between fake and real has been destroyed. Whatever path he takes – whether he comes frightfully into consciousness in his vat of goo, his body tended to by the tendrils of machine, or continues to pad through a life of capitalist turmoil – he is only ever in his own head.

The Cage of Our Own Heads

Solipsism, the belief that only the mind exists – and not any old mind, your mind – has its roots in Cartesian skepticism. It was René Descartes who found himself plagued by a nagging worry: what if everything that he could see, smell, and hear was merely the conjurings of a demon, tricking him into sensations that he could not prove were real? Or, in the language of the Matrix: what if our entire world is a construct, as cage-like and bleak as the containers that cattle are exported to the abattoir in?

Descartes, doubting the existence of reality itself, came to believe that there were only two things one could be sure of. The first, as he famously pronounced, is the existence of at least one mind: “I think therefore I am.” After all, if there wasn’t a mind to wonder about the nature of reality, then there wouldn’t be any wondering about the nature of reality. The other, less frequently discussed foundation of truth that Descartes believed in was the existence of God: if at least one mind exists, then God must have created it, Descartes thought.

Resurrections accepts only the first premise. There is no hope that God might exist out there, in the ether, an entity to pin some sense of certainty upon.

It is a film about being entirely trapped in a subjective experience that you cannot fully verify; held captive in the shaky cage of your own mind.

When we meet Neo, he is seeing a therapist, in recovery from a suicide attempt. The source of his suffering? That he is plagued constantly by memories that don’t seem to belong to him; that he is filled, always, with a nostalgia for a past he is not sure he has even lived; that he is concerned the fictional stories that he tells as a game designer might in fact be as authentic as the desk he sits at, the boss that he serves. Or vice versa: perhaps the desk, the boss, are the entities to be trusted, and the sprawling lines of code that make up the video game are just a joyful illusion.

Neo has no way of verifying the reality of any of these thoughts. They are all just mental constructs, representations that are slowly fed to him for reasons that he cannot fathom, each carried with the same epistemic force. Desperate, he tries to use his therapist, played by Neil Patrick Harris, as his watermark; in what might be fits of paranoid delusion, he calls the man, raggedly trying to work out if he is losing his mind, hoping to dredge apart dreams and the complex mental representation we call “life”.

But as Resurrections later reveals, the therapist is the least trustworthy source that Neo could have turned to. The therapist is not just part of a fantasy that might be a reality, and vice versa: he is its very creator. And his whims, when they are explained at all, are vague and confusing. He is no adjudicator of what is fictive and what is corporeal. He is just one more layer of fantasy-as-reality, and reality-as-fantasy, a mess of whims, and desires, and dreams that exists in two states at once.

Loneliness and Hope

This is, on some level, a comment on our essential loneliness. We might feel as though we are surrounded by people, that there are lives being lived alongside ours. But, Resurrections says, we have no way of understanding the minds of our friends, families, and strangers – they are mysteries to us. Neo’s journey in Resurrections is one of finding a community, the rag-tag group of machines and humans that are hoping for a better world. And yet this community acts in ways he cannot predict; that surprise him. And more than that, he has no way of knowing if they are even real – throughout, he constantly questions whether he has actually awoken, or if he is merely living in complex whorls of fantasy.

But there is hope here too. Resurrections is, amongst other things, a paean to the power of storytelling. Those characters who attempt to dismiss our ability to spin fictions – chiefly the therapist, and the capitalistic Agent Smith, who wants to turn narratives into more products to be sold – are the film’s villains. Its heroes are those who fully embrace the power of the stories that we spin for ourselves, whether they be video games or complex narratives about our own pasts. After all, though it might be bleak to imagine that the external world is always filtered through a shaky subjective experience, that means that our fantasies are as powerful – as life-altering – as anything “real.” The world is forever what we make it.

The Power of Fear and Desire

If we are in total charge of our own destinies, able to spin ourselves into whichever corners that we choose, then what motivates us? After all, if everything is able to be re-written, then what reason do we have for doing any one thing over another?

Total freedom comes with a price, after all; there is a kind of terrible laziness that can descend upon us when we know that we can do whatever we want, a kind of malaise of submission, where, instead of rewriting the world, we sit back, and let it unfurl however it wants.

It is this state that Neo’s fellow video game designers have fallen into, a kind of overwhelming boredom that narrows their scope of possibilities and makes them one more cog in a machine that is completely out of their own control.

But Resurrections has a rebuttal to this laziness. In a key moment in the film, Neo’s therapist explains that the world he has created – the world of the Matrix – is driven, quite simply, by two states. The first is fear; the fear that we will lose what we have, whether that be our minds, in the case of Neo, or our freedoms, in the case of his fellow guerillas. And the second is desire; the world-making force that drives us to move fast, to want more, to continually strive for a different kind of world.

There is a bleak reading to this thesis statement, one that aligns with the philosophy of Baruch Spinoza. Spinoza believed that we are at our least free when motivated by causes outside of our control; when our own striving for perfection, what he called our conatus, becomes putrefied and affected by those around us. After all, if we are petrified by fear, and if our hope for a different world is contingent upon the behaviour of others, then we will perpetually be buffeted around by fictions, by memories, by states that are causally connected to forces outside our control. We will be, simply put, trapped, stuck in the ugly cycles of code that Neo spends the first 20 minutes of Resurrections designing.

But there is still, even here, hope. After all, that fear need not be necessarily painful; that desire need not be necessarily linked to unstable foundations. If we combine the notion that we are only within our own minds, that our fantasies have as much explanatory power as our “realities”, and this cycle of fear and desire, we can begin to understand how we might rewrite everything. We can make of fear and desire as we wish; we can alter and shape the people who we love, and who we dream of.

That is the message encoded in the final shot of the film. Neo and Trinity (Carrie-Anne Moss) have given up on the search for epistemic foundations. They do not kill the therapist who has kept them in the bondage of The Matrix. Instead, they thank him. After all, through his work, they have discovered the great power of re-description, the freedom that comes when we stop our search for truth, whatever that nebulous concept might mean, and strive forever for new ways of understanding ourselves. And then, arm in arm, they take off, flying through a world that is theirs to make.

Ethics in your inbox.

Get the latest inspiration, intelligence, events & more.

By signing up you agree to our privacy policy

You might be interested in…

Opinion + Analysis

Society + Culture, Politics + Human Rights

What happens when the progressive idea of cultural ‘safety’ turns on itself?

Opinion + Analysis

Politics + Human Rights, Relationships

A critical thinker’s guide to voting

Opinion + Analysis

Health + Wellbeing, Relationships

Seven COVID-friendly activities to slow the stress response

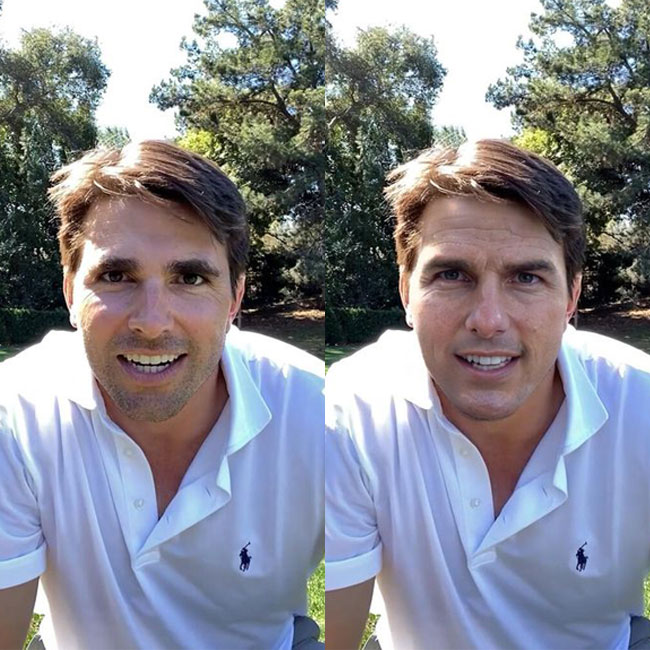

Opinion + Analysis

Relationships, Science + Technology

To see no longer means to believe: The harms and benefits of deepfake

BY Joseph Earp

Joseph Earp is a poet, journalist and philosophy student. He is currently undertaking his PhD at the University of Sydney, studying the work of David Hume.

A parade of vices: Which Succession horror story are you?

Opinion + Analysis | Relationships, Society + Culture

BY Joseph Earp 20 DEC 2021

There is a singular thrill that comes from watching very bad people do very bad things.

The anti-hero has been a staple of modern television and cinema for decades, made popular by Tony Soprano splashing about in a swimming pool with a brace of ducks, taking some much needed “me time” after overseeing a truly astonishing number of murders.

This kind of art might have some therapeutic aspects – it teaches us how not to be, so we might learn how to be – but that’s not its purpose. Its purpose is entertainment, the sick, giddy feeling that comes over us when we watch people throw off the entirely artificial rules of morality, and behave however they want.

Moreover, this kind of art is a way of teaching us the manner in which our moral outlooks are shaped by repetition: habit and practice. When we see someone like Soprano do the same evil things, over and over again, we learn about the compounding nature of vice, the way that one bad action spawns a myriad of others.

No show exemplifies that thrill better than Succession. Its characters are vicious, and in both meanings of the term: each week, they tear each other apart, sacrificing even familial bonds for the sake of victories that almost immediately sour in their mouths. They live in a world that is constantly in the process of ratifying, and, briefly, rewarding them; they are shaped by their wealth, and by the uneasy collective they form with each other, in which power is everything and weakness is to be avoided at all costs.

But this gaggle of do-badders are not alike in their foibles. Each principal member of the cast displays a different vice, and has a different way of working towards the same unpleasant ends. Here is a kind of “pick your horror” list of the show’s central players, outlining each of their worst qualities. Which deviant are you?

Logan Roy: The Happy Capitalist

As Peter Singer noted, capitalism thrives on individuation; the idea that we are made up of communities of one, and that it is always better to sacrifice the well-being of others in order to get ahead. And how better to sum up that belief that you should, at all times, consider yourself the number one priority than the behaviour of Logan Roy? Logan has no loyalty – he will hurt whoever he needs to hurt. He is one of the few purely, uncomplicatedly immoral characters of the show, being openly unremorseful. He is, as Aristotle would put it, in total vicious alignment – he feels no urge to do the right thing, and his behaviours line up perfectly with his moral universe, of which he is the centre.

Kendall Roy: The Coward

Speaking of alignment, the character in Succession whose behaviours are most out-of-sync with their desires is Kendall Roy. Unlike Logan, he is not without remorse. Time and time again, he repents – one of the most affecting moments of the recent season was the man on his hands and knees, saying, in a voice of exhaustion, that he has tried. He suffers from a tension that we all feel, one between moral behaviour and immoral behaviour. He wants to be courageous – that is how he sees himself. But his base level desires, many of which he hasn’t even analysed within himself, are in constant conflict with the globalised outlook he has on his moral character. There is a gulf between how he considers himself in the abstract, and how he actually acts, moment by moment.

The problem, in essence, is that Kendall moves too fast. His decisions come too quickly, and they are guided by his misplaced desires to appease his father and to feed into the pre-existing drama of the family. Iris Murdoch once wrote that we should train ourselves to live a moral life, habituating good action so we can unthinkingly help others when the time comes. When the time comes for Kendall, as it does with insistent regularity, he unthinkingly makes the wrong choice, sacrificing his own systems of values to appease a man who considers him less than dirt. That’s cowardice in its purest form.

Roman Roy: The Casually Cruel

When we think of evil, we tend to imagine oversized portraits of crooked megalomaniacs, stealing candy from babies and kicking the backsides of puppies. But as philosopher Hannah Arendt tells us, evil need not be enacted by larger-than-life villains. Indeed, Arendt believed that vicious behaviour can be performed in a myriad of tiny ways by the most unassuming of individuals. That is Roman Roy to a tee.

Through the series, Roman appears to be nothing more than a happy-go-lucky hedonist, a man filled to the brim with pleasures, who enjoys the finer things in life. But that happiness also extends to the vicious behaviour of himself and of others. He loves suffering and rejoices in the chaos of his family life. His horrors are pulled off with a smiling face, as though they are nothing but briefly disarming attractions, as inconsequential as a county fair.

Shiv Roy: The Manipulator

It was Immanuel Kant who once wrote that we should always treat those around us as ends in themselves, never as means. Kant thought it one of the great immoralities capable of being enacted by human beings for us to see those around us as tools, whose internal lives we need never consider. After all, for Kant, human beings are the creators of value – there is no goodness intrinsic in the world, and it exists only in the eye of the beholder. Try telling that to Shiv Roy. Shiv sees those around her as mere means of getting what she wants, to be used and discarded on a whim – even her husband is one more bridge to be shockingly burnt after she has crossed it.

Not that Shiv is without redemption. Kant also believed that there is always good will: an iron-wrought and rational understanding of the correct thing to do in any moral situation. His was an ethics founded on principles, and Shiv does, despite herself, have those. Take, for instance, her complicated introduction to the world of politics in season three. She is offered what Peter Singer would call the ultimate choice – the option of winning the race against her siblings for her father’s affections, if she endorses a particularly slimy Republican candidate for President. There are, to our surprise – and maybe even to hers – lines that Shiv will not cross. Turns out even the most manipulative of us can find there are things that we simply will not do.

Cousin Greg: The False Innocent

Innocence can have an intrinsic value: it can be good for itself, in itself. But Cousin Greg, Succession’s scheming dope, uses his innocence instrumentally. He presents himself as being the dumbest person in the room, forever in the process of duping others with his blandness. But there is nothing innocent to the way he acts.

His is a vice that comes from its very duplicitousness – he presents himself one way, as though he never quite understands the situation, and then acts very differently in another. It’s proof, if any more was needed, that virtues can be a disguise: we can drape ourselves in the illusion of good behaviour, for nothing but our own benefit.

Tom Wambsgans: The Sycophant

Loyalty is a morally neutral character trait. It can be virtuous, as when we are loyal to our friends, and it can be vicious, as when we unbendingly act in accordance with an evil benefactor. Tom Wambsgans started Succession as one more foot soldier, a buffoon kicked around by forces much greater than him: no wonder he found a twisted kind of kinship with Cousin Greg, another duplicitous fool. But his loyalty to Logan – his unwavering belief that the sole purpose of his life was to be in the good books of the elder Roy – eventually transformed him into something much more nefarious.

Tom is unwavering in his belief system, utterly obsessed with power, and firmly of the opinion, contrary to the writings of Michel Foucault, that it only moves in one direction. Tom wants total power, and he wants it totally. He does not consider, as Foucault did, that the person over whom we hold power also holds power over us. If all of history is a boot stomping on a human face, then that’s Logan’s spit-shined boot, and Tom’s smugly smiling face.

BY Joseph Earp

Joseph Earp is a poet, journalist and philosophy student. He is currently undertaking his PhD at the University of Sydney, studying the work of David Hume.

How can we travel more ethically?

Opinion + Analysis | Climate + Environment, Health + Wellbeing

BY Simon Longstaff 14 DEC 2021

I used to be an inveterate traveller – so much so that I would take, on average, a minimum of two flights per week. That is no longer the case.

I have trouble recalling the last time I was on an aeroplane. That will change this week, when I board a flight for Tasmania – my third attempt, in two years, to join family there. So, is this the beginning of a return to a life of endless travel – both at home and abroad? Or will a trip to the airport continue to be a relatively rare experience?

Of course, such questions apply to all modes of transport – whether they be by road, rail, air or sea. Perhaps we have become conditioned to think that the answers lie in the hands of public health officials and government ministers who, between them, can stop us in our tracks.

However, every journey begins with us – with a personal decision to travel. So, what should we take into account when making such a decision?

Perhaps one of the most important considerations is to do with who bears the burden of our decision. A 2018 article published in Nature Climate Change estimated that tourism contributes to 8% of the world’s climate emissions, with those emissions likely to grow at the rate of 4% per annum. Of these emissions, 49% were attributable to transport alone. And this was just tourism.

One also needs to add to this the emissions of people, like me, largely travelling for reasons of work rather than leisure. One of the effects of the current global pandemic has been to reduce, to a massive degree, the level of emissions caused by travel. This is welcome news to those most likely to be affected by predicted changes caused by anthropogenic warming – notably those living on low-lying islands and others whose lives (and livelihoods) are at risk of devastating harm.

Yet, it’s easy enough to find advertisements enticing us to travel to the very same low-lying islands whose economies rely on tourism. The paradoxes don’t end there. Another criticism of tourism is that it tends to commodify the cultures of those most visited – and in some cases does so to the point of corruption. Those who mount this criticism argue that we should value the diversity of ‘pristine’ cultures in the same way that we value pristine diversity in nature. Retaining one’s culture free from influences from the wider world is not necessarily the choice that people living within those cultures would make for themselves. Some hold fast to an unsullied form of life. Others are keen to share their experience with the world – not only as a source of income, but also out of a very human sense of curiosity and a desire to share the human experience.

To make matters even more complex, not all travel is for reasons of leisure or work. It is sometimes driven by necessity or a sense of obligation to others, such as when joining a loved one who is sick or dying, or to attend a family ceremony. Even those obliged to travel have, of late, been asked to ‘think twice’ or, in some cases, had the decision taken out of their hands due to border closures that have allowed few (if any) exemptions. And even those few exemptions tend to have been offered only to the very rich, powerful or popular.

This might seem to suggest that we should calibrate our decisions about travel according to its purpose. If one can achieve the same outcome by other means (such as using a video conference rather than meeting in person), then that should be the preferred option. However, I have discovered that this approach only gets you so far (excuse the pun). Some meetings only really work if people are in the same room – especially if the issues require nuanced judgement. And there are some obligations (like those owed to a sick or dying relative) that can only be discharged in person.

Despite this, neither the causes of climate change nor life-threatening viruses have any regard for our motives for travelling. A virus can just as easily hitch a ride with a person rushing to the side of an ailing parent as with a person off to relax on a beach. All other things being equal, both journeys have the same impact on the environment.

Given this, how should we approach the ethical dimension of travel?

First, I think we need to be ‘mindful travellers’. That is, we should not simply ‘get up and go’ just because we can. We need to think about the implications of our doing so – including the unintended, adverse consequences of whatever decision we make.

Second, we should seek to minimise the unintended, adverse consequences. For example, can we choose a mode of travel that has minimal negative impact? It is this kind of question driving people to explore new forms of low-carbon transport options.

Third, can we mitigate unintended harm – for example, by purchasing offsets (for carbon) or looking to support Indigenous cultures where we might encounter them?

Finally, can we maximise the good that might be done by our travelling?

BY Simon Longstaff

Simon Longstaff began his working life on Groote Eylandt in the Northern Territory of Australia. He is proud of his kinship ties to the Anindilyakwa people. After a period studying law in Sydney and teaching in Tasmania, he pursued postgraduate studies as a Member of Magdalene College, Cambridge. In 1991, Simon commenced his work as the first Executive Director of The Ethics Centre. In 2013, he was made an Officer of the Order of Australia (AO) for “distinguished service to the community through the promotion of ethical standards in governance and business, to improving corporate responsibility, and to philosophy.” Simon is an Adjunct Professor of the Australian Graduate School of Management at UNSW, and a Fellow of CPA Australia, the Royal Society of NSW and the Australian Risk Policy Institute.

Why you should care about where you keep your money

Opinion + Analysis: Business + Leadership

BY Jack Thompson 13 DEC 2021

Most of us try to do the right things in life.

From a young age we’re taught to treat people and the environment with respect, being conscious of the impact our actions have on the world around us. We’re often told of the importance of minimising single-use plastics or buying fair-trade products and, for some, these can be really important ways to spend our money consciously.

While we can spend our money on things that resonate with our personal values and the type of world we want to see in the future, we also need to consider the impact of the banks we support. As conscious consumers, we can sometimes forget the one simple thing that can make a bigger difference in this world than most of our other monetary choices – our bank.

From the factory our shoes were made in, to the houses we want to buy and the energy that powers them, everything in this world costs money. But where does this money come from? Most finance tends to come from banks and other financial institutions. And it’s your choice of financial institution that actually influences where that money goes.

Sometimes we can feel as though we don’t have the power to change things, that we are part of a larger system and people in positions of power behave in a way that conflicts with our own values and principles. But as individuals, we can make a difference.

Where we choose to put our money matters. And as Liza Minnelli so eloquently puts it – money makes the world go round.

Most of us probably still have the same bank account we had as a kid. There seems to be this combination of taboo and apathy that makes talking about money, frankly, a bit awkward. It shouldn’t be, and it’s a conversation that we should have more openly. We have choice and agency when it comes to where we bank, and finding an institution that aligns with our values and principles is really important.

There’s something tragic and ironic about spending so much effort as conscious consumers to live a more sustainable life, only to have our bank, and inadvertently our money, undo all our hard work by funding, for example, a battery hen farm. It’s a moral contradiction that is very real and needs our attention.

To illustrate this point more clearly, a recent survey by the Lowy Institute found that roughly 6 in 10 Australians believe climate change is a very serious issue that needs to be dealt with. Despite this majority opinion, Australian banks have lent more than $44B to the fossil fuel industry since the 2015 Paris Agreement (to limit global warming to 1.5°C), with more than $8.9B coming in 2020 alone. Overlay the fact that the four major banks in Australia hold more than 80% market share and we can immediately see that there’s a strong moral contradiction at play.

As we become more aware of the issues that matter to us, it’s important to ask the question – is the money in my bank contributing to the problem or helping to solve it?

Banks can create a lot of good, but they can also create a lot of bad. For example, they can use their customers’ money (our money) and the interest paid to them on loans to help move young people with disabilities out of nursing homes and into purpose-built disability accommodation. Equally, they can provide funding to gambling companies to open new facilities.

There are plenty of examples on both sides and it can be complicated and difficult to find out what your institution is doing (and it’s most likely a combination of both). But we do have the power to ask. There are also websites like MarketForces.org.au and Dontbankonthebomb.com that provide information that can assist in our decision-making.

We all need to realise the responsibility and opportunity that we have as customers of these large financial institutions. They need our money and our loyalty to operate at the scale they desire. We can use our power as customers to hold them to account and to drive change that positively affects people and the environment.

If there’s one thought to leave you with, it’s that what you choose to do with your money will have an impact on what the world looks like in 20 years. Where are you going to put your money?

Jack Thompson is a Banking and Finance Oath Young Ambassador. If you’re interested in taking the Oath, visit www.bfo.org.

BY Jack Thompson

Jack is a purpose-driven marketer currently working in finance [and proud pawrent to two groodles]. He is also a Banking and Finance Oath Young Ambassador.

Of what does the machine dream? The Wire and collectivism

Opinion + Analysis: Politics + Human Rights, Relationships, Society + Culture

BY Joseph Earp 10 DEC 2021

This week, a group of more than a dozen Rohingya refugees launched a civil suit against Facebook, alleging that the social media giant was responsible for spreading hate speech.

The victims of an ongoing military crackdown in Myanmar, the refugees claimed not merely that Facebook allowed users to express their anti-Rohingya views, but that Facebook radicalised users – that, in essence, the platform changed beliefs, rather than merely providing a conduit to express them.

The suit is, in many ways, the first of its kind. It targets the manner in which systems – whether they be social media giants, video streaming sites like YouTube, or the myriad of bureaucracies that we all engage with in one way or another almost every day – warp and change beliefs.

But what if the suit underestimates the power of these systems? What if it’s not merely that social and financial enterprises alter beliefs, but that these enterprises have belief sets entirely of their own? More and more, as capitalism continues to ratify itself, we are finding ourselves swept up in communities that operate on the basis of desires that are distinct from the views of any one member of those communities. We are all part of a great, groaning machinery – and it doesn’t want what we want.

Pawns in a Game

There is a key sequence in David Simon’s critically adored television series The Wire that sums up this perspective perfectly. In it, three young men, all of them members of a rickety enterprise of crime, find themselves playing chess. The least experienced man does not understand the game – how, he wants to know, does he get to become the king? He doesn’t, the most experienced man explains. Everyone is who they are.

Still, the younger man wants to know, what about the pawns? Surely when they reach the other side of the board, and get swapped out for queens, they have made it – they have beaten the system. No, the experienced man explains. “The pawns get capped quick,” he says, simply.

There is a deep, sad irony to the scene: the three men are all pawns. They have no way of beating the system. They will not even live to become queens. When one of them dies a few episodes later, shot to death by his friend, there is a grim finality to the murder. He did, as expected, get capped quick.

This is the focus of The Wire – the observation that members of any community are expendable when weighed against the desires of that community. The game of chess is bigger than any of the pawns could imagine, a system with its own rules that they are merely contingent parts of. And so it goes with the business of crime.

Not only crime, either. The genius of The Wire is the way that it draws parallels between those who operate outside the law, and those who uphold it. The cops who spend the series cracking down on the drug trade are also pawns, in their way: lowly members of a system that they are utterly unable to change. No matter which side of the law you fall on, you will find yourself submerged in bureaucracy, The Wire says – in the machinations of a vast system of power relations with a goal to constantly perpetuate itself, at your expense.

These are the systems that Sigmund Freud wrote of in his seminal work, Civilization and Its Discontents. For Freud, there is an essential disconnect between the desires of individuals and the desires of the social communities that they unwillingly become a part of. There are things afoot that are bigger than any of us.

Bureaucracies are not the sum total of the desires and beliefs of the members of those bureaucracies. These systems have a life – a value set – entirely of their own.

The Game Never Changes

If that is the case, then how does change occur? The Wire offers only dispiriting answers. The show’s idealists – renegade cop Jimmy McNulty, rogue crime boss Omar Little – either find themselves subsumed by the system that lords over them or eliminated. There is a hopelessness to their rebellion. They uselessly throw themselves into the path of a giant piece of machinery, hoping that their mangled bodies slow the inevitable march of progress.

It doesn’t work. Those who thrive are those who give themselves over entirely to the system, who align their values perfectly with the values of their community and embrace their own insignificance. Snoop, the show’s most hideous and intimidating villain, is a happy pawn, one who has never once considered changing the rules of the game that will send her, too, into an early, dismal grave.