Who does work make you? Severance and the etiquette of labour

BY Joseph Earp 1 AUG 2022

There are certain things that we choose to tell the people we work with, and certain things we choose not to.

You come in on a Monday, and you stand around the coffee machine (the modern-day equivalent of the water cooler), and somebody asks you: “So, what did you get up to this weekend?”

Then you have a choice. If you fought with your partner, do you tell your colleague that? If you had sex, do you tell them that? If your mother is sick, or you’re dealing with a stress that society has broadly considered too “intimate” to reveal, do you say something? And if you do, do you change the nature of the work relationship? Do you, in a phrase, “freak people out”?

These social conditions – norms, established and maintained by systems – are not specific to work, of course. Most spaces that we enter into and share with other people have an implicit code of conduct. We learn these codes as children – usually by breaking the rules of the codes, and then being corrected. And then, for the rest of our lives, we maintain these codes, often without explicitly realising what we are doing.

There are things you don’t say at church. There are things you do say in a therapist’s office. This is a version of what is called, in the world of politics, the “Overton Window”, a term used to describe the range of ideas that are considered “normal” or “acceptable” to be discussed publicly.

These social conditions are formed by us, and are entirely contingent – we could collectively decide to change them if we wanted to. But usually – at most workplaces, importantly not all – we don’t. Moreover, these conditions override other considerations, such as honesty. It doesn’t matter that some of us spend more time around our colleagues than around those we call our partners. This decision about what to withhold in the office is frequently described as a choice about “professionalism”, which is usually a code word for “politeness.”

Severance, the new Apple television show which has been met with broad critical acclaim, takes the way that these concepts of professionalism and politeness shape us to its natural endpoint. The sci-fi show depicts an office, Lumon Industries, where employees are implanted with a chip that creates “innie” and “outie” selves.

Their innie self is their work self – the one who moves through the office building, and engages in the shadowy and disreputable jobs required by their employer. Their outie self is who they are when they leave the office doors. These two selves do not have any contact with, or knowledge of each other. They could be, for all intents and purposes, strangers, even though they are – on at least one reading – the “same person.”

The chip is thus a signifier for a contingent code of social practices. It takes something that is implicit in most workplaces, and makes it explicit. We might not consider it a “big deal” when we don’t tell Roy from accounts that, moments before we walked in the front door of the office, we had a massive blow-up over the phone with our partner, even though telling him might help Roy understand why we are so “tetchy” this morning. But it is, in some ways, a practice that shapes who we are.

According to the social practices of most businesses, it is “professional” – as in “polite” – not to, say, sob openly at one’s desk. But what if we want to sob? When we choose not to, we are being shaped into a very particular kind of thing, by a very particular form of etiquette which is tied explicitly to labour.

And because these forms of etiquette shape who we are, they also shape what we know. This is the line pushed by Miranda Fricker, a leading feminist philosopher and pioneer in the field of social epistemology – the study of how we are constructed socially, and how that feeds into how we understand and process the world.

For Fricker, social forces alter the knowledge that we have access to. Fricker is thinking, in particular, about how being a woman, or a man, or a non-binary person, changes the words we have access to in order to explain ourselves, and thus how we understand things. That access is shaped by how we are socially built, and when we are blocked from access, we develop epistemic blindspots that we are often not even aware that we have.

In Severance, these social forces that bar access are the forces of capitalism. And these forces leave the characters’ lives swamped with blindspots. Mark, the show’s hero, has two sides – his innie, and his outie. Things that the innie Mark does hurt and frustrate the desires of the outie Mark.

Both versions of him have such significant blindspots that these “separate” characters are actively at odds. Much of the show’s first few episodes see these two separate versions of the same person having to fight, and challenge one another, with innie Mark striving for victory over outie Mark.

The forces of etiquette are always for the benefit of those in power. We, the workers at certain organisations, might maintain them, but their end result is that they meaningfully commodify us – make us into streamlined, more effective and efficient workers.

So many of us have worked a job that has asked us to sacrifice, or shape and change, certain parts of ourselves, so as to be more “professional”. This is a way of saying that these jobs have turned us into vessels for labour, emphasising the parts of us that increase productivity, and snipping off the parts that do not.

The employees of Lumon live sad, confused lives full of pain, riddled with hallucinations. The benefit of the code of etiquette is never to them. They get paid, sure. But they spend their time hurting each other, or attempting suicide, or losing their minds. Their titular severance helps the company, never them.

This is what the theorist Mark Fisher refers to when he writes about the work of Franz Kafka, one of our greatest writers when it comes to the way that politeness is weaponised against the vulnerable and the marginalised. As Fisher points out, Kafka’s work examines a world in which the powerful can manipulate those whom they rule and control through the establishment of social conduct: polite and impolite; nice and not nice.

Thus, when the worker does something that fights back against their having become a vessel for labour, the worker can be “shamed”, the structure of etiquette used against them. This happens all the time in the world of Severance. As the season progresses, and the characters get involved in complex plots that involve both their innie and outie selves, the threat is always that the code of conduct will be weaponised against them, further stripping down their personalities and turning them into ever more of a vessel.

And, as Fisher again points out, because these systems of etiquette are for the benefit of the powerful, the powerful are “unembarrassable.” Because they are powerful – because they are the employer – whatever they do is “right” and “correct” and “polite.” Again, the rules of the game are contingent, which means that they are flexible. This is what makes them so dangerous. They can be rewritten underneath our feet, to the benefit of those in charge.

Moreover, in the world of the show, the characters “choose” to strip themselves of agency and autonomy, because of the dangling carrot of profit. This sharpens the satirical edge of Severance. It’s not just that the snaking rules of the game that we talk about when we talk about “good manners” make them different people. It’s that the characters of the show submit to these rules. They themselves maintain them.

Nobody’s being “forced”, in the traditional sense of that word, into becoming vessels for labour. This is not the picture of the worker in chains. They are “choosing” to take the chip, and to work for Lumon. But are they truly free? What is the alternative? Poverty? And what, actually, makes Lumon so different? A swathe of companies have these rules of etiquette. Which means a swathe of companies do precisely the same thing.

This is a depressing thought. But the freedom from this punishment lies, as it usually does, in the concept of contingency. Etiquette enforces itself; it punishes, through social isolation and exclusion, those who break its rules.

But these rules are not written on a stone tablet. And the people who are maintaining them are, in fact, all of us. Which means that we can change them. We can be “unprofessional.” We can be “impolite”. We can ignore the person who wants to alter our behaviour by telling us that we are “being rude.” And in doing so, we can fight back against the forces that want to make us one kind of vessel. And we can become whatever we’d like to be.

BY Joseph Earp

Joseph Earp is a poet, journalist and philosophy student. He is currently undertaking his PhD at the University of Sydney, studying the work of David Hume.

We are being saturated by knowledge. How much is too much?

BY The Ethics Centre 28 JUL 2022

We’re hurtling into a new age where the notion of evidence and knowledge has become muddied and distorted. So which rabbit hole is the right one to click through?

Our world is deeply divided, and we have found ourselves in a unique moment in history where the idea of rational thought seems to have been dissolved. It’s no longer as clear-cut what is right and just to believe. So what does it mean to truly know something anymore?

According to Emerson, the number one AI chatbot in the world, “rational thought is the process of forming judgements based on evidence”, which sounds simple enough. It’s easy to form judgements based on evidence when the object of judgement is tangible: this cheese pizza is delicious; a cat wearing glasses and playing a keyboard is funny. These are ideas on which people from all sides of the political spectrum can come to some form of agreement (with a little friendly debate).

But what happens when the ideas become a little more lofty? It’s human nature to believe that our own ideas are rational, well reasoned, and based on evidence. But what constitutes evidence in these information-rich times?

So where do we even begin? Which news is the real news? And what is the responsible way to respond when someone as intelligent as you, in possession of as much evidence as you, has reached a different conclusion?

Philosopher Eleanor Gordon-Smith suggests: “There are many, many circumstances in which we decline to avail ourselves of certain beliefs or certain candidate truths because we’re being very Cartesianly responsible and we’re declining to encounter the possibility of doubt. And meanwhile, those of us who are sort of warriors for the enlightenment of being very epistemically polite and trying to only believe those things that we’re allowed to do so on. The conspiracy theorists, meanwhile, consider themselves a veil of knowledge, which then they deploy in a very different way.”

Who gets to be smart?

Before the Enlightenment, the common man had no real business in the pursuit of knowledge; knowledge was very fiercely guarded by the Church and the Crown. But once it dawned on those honest folk that they could in fact have knowledge, it almost became a moral imperative to seize it, regardless of the obstacles.

Nowadays avoiding knowledge is impossible. Access to information has been wholly democratised; if you’ve got a smartphone handy, any Google search will result in billions of possible pages and answers. It’s now your choice to determine which rabbit hole is the right one to click. To the untrained eye, there’s no way to differentiate between thoroughly researched, fact-checked information and fake news. And according to Eleanor Gordon-Smith, it is this saturation of knowledge that has come to define our generation. So much so that the value of knowledge “has been subject to a kind of inflation”.

She asks, “how do you restore the emancipatory potential of knowledge that the Enlightenment founders saw? How do you get back the kind of bravery, the self development, the political resistance, the independence in the act of knowing in this particular moment…is there a way to restore the bravery and the value of knowledge in an environment where it’s so cheap and so readily available?”

Philosopher Slavoj Žižek suggests that perhaps our mistake is putting the Enlightenment on a pedestal, and that those involved in the Enlightenment’s pursuit of knowledge mischaracterised those who came before them as naive idiots. “Modernity is not just knowledge. It’s also on the other side, a certain regression to primitivity. The first lesson of good enlightenment is don’t simply fight your opponent. And that’s what’s happening today.”

Perhaps ignorance really is bliss

In the age of disinformation, misinformation and everything in between, admitting you don’t know something almost feels like an act of rebellion. American philosopher Stanley Cavell boldly asked, “How do we learn that what we need is not more knowledge but the willingness to forgo knowledge?” (It’s worth noting that this line of questioning saw his publications banned from a number of American university libraries.)

Žižek suggests that sometimes it is better to be ignorant, because “true knowledge hurts. Basically, we don’t want to know too much. If we get to know too much about it, we will objectivise ourselves, we will lose our personal dignity, so to retain our freedom it’s better not to know too much.”

Žižek, however, admits that we are in the middle of the fight. We are not at the end of the story, and we cannot afford a retroactive view along the lines of: who cares, what is done is already done? “Philosophers have only interpreted the world, we have to change it. We tried to change the world too quickly without really adequately interpreting it. And my motto would have been that 20th century leftists were just trying to change the world. The time is also to interpret it differently. That’s the challenge.”

When the AI chatbot Emerson is asked, “How can you know if something is true?”, it responds, “Truth is a matter of opinion.” Given these tumultuous and divided times we are living through, there is perhaps no truer statement.

To delve deeper into the speakers and themes discussed in this article, tune in to FODI: The In-Between – The Age of Doubt, Reason and Conspiracy.

BY The Ethics Centre

The Ethics Centre is a not-for-profit organisation developing innovative programs, services and experiences, designed to bring ethics to the centre of professional and personal life.

Is debt learnt behaviour?

Debt means different things to different people. While some are confident juggling huge amounts of debt spread across a few credit cards, others start hyperventilating at the mere notion of paying a bill a day late.

Debt is also intrinsically linked to our emotional state of mind. Purchasing a new outfit using Buy now, pay later services might trigger an immediate dopamine hit, and leave the trouble of those four pesky payments to a future version of yourself. Or, on a larger scale, buying an apartment means taking on the biggest debt most people will carry in their lifetime, but it also marks a momentous life milestone.

Why is debt so emotional? And what hidden psychological forces shape our attitudes and relationships towards it?

Keep it in the family

Our attitudes towards debt are largely inherited from our family, according to Jess Brady, a financial advisor at Fox and Hare Financial and founder of online community Ladies Talk Money. Brady says debt is not just numbers on a spreadsheet, but rather a complex emotional relationship informed by how we saw our families and friends interact with their finances when we were kids. “It might be shaped by parents separating and having to move from middle class life, to potentially a period where things became really tight from a monetary perspective. And so now, fear and insecurity drive decision making in your money, beliefs or behaviour.”

“It might be that you watched your parents make reckless decisions, which has made you quite fearful about making any decisions. Or quite the opposite: that you’re used to having a lot of money and a lot of freedom, meaning that you spend money without really considering what the consequences are. So often it is what we did or didn’t see in a home life environment.”

What’s clear is that there’s no rulebook when it comes to debt and financial management. Whether you’ve grown up with examples of responsible spending or not, the moment you get your first job and your own bank account, you’re on your own, which is why Brady thinks it’s important to supercharge your financial literacy.

“We wrap so much shame and guilt around debt,” Brady says. If we’re going to start normalising conversations about money, then accepting and reflecting on the decisions that got you to this point is a valuable lesson.

Jess Brady’s key financial messages for getting ahead of debt and improving financial literacy are:

- Stop identifying as someone who is “bad with money”: this negative self-talk creates a belief system around excusing bad behaviour.

- The buck stops with you: don’t offload large financial decisions onto others whether that be a partner or a parent.

- Working 9-5: Take responsibility for your own income and embrace the mantra “I decide where and how to spend my own money.”

It’s all about the sell

For some, accumulating debt can feel like sacrificing freedom, while for others it’s exactly the opposite. For a lot of people debt is an opportunity: the promise of more, of being one step closer to your dreams. Our differing perceptions of taking on debt have a lot to do with how it is marketed.

Taking on debt to go to university or to buy a car or an apartment is generally seen as responsible debt, associated with big life milestones. But debt is no longer just about buying your dream home, or taking out a credit card for the frequent flyer points. It’s about wanting a new pair of shoes and, thanks to Buy now, pay later services, getting them immediately.

According to Adam Ferrier, a behavioural psychologist and co-founder of Sydney-based advertising agency Thinkerbell, money is marketed with a sledgehammer. “Money used to be marketed by a promise of aspiration. But it feels like that aspirational side of money has been chipped away at, and it’s almost a bit gauche to promise an aspirational lifestyle with money. Debt in this country is marketed very much as an issue and something that you have to get out of and create a sense of urgency, often targeting the less financially literate people in the marketplace.”

But not all debt is created equal, and it’s the rise of Buy now, pay later type debts amongst the younger generations that has a number of financial advisors and writers concerned. According to Jonathan Shapiro, journalist and author of Buy Now, Pay Later: The Extraordinary Story of Afterpay, these services didn’t exactly set out to be unethical. “I think what’s happened is that we convinced ourselves that they are providing some sort of a win-win solution and they are of the belief that something so good and so popular cannot be bad.”

The introduction of companies like Afterpay to the financial lending market means it’s never been easier to fall into the red. And because they are cleverly marketed as payment services rather than lenders, they have largely dodged regulation, which has attracted heavy ethical scrutiny.

“A lot of its success is built around a behavioural hack. If something is $100, it might intimidate a consumer. But if it’s leading to $25 payments over six weeks, it makes it more palatable.”

The danger of these services is that consumers may spend far more than they originally intended, because a large price tag divided over six weeks feels more manageable. “It’s put the burden on consumer groups to educate themselves. Those who use Afterpay need to be mindful of the risks of booting up a debt trap. Now they might not fall into a debt trap in the same way someone using a credit card might. But what tends to happen is Buy now, pay later users that have overextended themselves sign up for a myriad of other providers, or they stop paying other bills that are more important.”

What’s important is that we begin to normalise conversations about money, about investments, and about debt. We’re living in a time where the way debt is marketed is shifting dramatically, so improving our financial literacy is imperative: critical thinking, and understanding what’s right for us, have never felt more important.

Life and Debt is available to listen to on Spotify and Apple Podcasts.

This podcast is a project from the Young Ambassadors in The Ethics Centre’s Banking and Finance Oath initiative. Our work is made possible by donations including the generous support of Ecstra Foundation – helping to build the financial wellbeing of Australians.

BY The Ethics Centre

The Ethics Centre is a not-for-profit organisation developing innovative programs, services and experiences, designed to bring ethics to the centre of professional and personal life.

Sex ed: 12 books, shows and podcasts to strengthen your sexual ethics

BY Eleanor Gordon-Smith 18 JUL 2022

Anyone who has sat through a sex ed class at school or in the workplace knows how difficult it is to discuss sexual ethics.

From puberty and relationships to consent and self-expression, our sexual experiences are so varied that it’s no small feat for our education to accommodate them all.

Here are 12 of my favourite books, TV shows and podcasts that thoughtfully consider the ethics around sex:

Tomorrow Sex Will Be Good Again by Katherine Angel

A critically-acclaimed analysis of female desire, consent and sexuality, spanning science, popular culture, pornography and literature.

The Right to Sex by Amia Srinivasan

A whip-smart contemporary philosophical exploration of how morality intersects with sex, particularly whether any of us can have a moral obligation to assist another’s sexual fulfilment.

I May Destroy You

British dark comedy-drama television series tracing the impact of sexual assault on memory, self-understanding, and relationships, and especially other sexual desires and expectations.

Disgrace by J.M. Coetzee

Multi-award-winning novel tracing misogyny, consent, power and indifference as they play out in one professor’s actions and family in a divided South Africa.

Love and Virtue by Diana Reid

Reid’s debut novel explores Australian college life and accompanying issues of consent, class, feminism and institutional privilege.

Sex Education

British comedy-drama series centring on the experience of adolescent sex education, thoughtful and nuanced around issues of consent, puberty, betrayal and love.

The Argonauts by Maggie Nelson

A memoir and series of ethical reflections weaving personal and detailed sexual experience together with gender and the family unit.

Masters of Sex

American drama exploring the research and relationship of William Masters and Virginia Johnson Masters and their pioneering scientific work on human sexuality.

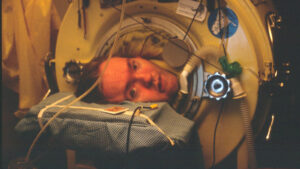

On Seeing a Sex Surrogate by Mark O’Brien

Photo courtesy of Jessica Yu

A short personal memoir about disability and sexual expression, through the particular experience of seeing a sexual surrogate.

Fleabag

British television series exploring sex, infidelity, ageism and how casual sexual identity joins up with the rest of a person’s identity.

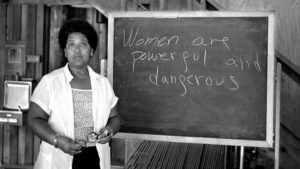

Uses of the Erotic: The Erotic as Power, an essay by Audre Lorde

A beautiful series of literary reflections on the power of the erotic, along with an exploration of why it is kept hidden, private, and denied, especially to particular groups.

Do the right thing from Little Bad Thing

From the podcast Little Bad Thing, about the things we wish we hadn’t done. This episode features a thoughtful conversation about the aftermath of assault, choices and healing.

BY Eleanor Gordon-Smith

Eleanor Gordon-Smith is a resident ethicist at The Ethics Centre and a radio producer working at the intersection of ethical theory and the chaos of everyday life. Currently at Princeton University, her work has appeared in The Australian, This American Life, and in a weekly advice column for Guardian Australia. Her debut book “Stop Being Reasonable”, a collection of non-fiction stories about the ways we change our minds, was released in 2019.

8 questions with FODI Festival Director, Danielle Harvey

BY The Ethics Centre 12 JUL 2022

After a two-year hiatus, the Festival of Dangerous Ideas (FODI) is returning live and unfiltered to Sydney from 17–18 September at Carriageworks.

Ahead of the eleventh festival’s program release, we sat down with Festival Director, Danielle Harvey to get a sneak peek into the 2022 program and what it takes to create Australia’s original disruptive festival.

Our world has so rapidly changed over the past few years. How do you determine what makes an idea truly dangerous in this climate?

The thing with dangerous ideas is that they react and change with what’s going on in the world. When we consider FODI programming we always look to talk about the ideas that perhaps we’re not addressing in the mainstream media enough: the quiet, wicked ideas that will be snapping at our heels before we know it!

FODI is about creating a space for unconstrained enquiry, for both audiences and speakers, so we aim to find different ways of talking about things: a different perspective or angle, whether that comes from putting people from different backgrounds or disciplines together or from encouraging speakers to push their idea as far as it could possibly go.

The 2022 program will explore an ‘All Consuming’ theme. How do you feel this reflects our world at the moment?

This year’s theme responds to and critiques an age consumed by environmental disaster, disease, war, identity, political games and the 24/7 digital news cycle.

It considers the constant demands for our attention and our own personal habits formed to deal with an avalanche of information, opportunity, and distraction. In an all-consuming time where there is so much vying for our attention, what exactly should we give it to?

How do you ensure a balance of ideas when putting a program together?

Our team has such a range of diverse roles and practices that provide us access to a very complementary range of experts across the arts, academia, business and politics. Some of us have worked together for over a decade which has meant we’ve developed an enduring dialogue that facilitates building a program in an exciting and agile manner.

After 10 festivals, we also have a fabulous and engaged speaker alumni network who keep us informed of any interesting developments in their respective fields.

FODI has been dubbed Australia’s original disruptive festival. What is it about disruption that makes it important to base a festival around?

Progress happens when we are bold enough to interrogate ideas — when we’re able to have uncomfortable conversations and be unafraid to question the status quo. Holding the space open for critique without censure is incredibly important. It’s your choice if you come, if you want to sit in the uncomfortable, if you want to be curious about the world around you.

This year the festival will be held at Carriageworks. How does the festival align with this choice of site?

FODI has been privileged to have been housed in so many iconic Sydney venues, from Sydney Opera House to Cockatoo Island and Sydney Town Hall. Carriageworks is FODI’s new home, one that has empowered us to be bolder and provides a fantastic canvas for creating a truly ‘All Consuming’ experience for audiences, speakers and artists.

How would you reflect on the festival’s journey over the past few years to where it is now?

Obviously, coming out of COVID in the last couple of years, we’ve had some time to reflect, and perhaps the 2020 theme ‘Dangerous Realities’ was a little too prophetic! During that time we were one of the first festivals to turn that program digital, which aired over one weekend. We ended up having 10,000 people tune in live and then another 15,000 in the few days after. I don’t think I’ve really seen any other festival move online and get those sorts of numbers that quickly. It’s a real credit to the FODI team and audiences.

Then in 2021 we embarked on a special audio project, ‘The In-Between’, which saw us pair unlikely people together to have a conversation about what this moment (pandemic, global power shifts, social shifts) might mean. That more open questioning was really enlightening, with some feeling afraid, as if it were the end of an era, while others were more hopeful or unconvinced that it is anything new at all.

We’ve also been mining our FODI archive and releasing many of those talks as podcast episodes, which now have over 165,000 listens globally. It’s been fantastic to be reminded of how eerily relevant so many of these ideas were and how often we should look to the past in order to look forward.

As a result of our great digital programming and on-demand content, we’ve now got a huge extended audience, and it’s been a joy to engage with people who weren’t physically able to join us previously.

What’s the most dangerous idea out there for you right now?

For the past two years we’ve been told that being with other humans is one of the most dangerous things you can do! So, I’m excited to see us come together, in person, this year and connect in a festival setting.

And finally, what are you most excited to see at FODI this year — what do you think sets this year’s program apart from others?

2022 heralds a return to public gatherings. We’re thrilled to support the arts and a return to live events and cultural activity after a very challenging time for so many in NSW and nationally.

I’m excited by bringing so many international speakers to Sydney, to hear some deep global analysis and different voices. The experiential elements we are planning will also provide another fabulous reason to get out of the house and back into our unique festival setting.

The Festival of Dangerous Ideas returns 17–18 September 2022. Program announcement and tickets on sale in July. Sign up to festivalofdangerousideas.com for latest updates.


BY The Ethics Centre

The Ethics Centre is a not-for-profit organisation developing innovative programs, services and experiences, designed to bring ethics to the centre of professional and personal life.

Big Thinker: Matthew Liao

Matthew Liao (1972 – present) is a contemporary philosopher and bioethicist. Having published on a wide range of topics, including moral decision making, artificial intelligence, human rights, and personal identity, Liao is best known for his work on the topic of human engineering.

At New York University, Liao is an Affiliate Professor in the Department of Philosophy, Director of the Center for Bioethics, and holds the Arthur Zitrin Chair of Bioethics. He is also the creator of Ethics Etc, a blog dedicated to the discussion of contemporary ethical issues.

A Controversial Solution to Climate Change

As the climate crisis worsens, a growing number of scientists have started considering geo-engineering solutions, which involve large-scale manipulation of the environment to curb the effects of climate change. While many scientists believe that geo-engineering is our best option when it comes to addressing the climate crisis, these solutions do come with significant risks.

Liao, however, believes that there might be a better option: human engineering.

Human engineering involves biomedically modifying or enhancing human beings so they can more effectively mitigate climate change or adapt to it.

For example, reducing the consumption of animal products would have a significant impact on climate change, since livestock farming is responsible for approximately 60% of global food production emissions. But many people lack either the motivation or the willpower to stop eating meat and dairy products.

According to Liao, human engineering could help. By artificially inducing mild intolerance to animal products, “we could create an aversion to eating eco-unfriendly food.”

This could be achieved through “meat patches” (think nicotine patches but for animal products), worn on the arm whenever a person goes grocery shopping or out to dinner. With these patches, reducing our consumption of meat and dairy products would no longer be a matter of willpower, but rather one of science.

Alternatively, Liao believes that human engineering could help us reduce the amount of food and other resources we consume overall. Since larger people typically consume more resources than smaller people, reducing the height and weight of human beings would also reduce their ecological footprint.

“Being small is environmentally friendly.”

According to Liao, this could be achieved in several ways: for example, using technology typically used to screen embryos for genetic abnormalities to instead screen for height, or using hormone treatment typically used to stunt the growth of excessively tall children to instead stunt the growth of children of average height.

Reception

When Liao presented these ideas at the 2013 TED conference in New York, many audience members found the notion of wearing meat patches and making future generations smaller to be amusing. However, not everyone found these ideas humorous.

In response to a journal article Liao co-authored on this topic, philosopher Greg Bognar wrote that the authors were doing themselves and their profession a disservice by not adequately considering the feasibility or real cost of human engineering.

Although making future generations smaller would reduce their ecological footprint, it would take a long time for the benefits of this reduction in average height and weight to accrue. In comparison, the cost of making future generations smaller would be borne now.

As Bognar argues, current generations would need to devote significant resources to this effort. For example, if future generations were going to be 15-20cm shorter than current generations, we would need to begin redesigning infrastructure. Homes, workplaces and vehicles would need to be smaller too.

Liao and his colleagues do, however, recognise that devoting time, money, and brain power to pursuing human engineering means that we will have fewer resources to devote to other solutions.

But they argue that “examining intuitively absurd or apparently drastic ideas can be an important learning experience, and that failing to do so could result in our missing out on opportunities to address important, often urgent issues.”

While current generations may resent having to bear the cost of making future generations more environmentally friendly, perhaps it is a cost that we must bear.

Liao says, “We are the cause of climate change. Perhaps we are also the solution to it.”


BY The Ethics Centre

Ethics Explainer: Social philosophy

BY The Ethics Centre 7 JUL 2022

Social philosophy is concerned with anything and everything about society and the people who live in it.

What’s the difference between a house and a cave, or a garden and a field of wildflowers? There are some things that are built by people, such as houses and gardens, that wouldn’t exist without human intervention. Similarly, there are some things that are natural, such as caves and fields of wildflowers, that would continue to exist as they were without humans. However, there is a grey area in the middle that social philosophers study, including topics like gender, race, ethics, law, politics, and relationships. Social philosophers spend their time parsing what parts of the world are constructed by humans and what parts are natural.

We can see the beginnings of the philosophical debate between the social and the natural in Aristotle’s and Plato’s justifications for slavery. Aristotle believed that some people were incapable of being their own masters, and that this was a natural difference between a slave and a free person. Plato, on the other hand, believed that anyone who was inferior to the Greeks could be enslaved, a difference that was made possible by the existence of Greek society.

From the Middle Ages into the early modern period, attention turned to questioning religion and the divine right of monarchs. During this era, it was widely believed that monarchs were given their authority by God, which was why they had so much more power than the average person. English philosopher John Locke is well known for arguing that every man was created equal, and that everyone had an equal right to life, liberty and property. His conclusion was that these fundamental rights were natural to everyone, which contradicted the social norms that gave almost unlimited power to monarchs. Accepting that a monarch naturally had the same rights as someone who worked the land would require a profound change to the structure of society.

During the 19th century, some philosophers began to question social categories and where they came from. Many people at the time held that social classes, or groups of people of the same socioeconomic status, were the result of biological, or natural, differences between people. Karl Marx, known for his 1848 pamphlet The Communist Manifesto, proposed his own theory of social class. He argued that the socioeconomic differences that divided people into classes were a result of the type of work someone did, and therefore that social classes were socially, not biologically, constructed.

Today, social philosophers are concerned with a variety of questions, including questions about race, gender, social change, and the institutions that contribute to inequality. One social philosopher who studies gender and race is Sally Haslanger, who has spent her career asking what the defining characteristics of gender and race are, and where these characteristics come from. In other cases, social philosophy is blended with cognitive psychology and behavioural studies, asking which of our behaviours are influenced by the society we live in and which are “natural,” or a product of our biology.

Social philosophy and ethics

Many of the questions social philosophers are concerned with are intertwined with ethics. Part of living in a society requires an (often unwritten) ethical code of conduct that ensures everything functions smoothly.

Thomas Hobbes’ social contract theory spells out the connection between a society and ethics. Hobbes believed that instead of ethics being something that existed naturally, a code of ethics and morality would arise when a group of free, self-interested, and rational people lived together in a society. Ethics would arise because people would find that better things could come from working together and trusting each other than would arise from doing everything on their own.

Today, much of how we act is determined by the societies we live in. The kinds of clothes we wear, the media we interact with, and how we talk to each other change depending on the norms of our society. This can complicate ethics: should we change our ethical code when we move to a different society with different norms? For example, one culture may say that it’s morally acceptable to eat meat, while a different culture may not. Should a person have to change the way they act when moving from the meat-eating culture to the non-meat-eating culture? Moral relativists would say it is possible for both cultures to be morally right, and that we should act according to whichever culture we are interacting with.

A significant reason that social philosophy is still such a nebulous field is that everyone has different life experiences and interacts with society differently. Additionally, different people feel like they owe different levels of commitment to the people around them. Ultimately, it’s a serious challenge for philosophers to come up with social theories that resonate with everyone the theory is supposed to include.


BY The Ethics Centre

Enough with the ancients: it's time to listen to young people

BY Daniel Finlay and Anna Goodman 6 JUL 2022

Nearly 20% of Australia’s population is between the ages of 10 and 24, yet their social and political voices are almost unheard. In our effort to amplify these voices, The Ethics Centre will be hosting a series of workshops where young people can help us better understand the challenges they face and the best ways for us to help. We’re listening.

You’re sitting at the dinner table at a big family gathering. Conversation starts to die down and suddenly your uncle says: “Have you seen that Greta girl on the news? I understand that climate change is a big deal, but the kids these days are so angry and loud. They’d get more done if they showed some respect.”

Many people under 25 have been in this position and had to decide how to respond. The decision is often harder than it looks, because none of the options seems preferable. Philosopher and feminist theorist Marilyn Frye gave a name to this kind of situation: a double-bind. In her essay Oppression, she defines the double-bind as a “situation in which options are reduced to a very few and all of them expose one to penalty, censure, or deprivation.”

Frye originally used the double-bind to talk about how women often found themselves in situations where they were going to be criticised equally for engaging with or ignoring gender stereotypes. The double-bind can also explain the difficult position of anyone subject to a negative stereotype, and it offers insight into why people with important perspectives often feel the need to censor themselves.

Let’s say we do choose to speak up. We can justify our anger. There are so many huge issues that impact the world – climate change, the pandemic, rampant inequality, and so on – and it feels like things are changing far too slowly.

Young people especially should be allowed to be angry, because this is the world we will inherit.

Unfortunately, it’s a common experience for younger generations to feel that their voices aren’t listened to or respected. Even though these reasons should more than justify the anger and frustration of young people, emotion can often (unjustly) obfuscate the reality of what we say.

So, let’s try the other way. We choose to not engage and instead let the comment slide. However, then we’re at risk of being seen as the “apathetic teen,” a narrative that has been perpetuated ad nauseam claiming that young people don’t really care about anything (which we know isn’t true).

Young people care about a lot, and have a lot to care about. Not only do they care, they act. A recent survey of 7,000 young people found that two-thirds of respondents seek out ways to get involved in issues they care about, and 64% believe that it is their personal responsibility to get involved in important issues. So, it’s not always easy to just let your uncle’s tone-policing go when you feel passionate about a topic, especially when staying silent can be as damaging as speaking up.

Here we see the double-bind in action: neither of the most obvious responses to the situation is favourable, and neither is clearly preferable to the other. Because of a build-up of social and cultural assumptions and expectations, we’re often placed in a position where we seem to lose in some way no matter what we decide to do.

The Australian youth experience

Unfortunately, age discrimination towards young people doesn’t end at the dinner table. A 2022 survey conducted by Greens Senator Jordon Steele-John found that “overwhelmingly, young people are feeling ignored and overlooked”. Gen Z (people born between 1995 and 2010) are more likely to be viewed as “entitled, coddled, inexperienced and lazy,” which is having negative effects on young people’s confidence in the workplace. It doesn’t help that young people are hugely underrepresented in the Australian government and positions of power in the private sector.

Young people should not have to convince everyone that their voices are worth listening to. The combination of endless global issues and lack of representation in positions of power, which is compounded by a culture that doesn’t give appropriate weight to their contributions, creates a climate that leaves young people feeling frustrated and disempowered.

So, what can we do? As with most social issues, there isn’t one simple fix for the underrepresentation and misrepresentation of youth, because it stems from several factors ingrained in our society and culture. We can question our own assumptions and those of others by recognising that “youth” as a social or cultural category isn’t really coherent anymore. There has been an enormous rise in the number of subcultures, increasingly interconnected thanks to mass media and the internet, meaning that “young people” are more diverse than ever before.

Most importantly, we can bring young people together and into spaces where their voices will be heard by people who are in a position to make change.

As part of our mission to do just that, The Ethics Centre is developing a growing number of youth initiatives, like the Youth Advisory Council and the Young Writer’s Competition.

Through these initiatives, we are starting an ongoing conversation with young people about the areas of their lives and futures where they think ethics is most needed.


BY Daniel Finlay

Daniel is a philosopher, writer and editor. He works at The Ethics Centre as Youth Engagement Coordinator, supporting and developing the futures of young Australians through exposure to ethics.

BY Anna Goodman

Anna is a graduate of Princeton University, majoring in philosophy. She currently works in consulting, and continues to enjoy reading and writing about philosophical ideas in her free time.

(Roe)ing backwards: A seismic shift in women's rights

BY Mehhma Malhi 4 JUL 2022

Standing in the middle of Washington Square Park in downtown Manhattan, on the 24th of June, I propelled a sign skyward that read: Abortion Is Healthcare. There were thousands of other slogans, on posters and placards, all being hoisted repeatedly by protesters equally aggrieved by the overturning of Roe v. Wade.

Earlier that same day, at 10 AM, the United States Supreme Court had overturned Roe v. Wade, the landmark 1973 ruling. For nearly half a century, the decision had protected the constitutional right to privacy – ensuring that every woman in the US could obtain an abortion without fear of criminal penalty. But overturning this landmark case has unfortunately handed the regulation of abortion back to each individual state. Now, approximately 20 US states are set to once again criminalise or entirely outlaw access to abortion, despite two-thirds of the country’s citizens being in favour of abortion.

So how will this loss of privacy constrain women’s autonomy?

The right to an abortion protects women from bodily harm, insecure financial circumstances, and emotional grief. The medical procedure allows a woman to maintain bodily autonomy by affording the choice to decide when, or if, she ever wants a child. Abortion acknowledges a woman’s right to live life as she intends. Banning abortion severely compromises that choice. Further, it does not reduce abortion rates but instead forces women to seek abortion elsewhere or by unsafe means. In 2020, over 900,000 legal abortions were conducted in the United States by professionals or by mothers using medication prescribed by physicians.

Banning abortion places a hefty burden on women, suppressing their autonomy.

The Legal Disparity Pre-Roe

Before Roe, women in the United States had minimal access to legal abortions, which were usually available only to high-income families. Illegal abortions were unsafe, and in 1930 they were the cause of nearly 20% of maternal deaths. This is because many women relied on self-induced abortions or asked community members for assistance. Given the lack of medical experience, botched procedures and infections were rife.

Pre-Roe abortion bans harmed and further disadvantaged predominantly low-income women and women of colour. In 1970 some states allowed abortion. However, without national support, women often had to travel long distances for many hours, which placed their health at significant risk. Once again, this limited access created unequal outcomes, since only women with the means to travel and financial security could obtain care.

Post-Roe Injustice

Post-Roe, the outcomes appear just as grim. In a digital landscape, technology brings benefits but also comes at a cost, introducing new vulnerabilities and concerns for women. While technology helps women seeking abortion to find clinics, book appointments and arrange interstate travel, it can also amplify the persecution of women when abortion is criminalised. For example, in 2017, Mississippi prosecutors used a woman’s internet search history to show that she had looked up where to find abortion pills before she lost her foetus. Since the recent ruling, some clinics are scrambling to encrypt their data, while others are resorting to paper records to protect their patients’ sensitive information from being tracked or leaked.

Unfortunately, there are also concerns that data from period-tracking apps and location services could be used to further restrict women from accessing abortions. In previous years, prosecutors and law enforcement have secured wrongful convictions of women for illegal abortions by searching their online history and text messages with friends. Now that abortion is criminalised in some states, there are fears that more private information could be obtained and used against women in court, specifically information sold by third-party apps, which in some states could be accessed without women’s consent. This would further isolate women and reduce their ability to receive competent care.

The United States Department of Health and Human Services, known as HHS, released a statement on June 29th about protecting patient privacy for reproductive health. It states that “disclosures to law enforcement officials, are permitted only in narrow circumstances” and that in most cases the Health Insurance Portability and Accountability Act (HIPAA), the key federal health privacy law, “does not protect the privacy or security of individuals’ health information” when it is stored on their phones. The guidance goes on to suggest how women can best protect their online information. To defend themselves, women must take extra precautions, such as using privacy-focused browsers, turning off location services and using separate email addresses.

These additional measures are troubling because they dictate how women receive care. By placing the onus on women, they allow the risk of information leaking to limit access to resources and further restrict the privacy and autonomy of pregnant women, creating a fear of constant surveillance.

Further, it places people seeking care at a significant disadvantage if they do not know what information is protected and what is not.

Post-Roe, the medical landscape will also begin to shift. Abortion care is often bound up with other procedures conducted by obstetricians and gynaecologists (OB-GYNs). It can overlap with miscarriage aftercare and the treatment of ectopic pregnancies, creating murky circumstances for physicians and delaying care for patients while physicians wait for legal advice. In other cases, abortion care is necessary when pregnant women have cancer and need to terminate the pregnancy to continue with chemotherapy.

Abortion restrictions will leave many physicians unable to provide adequate care and fulfil their duty to patients, as they will be bound by laws under which they risk prosecution if they continue to practise. Any delay in receiving an abortion is an act of maleficence. As the windows to receive abortions grow increasingly slim and the restrictions grow tighter, providers are inhibited from treating women seeking an abortion, which obstructs beneficent care. As a result, many women will lose their lives from preventable and treatable causes.

Criminalising abortion will warp access and create unnavigable procedural labyrinths that will change the digital and medical landscape. Post-Roe United States will continue to breed fear and control over women’s lives. Criminalising abortion will isolate women from their communities and obstruct them from receiving competent medical care and treatment. Banning abortions will place an undue burden on women and will unfairly jeopardise their health and right to access care.


BY Mehhma Malhi

Mehhma recently graduated from NYU, majoring in Philosophy and minoring in Politics, Bioethics, and Art. She is now continuing her study at Columbia University, pursuing a Master of Science in Bioethics. She is interested in refocusing the news to discuss why and how people form their personal opinions.

Money talks: The case for wage transparency

BY Jack Derwin 30 JUN 2022

Sex, death, politics, money. No matter how much some things change, some taboos stubbornly live on. But when it comes to the matter of wages, our silence on the subject is only hurting ourselves.

As we’ve discussed, radical transparency – when implemented with care – can help build trust and accountability. This openness not only assists in identifying where we stand but also in charting the necessary path forward.

Yet while the public conversation around wage inequality has never been louder, we remain remarkably tight-lipped on the topic of pay. Opening up a dialogue about our salaries may just be the first step to putting us all on equal footing.

A raw deal

While workplace discrimination exists in many forms, the gender pay gap has become the most identifiable indicator of its prevalence in the workplace.

Right now in Australia women are paid nearly 14% less than men, according to government data – slightly above the average recorded across other OECD nations. While over time the difference is narrowing, progress is predictably slow across the developed world.

This may partly be attributed to a lack of accountability among some businesses. During this year’s International Women’s Day (IWD) for example, the rhetoric of British businesses was challenged by a Twitter bot programmed specifically for the occasion.

Any tweet celebrating IWD from an official corporate account was met with an automated response, publishing the official pay gap at that specific company. The difference – often a percentage in the double digits – painted a bleak view of the current state of affairs.

But more importantly, the stunt quantified the issue at an organisational level and provided a useful reminder: by measuring the problem, we can manage it. By drawing public attention to specific companies, the bot held workplaces accountable on a case-by-case basis and drew a line in the sand.

Indeed, employment experts suggest this kind of open wage dialogue could be an important weapon in fighting wage inequality. Government research highlights that within Australian organisations where there is wage transparency, the gender gap is narrowing by 3.3% per year. While this may be due to many factors, transparency is at least helpful in tracking improvement over time.

Hush money

The argument for greater openness is increasingly being recognised. Last year in Australia, the then Federal Opposition proposed outlawing pay secrecy clauses which explicitly prevent colleagues from discussing their pay packets.

In the financial services sector, pay secrecy clauses have historically been commonplace, with one study estimating that women at Australia’s largest bank are collectively paid $500 million less than their male peers. The industry union has used such figures to rally for greater transparency, amid several industrial cases in which employees were actually dismissed for disclosing their pay.

The campaign has worked. Australia’s big four banks – ANZ, NAB, Westpac and the Commonwealth Bank – all recently scrapped their pay secrecy clauses. Staff can now discuss their pay packets, should they wish, without fear of retribution from their employer. Given the four organisations employ more than 160,000 Australians between them, it’s no small achievement.

Global view

Many countries around the world, including the United States and United Kingdom, have already nullified these provisions and have been clear in justifying why. The Obama administration’s 2014 executive order doing so, for example, linked them to employer discrimination and market inefficiency.

But governments are also taking additional steps to proactively open up the conversation around remuneration. Many, including the UK, now require publicly-listed companies and other employers to publish the average pay ratio between CEO and worker.

Similar laws in Australia, in operation since 2012, explicitly require such reporting for the purpose of closing the gender wage gap. In fact, around half of all OECD nations have comparable mandates.

Germany has gone one step further. Female workers can not only find out how much their male colleagues are making but are now also permitted to demand the median wage of a group doing the same job.

Notably, some corporations are even using transparency to attract talent in an extremely tight labour market. PwC became the first big consultancy firm in Australia to publish its own pay bands in a bid to find the best people, although it’s worth pointing out that the breadth of each band does little to specify pay for any particular job.

More to be done

While greater transparency is helping to hold feet to the fire, it is clear that the initiatives described above are just a start.

A recent OECD report, for example, points out that we’re far from anything resembling ‘radical transparency’. While around half of the 38 member nations publish company-wide figures, more can be done to turn that information into meaningful action.

At the moment, only a limited number of companies are required to report any pay data at all, with most countries drawing the line at large publicly listed entities. The pay data these organisations do provide is also often limited in scope.

Annual auditing of the published information and a strong independent regulator to oversee it are just two important future steps prescribed by the OECD. Any requirements need to be legally enforceable, it argues, and there need to be penalties for those found flouting the rules, as is already the case in Iceland.

Without these additional changes, workers aren’t actually in a better position to negotiate, particularly when they come from groups that have historically been marginalised in the workplace. Instead, transparency can simply leave them more acutely aware of their disadvantage, with little practical means to address it.

The Norway Experiment

This conclusion is backed up by the experience of Norway, which has been trialling a form of radical transparency for years.

Norway’s tax office annually publishes every individual’s income on the public record. It also reveals the value of their assets and how much tax they paid. The idea is that in a country that leans socialist, trust must be maintained in the taxation system that supports it.

The experiment has largely been fruitful. Norway has a strong tradition of collective bargaining and a gender gap that is ranked the third smallest in the world.

Naturally it’s difficult to conclude what came first: Norway’s relatively equal pay or the country’s unusual wage transparency. In all likelihood, these factors are mutually dependent.

However, the Norwegian experiment also reveals the pitfalls of radical transparency and the natural threat it poses to personal privacy.

In 2001, the country digitised its records, making them instantly searchable from any personal computer. While the records had been public for decades, this move eliminated the need to line up and leaf through the single printed book held in each municipality.

This digitalisation may have been a step too far. A study published by the American Economic Association (AEA) found that the happiness of Norwegians became almost 30% more closely correlated with their income level after 2001, but only among those with good internet access.

The study’s hypothesis is that those who could easily look up the incomes of their colleagues, friends and family did so. Those who discovered that their own incomes paled in comparison seem to have suffered emotionally as a result, even in relatively equal Norway.

In other words, the old axiom that ‘comparison is the thief of joy’ rings true. Significantly, in 2014 Norway made searches a matter of public record as well, so that people could see who had looked up their income. The volume of queries residents made about their neighbours immediately fell by 90%, presumably making for a far happier nation.

Lesson learned

The Norwegian experience offers a cautionary tale about the excesses of radical transparency. Specifically, it shows that wage data that is instantly available and that personally identifies individuals without their consent can do more harm than good. Careful safeguards will be needed to ensure that workers can protect their own privacy.

More broadly, these examples suggest that information alone is not sufficient to prevent discrimination in the workplace. While it can serve as an important tool in, for example, bridging the gender wage gap, it needs to be carefully deployed alongside other policies that measure progress, empower staff, and punish employers that deliberately mislead or discriminate.

Yet greater transparency clearly does have an important role to play. It helps keep workers informed of where they stand in relation to their colleagues. Making this kind of data public also makes sense given that differences in pay need to be quantified before they can be rectified. It also helps countries measure their progress to date and the effectiveness of their actions going forward.

Ultimately, transparency is not a silver bullet; rather, it is a means to an end. Properly informed and equally empowered, workers can finally begin to level the playing field.
